Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are transforming modern applications, enabling intelligent automation, predictive analytics, and personalized experiences. Google Cloud Platform (GCP) provides a robust ecosystem of AI and ML tools, including TensorFlow, AutoML, and Vertex AI, that cater to both beginner and advanced data scientists.

At Curiosity Tech, engineers gain hands-on experience with these tools to design, train, deploy, and scale ML models efficiently while following cloud-native best practices.

Why AI & ML on GCP?

GCP offers managed infrastructure, so teams do not have to set up GPUs, TPUs, or distributed training environments by hand. Some core benefits include:

- Scalability: Train models on large datasets without infrastructure bottlenecks.

- Ease of Use: AutoML allows non-experts to build models with minimal coding.

- Integration: Seamlessly connect ML models to other GCP services like BigQuery, Cloud Storage, and Cloud Functions.

- Cost Efficiency: Pay only for compute and storage resources used.

GCP bridges the gap between data analysis and intelligent application development, making AI/ML accessible for cloud engineers and developers alike.

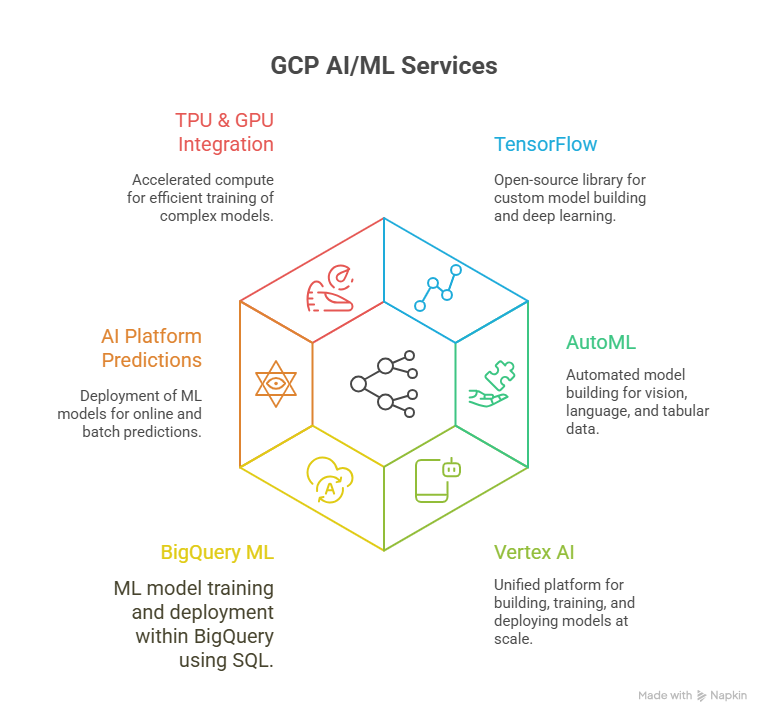

Core AI & ML Services in GCP

| Service | Purpose |
| --- | --- |
| TensorFlow | Open-source ML library for building custom models and deep learning. |
| AutoML | Build models automatically with minimal coding for vision, language, and tabular data. |
| Vertex AI | Unified ML platform for building, training, and deploying models at scale. |
| BigQuery ML | Train and deploy ML models directly inside BigQuery using SQL queries. |
| AI Platform Prediction | Legacy service for online or batch predictions; superseded by Vertex AI endpoints and batch prediction. |
| TPU & GPU Integration | Accelerated compute for training complex models efficiently. |
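To make the BigQuery ML row concrete, here is a minimal sketch of training a model with a SQL statement submitted from Python. The dataset, table, model, and column names (`housing.sales`, `housing.price_model`, `price`) are hypothetical placeholders, and the snippet assumes the `google-cloud-bigquery` client library with application default credentials.

```python
def build_create_model_sql(model: str, source_table: str, label: str) -> str:
    """Return a BigQuery ML statement that trains a linear regression model."""
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = 'linear_reg', input_label_cols = ['{label}']) AS\n"
        f"SELECT * FROM `{source_table}`"
    )


def train_in_bigquery(sql: str) -> None:
    """Submit the statement and wait for training to finish."""
    # Imported lazily so the SQL builder works without the client installed.
    from google.cloud import bigquery

    client = bigquery.Client()   # uses application default credentials
    client.query(sql).result()   # blocks until the training job completes


if __name__ == "__main__":
    # Hypothetical names; replace with your own dataset, table, and label column.
    print(build_create_model_sql("housing.price_model", "housing.sales", "price"))
```

Keeping the SQL in a small builder function makes the statement easy to review before it is submitted as a billable query.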

Diagram Concept: GCP AI/ML Workflow

TensorFlow on GCP

TensorFlow is ideal for custom model development. With GCP:

- Compute Options: Use Compute Engine, GKE, or Vertex AI for distributed training.

- TPU Support: Train deep learning models on Cloud TPUs, which are built for the large matrix operations that dominate neural network training.

- Integration: Connect TensorFlow models to BigQuery or Cloud Storage for seamless data access.

- Deployment: Export models to Vertex AI for production-ready serving.

Example: Image classification using TensorFlow and Cloud Storage:

- Upload dataset to Cloud Storage.

- Preprocess images using TensorFlow pipelines.

- Train a CNN model on a TPU or GPU instance.

- Evaluate and export model for deployment.
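The four steps above can be sketched roughly as follows. This is an illustrative outline, not a tuned training script: it assumes JPEG images stored as `gs://<bucket>/<split>/<class>/<file>.jpg` (a hypothetical layout), a small hand-picked CNN, and TensorFlow 2.13 or newer for `Model.export`. As written it runs on CPU or GPU; training on a TPU would additionally require a `tf.distribute.TPUStrategy` scope.

```python
def label_from_path(path: str, class_names: list[str]) -> int:
    """Class index for a path shaped like gs://bucket/train/<class>/<file>.jpg."""
    return class_names.index(path.rstrip("/").split("/")[-2])


def make_dataset(pattern: str, class_names: list[str], image_size: int = 128, batch_size: int = 32):
    """Build a tf.data pipeline that reads and resizes images matching `pattern`."""
    import tensorflow as tf  # lazy import keeps label_from_path usable without TF

    paths = tf.io.gfile.glob(pattern)  # also accepts gs:// patterns
    labels = [label_from_path(p, class_names) for p in paths]

    def load(path, label):
        image = tf.io.decode_jpeg(tf.io.read_file(path), channels=3)
        return tf.image.resize(image, (image_size, image_size)), label

    ds = tf.data.Dataset.from_tensor_slices((paths, labels))
    return ds.shuffle(len(paths)).map(load).batch(batch_size).prefetch(tf.data.AUTOTUNE)


def build_cnn(num_classes: int, image_size: int = 128):
    """A deliberately small CNN; layer sizes are illustrative assumptions."""
    import tensorflow as tf

    return tf.keras.Sequential([
        tf.keras.Input(shape=(image_size, image_size, 3)),
        tf.keras.layers.Rescaling(1.0 / 255),              # scale pixels to [0, 1]
        tf.keras.layers.Conv2D(32, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(64, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(num_classes),                # raw logits
    ])


def train_and_export(train_pattern, val_pattern, class_names, export_dir, epochs=3):
    """Train, evaluate on held-out images, and export a SavedModel."""
    import tensorflow as tf

    model = build_cnn(len(class_names))
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )
    model.fit(make_dataset(train_pattern, class_names), epochs=epochs)
    _, accuracy = model.evaluate(make_dataset(val_pattern, class_names))
    model.export(export_dir)  # writes a SavedModel; export_dir may be a gs:// path
    return accuracy
```

A call such as `train_and_export("gs://my-bucket/train/*/*.jpg", "gs://my-bucket/val/*/*.jpg", ["cats", "dogs"], "gs://my-bucket/models/v1")` would cover all four steps, where the bucket and class names are placeholders.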

AutoML on GCP

AutoML allows engineers without extensive ML expertise to build high-performing models.

- AutoML Vision: Detect objects, classify images, and extract insights.

- AutoML Natural Language: Sentiment analysis, entity extraction, and text classification.

- AutoML Tables: Predict outcomes from structured/tabular data.

Benefits:

- Minimal coding required

- Automated hyperparameter tuning

- Built-in evaluation metrics and dashboards

Example: Predict customer churn from tabular data:

- Upload customer dataset to AutoML Tables.

- Select target variable (churn).

- AutoML automatically trains multiple models, optimizes hyperparameters, and selects the best-performing model.

- Deploy for predictions directly or via API.
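For teams that prefer code over the console, the churn workflow above might look like the sketch below. It assumes the Vertex AI SDK for Python (`google-cloud-aiplatform`), through which AutoML tabular training is exposed, and a CSV already uploaded to Cloud Storage. The display names, the `churn` column name, and the one-node-hour budget are illustrative assumptions.

```python
def to_milli_node_hours(node_hours: float) -> int:
    """Vertex AI expresses the training budget in thousandths of a node hour."""
    return int(round(node_hours * 1000))


def train_churn_model(project: str, location: str, csv_uri: str, node_hours: float = 1.0):
    """Create a tabular dataset and let AutoML train a churn classifier."""
    # Imported lazily so the budget helper works without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)

    dataset = aiplatform.TabularDataset.create(
        display_name="customer-churn",        # hypothetical name
        gcs_source=[csv_uri],                 # e.g. a gs:// path to the CSV
    )
    job = aiplatform.AutoMLTabularTrainingJob(
        display_name="churn-automl",          # hypothetical name
        optimization_prediction_type="classification",
    )
    # AutoML handles model search and hyperparameter tuning within the budget.
    return job.run(
        dataset=dataset,
        target_column="churn",                # assumed label column
        budget_milli_node_hours=to_milli_node_hours(node_hours),
    )
```

The returned model object can then be deployed to an endpoint or used for batch prediction.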

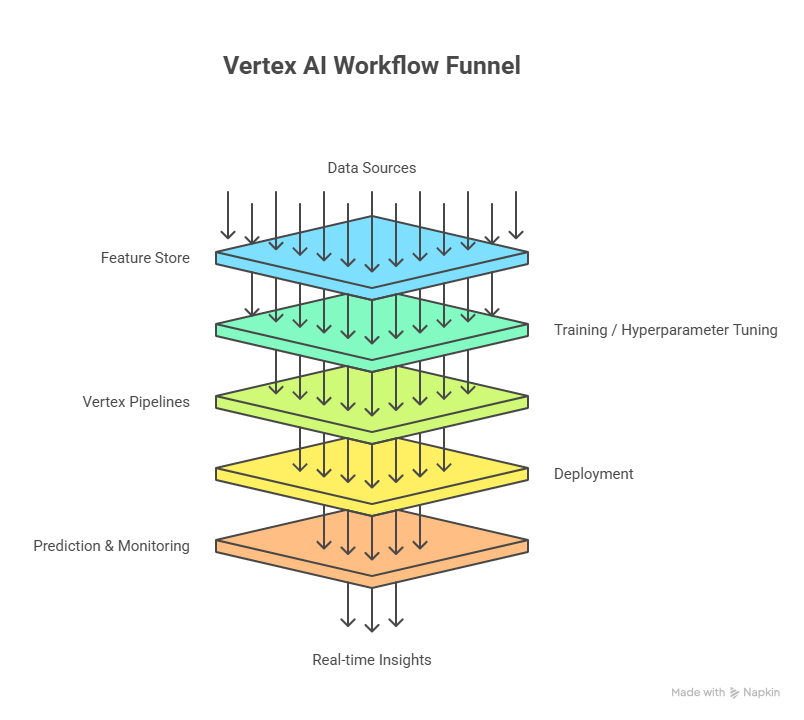

Vertex AI Overview

Vertex AI unifies data preparation, training, deployment, and monitoring into a single platform.

Core Features:

- Vertex Pipelines: Automate ML workflows end-to-end.

- Custom Training: Run TensorFlow, PyTorch, or scikit-learn models.

- Managed Endpoints: Deploy models for online predictions with auto-scaling.

- Feature Store: Reuse features across multiple models for consistency.

- Model Monitoring: Track drift, performance degradation, and bias over time.
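As an illustration of the managed endpoints feature, the following sketch uploads a trained model, deploys it with an auto-scaling range, and requests a prediction. It assumes the Vertex AI SDK for Python; the display name, machine type, replica counts, and the `to_instances` helper are assumptions chosen for the example, and the artifact and container URIs must be supplied by the caller.

```python
def to_instances(rows: list[dict], feature_order: list[str]) -> list[list[float]]:
    """Convert feature dicts into ordered numeric rows for a prediction request."""
    return [[float(row[name]) for name in feature_order] for row in rows]


def deploy_and_predict(project, location, artifact_uri, container_uri, instances):
    """Upload a model, deploy it to a managed endpoint, and run one request."""
    # Imported lazily so to_instances works without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)

    model = aiplatform.Model.upload(
        display_name="demo-model",                   # hypothetical name
        artifact_uri=artifact_uri,                   # gs:// folder holding the model files
        serving_container_image_uri=container_uri,   # prebuilt or custom serving image
    )
    endpoint = model.deploy(
        machine_type="n1-standard-4",
        min_replica_count=1,
        max_replica_count=3,                         # upper bound for auto-scaling
    )
    return endpoint.predict(instances=instances).predictions
```

Deployed endpoints are billed while they run, so a test endpoint should be undeployed and deleted once it is no longer needed.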

Diagram Concept: Vertex AI Components

Practical Example: Deploying an ML Model with Vertex AI

Scenario: Predict real estate prices based on property features.

- Data Preparation: Upload historical property data to BigQuery.

- Feature Engineering: Use Vertex AI Feature Store to standardize and store features.

- Training: Train a custom regression model using TensorFlow on Vertex AI.

- Evaluation: Use metrics such as RMSE and R² to select the best model.

- Deployment: Deploy model to managed endpoint for real-time predictions.

- Monitoring: Track prediction accuracy and data drift using Vertex AI monitoring tools.
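The evaluation step in this scenario relies on RMSE and R². A minimal, dependency-free sketch of both metrics is shown here; the price values in the usage note are made up for illustration.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: typical prediction error in the target's units."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r_squared(y_true, y_pred):
    """Share of the target's variance explained by the model (1.0 is a perfect fit)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))   # residual sum of squares
    ss_tot = sum((t - mean) ** 2 for t in y_true)                # total sum of squares
    return 1.0 - ss_res / ss_tot
```

For example, with actual prices `[2, 4, 6]` and predictions `[3, 4, 5]` (in arbitrary units), `rmse` gives about 0.816 and `r_squared` gives 0.75. When comparing candidate models, the one with the lower RMSE and higher R² on held-out data is usually preferred.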

Best Practices for AI & ML on GCP

- Use Managed Services: Leverage AutoML and Vertex AI to reduce operational overhead.

- Optimize Data Storage: Store datasets in Cloud Storage or BigQuery for efficient access.

- Use GPUs/TPUs Wisely: Provision accelerated compute only when the model and dataset are large enough to benefit; small models often train adequately on CPUs.

- Version Control Models: Maintain model versions in Vertex AI for reproducibility.

- Monitor Performance: Continuously track prediction accuracy and feature drift.

- Ensure Security: Use IAM roles, VPC Service Controls, and encryption for sensitive datasets.
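To give the "Monitor Performance" practice a concrete shape, here is a small sketch of one common drift score, the Population Stability Index (PSI), computed between a training-time feature sample and a recent serving sample. PSI is used here as a stand-in for illustration and is not necessarily the statistic Vertex AI computes internally; the bin count and the epsilon for empty bins are assumptions.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # fall back to 1.0 if the baseline is constant

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1   # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Identical samples score 0, and the score grows as the serving distribution moves away from the baseline, so a scheduled job could compute it per feature and alert when it crosses a threshold the team has chosen.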

Advanced Techniques

- Hyperparameter Tuning: Use Vertex AI Vizier for automated optimization.

- Explainable AI: Understand model decisions with feature attribution.

- Federated Learning: Train models across distributed datasets without sharing raw data, for example with the open-source TensorFlow Federated library.

- Integration with Pipelines: Automate end-to-end workflows using Vertex AI Pipelines.
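The federated learning idea can be illustrated with the aggregation step of federated averaging (FedAvg), in which a coordinator combines locally trained weights without ever seeing the clients' raw data. This is a simplified sketch that assumes each client reports a flat list of weights plus its sample count; a real system would also handle communication, security, and multiple training rounds.

```python
def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighting each client by its sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]
```

For instance, `federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])` returns `[2.5, 3.5]`: the second client holds three times as much data, so its weights count three times as much.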

Conclusion

AI and ML on GCP empower engineers to build intelligent, scalable, and production-ready applications. By mastering TensorFlow, AutoML, and Vertex AI, engineers can handle everything from custom model development to automated workflows, predictions, and monitoring.

At Curiosity Tech, hands-on AI/ML labs guide engineers through real-world projects, preparing them for enterprise-grade solutions, cloud certifications, and AI-driven innovation.