Introduction

In 2025, MLOps (Machine Learning Operations) and Feature Stores have become critical for ML engineers. They ensure that models are reproducible, scalable, and maintainable in production.

At CuriosityTech.in (Nagpur, Wardha Road, Gajanan Nagar), we teach learners to integrate MLOps pipelines with Feature Stores, enabling teams to manage, share, and reuse features across multiple projects while automating the ML lifecycle.

1. What is MLOps?

Definition: MLOps is the practice of applying DevOps principles to machine learning workflows, covering:

- Model development

- Deployment

- Monitoring

- Maintenance

Core Benefits:

- Reproducibility of experiments

- Continuous integration and delivery (CI/CD) of ML models

- Automated retraining and versioning

- Collaboration across teams
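In practice, "reproducibility of experiments" means recording everything needed to rerun a training job. The sketch below is illustrative only and is not any specific tool's API: it fingerprints the training data and hyperparameters with a hash, so two runs with identical inputs get the same run ID. The helper name `run_fingerprint` and the field names are hypothetical; tools such as MLflow or DVC handle this bookkeeping in real pipelines.

```python
import hashlib
import json


def run_fingerprint(rows: list[dict], params: dict) -> str:
    """Return a stable ID for a training run from its data and hyperparameters.

    Hypothetical helper for illustration: identical data + params -> identical ID.
    """
    digest = hashlib.sha256()
    # sort_keys makes the JSON encoding independent of dict key order
    digest.update(json.dumps(rows, sort_keys=True).encode("utf-8"))
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()[:12]


data = [{"customer_id": 1, "average_purchase": 42.5, "churned": 0}]
params = {"model": "logistic_regression", "C": 1.0, "seed": 7}

run_a = run_fingerprint(data, params)
# Same hyperparameters written in a different order
run_b = run_fingerprint(data, {"seed": 7, "C": 1.0, "model": "logistic_regression"})
print(run_a == run_b)  # True: same inputs, same run ID
```

Any change to the data or to a hyperparameter produces a different ID, which is what lets a team tell exactly which inputs produced which model.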

CuriosityTech Insight: At CuriosityTech, students learn that MLOps is mandatory for production-grade ML, preventing issues such as outdated models, untracked experiments, and manual workflow errors.

2. What is a Feature Store?

Definition: A Feature Store is a centralized repository for storing, managing, and serving ML features consistently across training and inference environments.

Key Benefits:

- Feature Reuse: Avoid recalculating features for each project

- Consistency: Ensure training and serving features are identical

- Collaboration: Share features across teams and models

- Monitoring: Track feature distributions for data drift
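To make "reuse" and "consistency" concrete, here is a toy sketch (not Feast or any real library) in which each feature is defined exactly once and the same function builds both training rows and serving rows. `TinyFeatureStore` and its methods are hypothetical names for illustration; a real feature store also persists the computed values and tracks their timestamps.

```python
from typing import Callable


class TinyFeatureStore:
    """Hypothetical in-memory registry of feature definitions."""

    def __init__(self) -> None:
        self._definitions: dict[str, Callable[[dict], float]] = {}

    def register(self, name: str, fn: Callable[[dict], float]) -> None:
        """Register a feature once so every project reuses the same logic."""
        if name in self._definitions:
            raise ValueError(f"feature {name!r} already registered")
        self._definitions[name] = fn

    def compute(self, names: list[str], record: dict) -> dict[str, float]:
        """Compute features through one code path for training and serving."""
        return {name: self._definitions[name](record) for name in names}


store = TinyFeatureStore()
store.register("average_purchase", lambda r: sum(r["purchases"]) / len(r["purchases"]))
store.register("total_visits", lambda r: float(r["visits"]))

customer = {"purchases": [20.0, 40.0, 60.0], "visits": 12}
training_row = store.compute(["average_purchase", "total_visits"], customer)
serving_row = store.compute(["average_purchase", "total_visits"], customer)
print(training_row)  # {'average_purchase': 40.0, 'total_visits': 12.0}
```

Rejecting duplicate registrations is one simple way to stop two teams from silently defining the same feature in two different ways.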

CuriosityTech Insight: Feature stores reduce redundancy and ensure ML pipelines are efficient and reliable, a crucial skill for ML engineers in 2025.

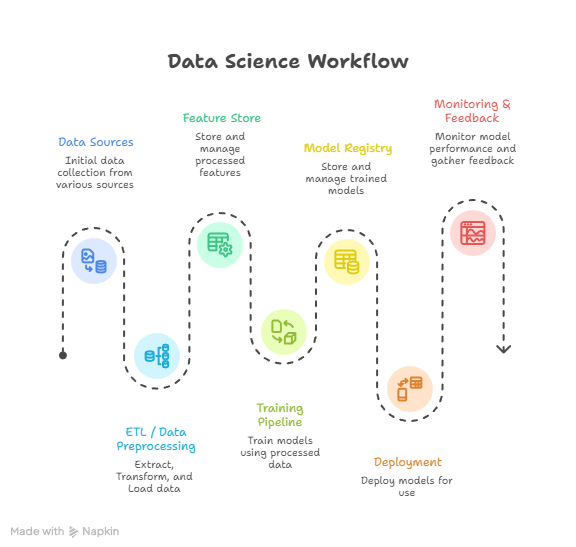

3. MLOps + Feature Store Architecture

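Diagram Description: Raw data → ETL & Data Preprocessing → Feature Store → Training Pipeline → Model Registry → Deployment → Monitoring & Feedback, which loops back into feature updates. The Feature Store also serves features directly to the deployed model for real-time inference.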

Explanation:

- ETL & Data Preprocessing: Prepare raw data for feature engineering

- Feature Store: Store features for training and real-time inference

- Training Pipeline: Pull features from the store to train models

- Model Registry: Track versions and artifacts

- Deployment: Serve models to production APIs

- Monitoring & Feedback: Track drift and performance, update features
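The flow above can be sketched as a chain of plain functions, one per stage. Every function here is a hypothetical stand-in for a real system (an ETL job, a feature store, a trainer, a registry, a serving API), and the "model" is a deliberately trivial threshold rule so the data flow stays visible; monitoring is left out for brevity.

```python
from statistics import mean


def etl(raw: list[dict]) -> list[dict]:
    """ETL stand-in: drop malformed records (missing amount)."""
    return [r for r in raw if r.get("amount") is not None]


def build_features(clean: list[dict]) -> dict[str, float]:
    """Feature Store stand-in: average purchase per customer."""
    per_customer: dict[str, list[float]] = {}
    for r in clean:
        per_customer.setdefault(r["customer_id"], []).append(r["amount"])
    return {cid: mean(amounts) for cid, amounts in per_customer.items()}


def train(features: dict[str, float]) -> dict:
    """Toy 'model': remember the mean of the feature across customers."""
    return {"threshold": mean(features.values())}


registry: list[dict] = []


def register(model: dict) -> int:
    """Model Registry stand-in: returns a version number starting at 1."""
    registry.append(model)
    return len(registry)


def predict(model: dict, avg_purchase: float) -> bool:
    """Deployment stand-in: True means 'likely to churn' in this toy rule."""
    return avg_purchase < model["threshold"]


raw = [
    {"customer_id": "a", "amount": 10.0},
    {"customer_id": "a", "amount": 30.0},
    {"customer_id": "b", "amount": 100.0},
    {"customer_id": "b", "amount": None},  # malformed, removed by etl()
]
features = build_features(etl(raw))
version = register(train(features))
print(features, version)  # {'a': 20.0, 'b': 100.0} 1
print(predict(registry[version - 1], features["a"]))  # True
```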

Scenario Storytelling: Arjun at CuriosityTech Park builds a customer churn prediction pipeline in which features such as average_purchase, last_login_days, and total_visits are stored in a Feature Store and used consistently in both training and production.
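Arjun's three churn features are simple aggregations. The sketch below computes them in plain Python from hypothetical in-memory data; the record layout, the `as_of` cutoff date, and the `churn_features` helper are assumptions for illustration. In a real pipeline this logic would live in the feature-engineering job, and its output would be written to the Feature Store.

```python
from datetime import date

# Hypothetical raw data: (customer_id, purchase_date, amount), plus login and visit info.
transactions = [
    ("c1", date(2025, 3, 1), 120.0),
    ("c1", date(2025, 3, 9), 80.0),
    ("c2", date(2025, 2, 20), 45.0),
]
last_login = {"c1": date(2025, 3, 10), "c2": date(2025, 2, 25)}
visits = {"c1": 14, "c2": 3}  # precomputed visit counts in this toy data


def churn_features(customer_id: str, as_of: date) -> dict:
    """Compute the three churn features for one customer as of a given date."""
    # Only purchases on or before the cutoff count, to avoid using future data
    amounts = [amt for cid, day, amt in transactions if cid == customer_id and day <= as_of]
    return {
        "average_purchase": sum(amounts) / len(amounts) if amounts else 0.0,
        "last_login_days": (as_of - last_login[customer_id]).days,
        "total_visits": visits[customer_id],
    }


print(churn_features("c1", date(2025, 3, 15)))
# {'average_purchase': 100.0, 'last_login_days': 5, 'total_visits': 14}
```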

4. Feature Store Types

| Type | Purpose | Example |
| --- | --- | --- |
| Online Feature Store | Low-latency features for real-time inference | Redis, Feast Online Store |
| Offline Feature Store | Batch features for model training | BigQuery, Snowflake, Feast Offline Store |

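Practical Tip: Always keep feature values consistent between the online and offline stores. If a model sees features in production that were computed differently from those it was trained on (training-serving skew), its predictions become inaccurate.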
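The two store types can be pictured as two views of the same feature values: the offline store keeps full timestamped history for training, while the online store keeps only the latest value per entity for fast lookups. In the toy sketch below, plain lists and dicts stand in for systems such as BigQuery and Redis, and all names are hypothetical. It "materializes" the latest offline values into the online store, then runs a simple online/offline consistency check. Feast provides its own materialization commands; this is not its implementation.

```python
from datetime import datetime

# Offline store stand-in: full timestamped history (what training reads).
offline = [
    {"customer_id": "c1", "ts": datetime(2025, 3, 1), "average_purchase": 90.0},
    {"customer_id": "c1", "ts": datetime(2025, 3, 8), "average_purchase": 100.0},
    {"customer_id": "c2", "ts": datetime(2025, 3, 5), "average_purchase": 45.0},
]


def materialize(rows: list[dict]) -> dict[str, float]:
    """Copy the latest value per entity into a key-value 'online store'."""
    latest: dict[str, dict] = {}
    for row in rows:
        current = latest.get(row["customer_id"])
        if current is None or row["ts"] > current["ts"]:
            latest[row["customer_id"]] = row
    return {cid: row["average_purchase"] for cid, row in latest.items()}


def skew_report(online: dict[str, float], rows: list[dict]) -> list[str]:
    """List entities whose online value differs from the latest offline value."""
    expected = materialize(rows)
    return [cid for cid, value in expected.items() if online.get(cid) != value]


online = materialize(offline)
print(online)                        # {'c1': 100.0, 'c2': 45.0}
online["c2"] = 50.0                  # simulate a stale or separately computed value
print(skew_report(online, offline))  # ['c2']
```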

5. MLOps Pipeline Stages

| Stage | Description | Tools / Platforms |
| --- | --- | --- |
| Version Control | Track code and data | Git, DVC |
| Experiment Tracking | Track hyperparameters & metrics | MLflow, Weights & Biases |
| CI/CD Pipelines | Automate testing & deployment | Jenkins, GitHub Actions, Azure DevOps |
| Model Registry | Manage model versions | MLflow, SageMaker Model Registry |
| Monitoring | Track model performance | Prometheus, Grafana, Evidently AI |
| Retraining | Automate model updates | Airflow, Kubeflow Pipelines |

Scenario: Riya at CuriosityTech.in sets up a CI/CD pipeline in which a newly trained model automatically replaces the previous version if it passes performance checks.
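Riya's promotion rule can be sketched as a tiny registry plus a gate: every trained model is registered as a new version, but it only becomes the production version if it beats the current one on a chosen metric. `ModelRegistry`, `promote_if_better`, the F1 metric, and the `min_gain` margin are all hypothetical choices for illustration; in a real setup this check would run as a CI/CD step against a registry such as MLflow's.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Hypothetical minimal registry: stores versions and tracks production."""
    versions: list[dict] = field(default_factory=list)
    production: Optional[int] = None  # index into versions

    def register(self, name: str, metrics: dict[str, float]) -> int:
        self.versions.append({"name": name, "metrics": metrics})
        return len(self.versions) - 1

    def promote_if_better(self, candidate: int, metric: str = "f1", min_gain: float = 0.0) -> bool:
        """Promote the candidate only if it beats the current production model."""
        new_score = self.versions[candidate]["metrics"][metric]
        if self.production is not None:
            old_score = self.versions[self.production]["metrics"][metric]
            if new_score <= old_score + min_gain:
                return False  # fails the performance check; keep the current model
        self.production = candidate
        return True


registry = ModelRegistry()
v0 = registry.register("churn-model", {"f1": 0.71})
registry.promote_if_better(v0)  # the first model goes straight to production
v1 = registry.register("churn-model", {"f1": 0.69})
print(registry.promote_if_better(v1))  # False: worse than v0
v2 = registry.register("churn-model", {"f1": 0.74})
print(registry.promote_if_better(v2, min_gain=0.01))  # True: 0.74 beats 0.71 + 0.01
print(registry.production)  # 2
```

Keeping rejected versions in the registry (rather than discarding them) preserves the audit trail of what was tried.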

6. Hands-On Example: Feature Store Integration

- Prepare Dataset: Customer transactions data

- Create Features: Compute average_purchase, purchase_frequency, last_login_days

- Store Features: Use Feast (Feature Store)

- Pull Features for Training: Retrieve features consistently

- Train Model: Use XGBoost or Logistic Regression

- Deploy Model: Integrate with API endpoint for predictions

Python Snippet (Feast Integration):

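```python
from datetime import datetime

import pandas as pd
from feast import FeatureStore

# Initialize the feature store from the Feast repo in the current directory
fs = FeatureStore(repo_path=".")

# Entity DataFrame: which customers, and as of which time, to fetch features for.
# Illustrative rows; the "customer_id" join key is an assumption and must match
# the entity defined in your feature repo.
customer_df = pd.DataFrame({
    "customer_id": [1001, 1002, 1003],
    "event_timestamp": [datetime(2025, 1, 15)] * 3,
})

# Pull features for training; refs use the "feature_view:feature" format
training_df = fs.get_historical_features(
    entity_df=customer_df,
    features=[
        "customer:average_purchase",
        "customer:purchase_frequency",
        "customer:last_login_days",
    ],
).to_df()
```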

Practical Insight: Because training and serving read the same registered feature definitions, a Feature Store improves reproducibility and reduces errors caused by inconsistent feature computation.
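Part of that reproducibility comes from how `get_historical_features` joins data: Feast performs a point-in-time join, so each row of the entity DataFrame only sees feature values whose timestamps are at or before that row's timestamp (conventionally an `event_timestamp` column). This keeps future information from leaking into training data. The plain-Python sketch below illustrates the idea on hypothetical data; it ignores details such as Feast's TTL handling and is not how Feast implements the join internally.

```python
from datetime import datetime

# Feature history for one feature view (hypothetical data).
feature_rows = [
    {"customer_id": "c1", "ts": datetime(2025, 1, 1), "average_purchase": 80.0},
    {"customer_id": "c1", "ts": datetime(2025, 2, 1), "average_purchase": 95.0},
    {"customer_id": "c1", "ts": datetime(2025, 3, 1), "average_purchase": 110.0},
]

# Entity rows: "give me the features as they were at this moment".
entity_rows = [
    {"customer_id": "c1", "event_timestamp": datetime(2025, 2, 15)},
    {"customer_id": "c1", "event_timestamp": datetime(2024, 12, 1)},
]


def point_in_time_join(entities: list[dict], history: list[dict]) -> list[dict]:
    """Attach the latest feature value at or before each entity row's timestamp."""
    result = []
    for ent in entities:
        candidates = [
            row for row in history
            if row["customer_id"] == ent["customer_id"] and row["ts"] <= ent["event_timestamp"]
        ]
        latest = max(candidates, key=lambda row: row["ts"], default=None)
        value = latest["average_purchase"] if latest else None
        result.append({**ent, "average_purchase": value})
    return result


for row in point_in_time_join(entity_rows, feature_rows):
    print(row["event_timestamp"].date(), row["average_purchase"])
# 2025-02-15 95.0
# 2024-12-01 None
```

Note that the March value (110.0) is never used for the February row, even though it exists in the history: that is exactly the leakage a naive "latest value" join would introduce.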

7. Monitoring Features & Models

- Feature Drift: Monitor changes in feature distributions over time

- Model Drift: Detect decline in accuracy, precision, or F1-score

- Alerting: Set thresholds to trigger automated retraining

- Visualization: Use Grafana or Evidently AI for dashboards
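Feature drift is often quantified with the Population Stability Index (PSI), which compares how a feature's values are spread across bins in a baseline sample versus a recent one. The sketch below computes PSI in plain Python and raises an alert above 0.2, a commonly cited rule-of-thumb threshold rather than a universal standard; the bin edges and sample values are hypothetical. Tools such as Evidently AI offer PSI and other drift tests out of the box.

```python
import math


def psi(baseline: list[float], current: list[float], edges: list[float]) -> float:
    """Population Stability Index of `current` relative to `baseline`.

    `edges` are bin boundaries; values beyond the outer edges fall into the end bins.
    """
    def proportions(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) - 1)
        for v in values:
            # bin index = number of inner edges at or below v
            counts[sum(1 for e in edges[1:-1] if v >= e)] += 1
        # floor empty bins at a tiny share so log() stays defined
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


edges = [0, 50, 100, 150, 200]  # hypothetical bins for average_purchase
baseline = [40, 45, 55, 60, 65, 70, 80, 90, 95, 110]         # e.g. training period
current = [90, 105, 110, 120, 125, 130, 140, 155, 160, 170]  # e.g. a seasonal peak

DRIFT_THRESHOLD = 0.2  # common rule of thumb, not a universal standard
score = psi(baseline, current, edges)
if score > DRIFT_THRESHOLD:
    print(f"Drift alert on average_purchase (PSI={score:.2f}): trigger retraining")
```

In an automated pipeline, the alert branch would call the retraining job instead of printing.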

Scenario Storytelling: Arjun notices a shift in the average purchase amount caused by seasonal trends. Automated alerts trigger feature recomputation and model retraining, which keeps prediction accuracy stable.

8. Best Practices

- Centralize Feature Management: Avoid duplicated computations

- Track Experiments & Model Versions: Use MLOps tools for reproducibility

- Automate Pipelines: Retraining, evaluation, and deployment should be automatic

- Monitor Continuously: Both features and model predictions

- Ensure Security & Governance: Control access to feature data and models

CuriosityTech.in teaches learners to implement end-to-end MLOps pipelines with integrated feature stores, enabling real-world production readiness.

9. Real-World Applications

| Industry | Use Case | Notes |
| --- | --- | --- |
| Retail | Customer churn prediction | Features reused across multiple models |
| Finance | Fraud detection | Real-time features from transactions |
| Healthcare | Disease risk prediction | Monitored features for compliance |
| E-commerce | Recommendation engine | Features consistently served for training & inference |
| IoT | Predictive maintenance | Automated retraining based on feature drift |
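For the fraud-detection row, "real-time features from transactions" typically means windowed aggregates that are updated as events arrive and pushed to the online store. A minimal sketch, assuming events for each card arrive in timestamp order: the `RollingTxnCount` class and the 10-minute window are hypothetical choices, and a production system would usually compute this in a stream processor rather than in-process Python.

```python
from collections import deque
from datetime import datetime, timedelta


class RollingTxnCount:
    """Hypothetical streaming feature: transactions per card within a time window."""

    def __init__(self, window: timedelta) -> None:
        self.window = window
        self.events: dict[str, deque] = {}

    def update(self, card_id: str, ts: datetime) -> int:
        """Record a transaction and return the count in the window (ts - window, ts]."""
        q = self.events.setdefault(card_id, deque())
        q.append(ts)
        # Evict events that fell out of the window (assumes in-order arrival)
        while q and q[0] <= ts - self.window:
            q.popleft()
        return len(q)


feature = RollingTxnCount(window=timedelta(minutes=10))
t0 = datetime(2025, 5, 1, 12, 0)
counts = [feature.update("card-42", t0 + timedelta(minutes=m)) for m in (0, 2, 3, 11, 12)]
print(counts)  # [1, 2, 3, 3, 3]
```

A sudden jump in this count for one card is the kind of signal a fraud model can only use if the feature is served with low latency from the online store.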

10. Key Takeaways

- MLOps ensures reproducible, scalable, and maintainable ML workflows

- Feature Stores provide centralized, consistent, and reusable features

- Integration of MLOps + Feature Store is mandatory for production-grade ML

- Hands-on implementation prepares engineers for real-world ML operations and deployment

Conclusion

Feature Stores and MLOps are cornerstones of production ML in 2025. By mastering:

- Feature management

- CI/CD pipelines

- Monitoring and automated retraining

ML engineers can deliver reliable, scalable, and production-ready ML systems.

CuriosityTech.in provides practical workshops, real-world projects, and hands-on MLOps experience. Contact +91-9860555369 or contact@curiositytech.in to start building production-grade ML pipelines with Feature Stores.