Introduction

Self-driving cars represent one of the most complex and transformative applications of AI and deep learning. These vehicles rely on computer vision, sensor fusion, reinforcement learning, and decision-making algorithms to navigate safely.

At CuriosityTech in Nagpur, learners explore end-to-end autonomous vehicle pipelines and gain hands-on experience with deep learning architectures, simulation environments, and real-world deployment considerations, preparing them for AI engineering careers.

1. Overview of Self-Driving Car Systems

Self-driving cars rely on multiple AI and sensor modules:

| Module | Function |
| --- | --- |
| Perception | Detects objects, lanes, and traffic signs using cameras, LIDAR, and radar |
| Localization | Determines vehicle position using GPS, IMU, and map data |
| Prediction | Anticipates actions of other road users |
| Planning | Determines optimal path and maneuvers |
| Control | Executes steering, acceleration, and braking commands |

CuriosityTech Insight: Students learn that perception and planning rely heavily on deep learning, while control systems combine classical and AI techniques.
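To make the hand-off between the five modules concrete, here is a minimal sketch of one driving step. Every name in it (`Obstacle`, `perceive`, `drive_step`, the `sensor_frame` layout, the 10 m safe gap) is a hypothetical stand-in chosen for illustration; real modules would wrap trained networks and vehicle interfaces rather than these stubs.

```python
from dataclasses import dataclass

@dataclass
class Obstacle:
    distance_m: float          # distance ahead of our vehicle
    relative_speed_mps: float  # negative means the gap is closing

def perceive(sensor_frame):
    """Perception stub: a real system would run detectors on camera/LIDAR/radar data."""
    return [Obstacle(d, v) for d, v in sensor_frame["obstacles"]]

def localize(sensor_frame):
    """Localization stub: a real system would fuse GPS, IMU, and map data."""
    return sensor_frame["position_m"]

def predict(obstacles, horizon_s):
    """Prediction stub: constant-speed extrapolation of each obstacle."""
    return [Obstacle(o.distance_m + o.relative_speed_mps * horizon_s,
                     o.relative_speed_mps) for o in obstacles]

def plan(position_m, predicted, goal_m=500.0, safe_gap_m=10.0):
    """Planning stub: brake at the goal or when a predicted gap is too small."""
    if position_m >= goal_m:
        return "brake"
    if any(o.distance_m < safe_gap_m for o in predicted):
        return "brake"
    return "cruise"

def control(maneuver):
    """Control stub: map the chosen maneuver to actuator commands."""
    if maneuver == "brake":
        return {"throttle": 0.0, "brake": 1.0}
    return {"throttle": 0.3, "brake": 0.0}

def drive_step(sensor_frame):
    obstacles = perceive(sensor_frame)
    position = localize(sensor_frame)
    predicted = predict(obstacles, horizon_s=2.0)
    return control(plan(position, predicted))

# A car 25 m ahead closing at 8 m/s will be 9 m away in 2 s, so we brake.
frame = {"position_m": 120.0, "obstacles": [(25.0, -8.0)]}
command = drive_step(frame)
```

Keeping each stage behind its own function is what lets learners swap a stub for a trained model one module at a time.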

2. Deep Learning Architectures for Autonomous Vehicles

a) Convolutional Neural Networks (CNNs)

- Used for lane detection, traffic sign recognition, and object detection

- Popular models: YOLOv5, Faster R-CNN, SSD

Example: Detecting pedestrians in real-time using a CNN with input from the front-facing camera.
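The core operation inside every CNN layer is a small kernel sliding over the image. The sketch below shows that operation in plain Python on a toy 4x5 "road" image. It assumes a hand-picked Sobel-style kernel purely for illustration; in a trained detector such as YOLO or SSD the kernel values are learned from data, and the functions `conv2d` and `relu` here are our own helpers, not a library API.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]

# Hand-picked kernel that responds to dark-to-bright vertical edges
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy image: dark asphalt (0) on the left, bright lane marking (1) on the right
image = [[0, 0, 0, 1, 1] for _ in range(4)]

feature_map = relu(conv2d(image, SOBEL_X))
```

The feature map is zero over the uniform asphalt and positive wherever the window covers the lane-marking edge, which is the kind of low-level cue that deeper layers combine into lane or pedestrian detections.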

b) Recurrent Neural Networks (RNNs) / LSTMs

- Used for temporal prediction, e.g., predicting pedestrian movement or vehicle trajectories

- Help vehicles anticipate actions in dynamic environments
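To see how an LSTM carries information from one frame to the next, the following sketch implements a single LSTM step with scalar input, hidden state, and cell state. The weights in `PARAMS` are hypothetical and untrained, and the observation sequence is made up, so the numbers it produces are not real predictions; the point is only the gate structure.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step with scalar input, hidden state, and cell state."""
    def gate(name, activation):
        w, u, b = params[name]  # input weight, recurrent weight, bias
        return activation(w * x + u * h_prev + b)

    i = gate("input", sigmoid)         # how much new information to write
    f = gate("forget", sigmoid)        # how much old memory to keep
    o = gate("output", sigmoid)        # how much memory to expose
    g = gate("candidate", math.tanh)   # proposed new memory content
    c = f * c_prev + i * g             # updated cell state
    h = o * math.tanh(c)               # updated hidden state
    return h, c

# Hypothetical, untrained weights (w, u, b) for each gate
PARAMS = {
    "input": (0.5, 0.1, 0.0),
    "forget": (0.3, 0.2, 1.0),
    "output": (0.4, 0.1, 0.0),
    "candidate": (0.8, 0.3, 0.0),
}

# Made-up lateral offsets (in meters) of a pedestrian over four frames
observations = [0.0, 0.2, 0.5, 0.9]

h, c = 0.0, 0.0
hidden_states = []
for x in observations:
    h, c = lstm_step(x, h, c, PARAMS)
    hidden_states.append(h)
```

In a trajectory predictor, a final linear layer would map the last hidden state to the next position, and all weights would be fitted on recorded tracks.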

c) Reinforcement Learning

- Optimizes decision-making policies for path planning and adaptive control

- Models learn optimal navigation strategies through simulated trials
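The idea of learning a policy through simulated trials can be sketched with tabular Q-learning on a toy problem. This is a deliberately simplified stand-in: the five-cell "road", the reward values, and the hyperparameters are all assumptions made for illustration, and a real planner would use a simulator such as CARLA and a neural network instead of a table.

```python
import random

N_CELLS = 5                  # road cells 0..4; the goal is the last cell
ACTIONS = (-1, +1)           # move back / move forward
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1

def step(state, action):
    """Deterministic toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_CELLS - 1)
    if next_state == N_CELLS - 1:
        return next_state, 1.0, True     # reached the goal
    return next_state, -0.01, False      # small cost for every other move

def train(episodes=500, max_steps=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy action selection
            if rng.random() < EPSILON:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update toward the bootstrapped target
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
            if done:
                break
    return q

q_table = train()
# Greedy action for every non-terminal cell
policy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q_table[s][i])]
          for s in range(N_CELLS - 1)]
```

After training, the greedy policy moves forward from every cell, which is the optimal behavior for this toy road.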

d) Sensor Fusion Networks

- Combine camera, LIDAR, and radar data for robust perception

- Example: FusionNet-style architectures for 3D object detection; PointPillars, which operates on LIDAR point clouds alone, can serve as the point-cloud branch
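Before looking at learned fusion networks, it helps to see the classical idea they build on: trust each sensor in proportion to how reliable it is. The sketch below fuses two range measurements with inverse-variance weighting. Note that this is a classical technique swapped in for illustration, not a neural network, and the sensor variances are hypothetical values.

```python
def fuse(measurements):
    """Inverse-variance weighted average of (value, variance) pairs.

    Returns the fused value and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total

# Range to the same vehicle in meters, with assumed variances in m^2
lidar = (20.0, 0.04)   # precise in clear weather
radar = (21.0, 0.16)   # noisier, but robust in rain and fog

fused_range, fused_variance = fuse([lidar, radar])
```

With these numbers the fused range is about 20.2 m, closer to the LIDAR reading, and the fused variance (about 0.032 m^2) is lower than either sensor's own. A fusion network learns a similar weighting from data instead of relying on fixed variances.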

3. Training Data and Simulation

- High-quality datasets: KITTI, Waymo Open Dataset, nuScenes

- Simulation platforms: CARLA and AirSim, for safe experimentation

- Data Preprocessing:

  - Normalize images

  - Annotate objects with bounding boxes

  - Generate diverse weather and traffic scenarios
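Two of the preprocessing steps above are easy to sketch in a few lines: scaling 8-bit pixel values to the range [0, 1], and converting a pixel-space bounding box to the normalized center format that YOLO-style label files use. The helper names `normalize_image` and `to_center_format` and the sample values are our own illustrative choices.

```python
def normalize_image(pixels):
    """Scale 8-bit pixel values (0..255) to the range [0, 1]."""
    return [[p / 255.0 for p in row] for row in pixels]

def to_center_format(box, img_w, img_h):
    """Convert (x_min, y_min, x_max, y_max) in pixels to normalized (cx, cy, w, h)."""
    x_min, y_min, x_max, y_max = box
    return (
        (x_min + x_max) / 2 / img_w,   # box center x as a fraction of image width
        (y_min + y_max) / 2 / img_h,   # box center y as a fraction of image height
        (x_max - x_min) / img_w,       # box width as a fraction of image width
        (y_max - y_min) / img_h,       # box height as a fraction of image height
    )

# Tiny grayscale image and one pedestrian annotation on a 400x500 frame
image = [[0, 128, 255],
         [64, 192, 32]]
normalized = normalize_image(image)
box = to_center_format((100, 50, 300, 250), img_w=400, img_h=500)
```

Normalized coordinates stay valid when images are resized, which is why many detection pipelines store annotations this way.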

Human Story: A CuriosityTech learner started with a lane detection CNN, then gradually added object detection modules, realizing the importance of large, annotated datasets for real-world performance.

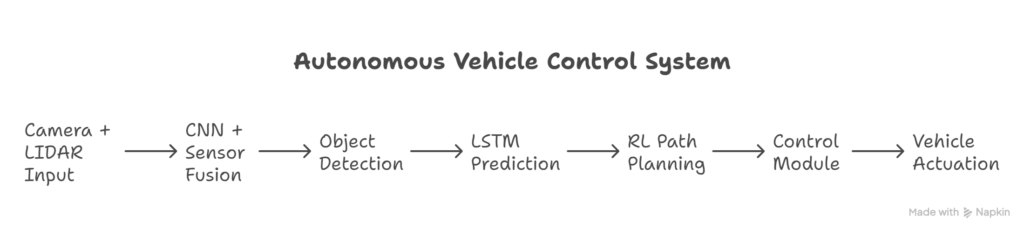

4. Case Study Example: End-to-End Pipeline

- Input: Camera images + LIDAR point clouds

- Perception Module: CNN + LIDAR fusion detects cars, pedestrians, and lanes

- Prediction Module: LSTM predicts trajectories of surrounding vehicles

- Planning Module: Deep Q-Learning algorithm selects the optimal path

- Control Module: PID controller executes steering and speed commands

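Textual Pipeline Diagram:

Camera images + LIDAR point clouds → Perception (CNN + LIDAR fusion) → Prediction (LSTM) → Planning (Deep Q-Learning) → Control (PID controller)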

Observation: Students at CuriosityTech watch each module improve incrementally, which reinforces the value of modular design and iterative testing.
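The control stage of this case study is the easiest to try in isolation. Below is a minimal sketch of a PID speed controller driving a toy vehicle model. The gains, the 0.1 s time step, and the plant (acceleration equals the controller output) are assumptions picked for illustration, not tuned values from a real vehicle.

```python
class PID:
    """Textbook PID controller with a simple backward-difference derivative."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0            # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Hypothetical gains and a toy plant: acceleration = controller output
pid = PID(kp=0.8, ki=0.1, kd=0.05)
target_speed = 15.0   # m/s
speed = 0.0           # m/s
dt = 0.1              # s

for _ in range(600):  # simulate 60 seconds
    accel = pid.update(target_speed - speed, dt)
    speed += accel * dt
```

After the simulated minute, `speed` settles close to the 15 m/s target. Because the controller only needs an error signal, the planning module above it can be replaced or retrained without touching this code.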

5. Challenges in Self-Driving Car AI

| Challenge | Description |
| --- | --- |
| Real-Time Processing | Must process large amounts of sensor data in milliseconds |
| Edge Cases | Rare events like animals crossing or sudden traffic jams |
| Sensor Noise | LIDAR/radar inaccuracies in rain, fog, or snow |
| Safety & Reliability | Critical to prevent accidents |
| Regulatory Compliance | Must meet regional safety and legal standards |

CuriosityTech Tip: Learners practice scenario-based testing in simulators to handle edge cases safely before real-world trials.
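A small worked example shows why processing latency and road conditions matter together. The sketch below uses the standard stopping-distance formula (distance covered during the reaction latency plus braking distance, v·t + v²/(2a)) and sweeps it over a few scenarios. The scenario names, latencies, and deceleration values are hypothetical, chosen only to illustrate scenario-based testing.

```python
def stopping_distance(speed_mps, latency_s, decel_mps2):
    """Distance covered during the processing latency plus the braking distance."""
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)

# Hypothetical scenario grid: (name, pipeline latency in s, achievable deceleration in m/s^2)
SCENARIOS = [
    ("dry road, fast pipeline", 0.1, 8.0),
    ("wet road, fast pipeline", 0.1, 4.0),
    ("dry road, slow pipeline", 0.5, 8.0),
]

def run_scenarios(speed_mps=20.0, obstacle_distance_m=40.0):
    """Return, per scenario, whether the vehicle stops before the obstacle."""
    results = {}
    for name, latency_s, decel in SCENARIOS:
        needed = stopping_distance(speed_mps, latency_s, decel)
        results[name] = needed <= obstacle_distance_m
    return results

results = run_scenarios()
```

At 20 m/s with an obstacle 40 m ahead, the dry-road cases stop in time (about 27 m and 35 m), while the wet-road case needs about 52 m and fails. A simulator lets learners find such failing combinations without risking a real vehicle.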

6. Career Insights

- AI engineers for self-driving cars must master:

  - Computer vision (CNNs, object detection)

  - Temporal modeling (RNNs, LSTMs)

  - Reinforcement learning for planning

  - Sensor data processing and fusion

  - Deployment on edge devices (NVIDIA Jetson, automotive-grade GPUs)

- Portfolio Suggestions:

  - Lane detection projects using CNNs

  - Object detection using YOLO or SSD

  - Simulation-based reinforcement learning for path planning

CuriosityTech Example: Students integrate multiple deep learning modules in CARLA to demonstrate a complete autonomous vehicle pipeline, the kind of project that automotive and AI companies value highly.

7. Human Story

One learner at CuriosityTech implemented a pedestrian detection module in a simulated environment. Initially, the model misclassified shadows as pedestrians. After augmenting training data with varied lighting conditions, the model improved significantly. This taught the student the importance of dataset diversity, model evaluation, and iterative improvement, essential skills in real-world autonomous vehicle projects.

Conclusion

Self-driving cars exemplify the integration of multiple AI techniques, from CNNs for perception to RL for path planning. At CuriosityTech, learners gain hands-on experience with modular pipelines in simulation, preparing them for careers in autonomous vehicles, robotics, and advanced AI engineering, while understanding the complexities and challenges of deploying safe, reliable AI systems.