Section 1 – The Multi-Cloud AI Landscape

Here’s a side-by-side comparison of the AI services the big three cloud providers offer:

| Category | AWS | Azure | GCP |
|---|---|---|---|
| Core ML Platform | SageMaker (training, deployment, pipelines) | Azure Machine Learning | Vertex AI (end-to-end ML) |
| Generative AI | Bedrock (foundation models via API) | Azure OpenAI Service | Vertex AI GenAI Studio + PaLM models |
| Data Services | Redshift ML, Glue ML | Synapse ML, Data Factory | BigQuery ML |
| Computer Vision | Rekognition | Computer Vision API | Vision AI |
| Speech & NLP | Transcribe, Comprehend | Speech Service, LUIS | Speech-to-Text, NLP APIs |
| AI Chips | Inferentia, Trainium | FPGA-based acceleration | TPUs (Tensor Processing Units) |
| AI Integration | Deep integration with IoT & analytics | Tight Microsoft ecosystem + Office AI | Native TensorFlow/Kubernetes integration |

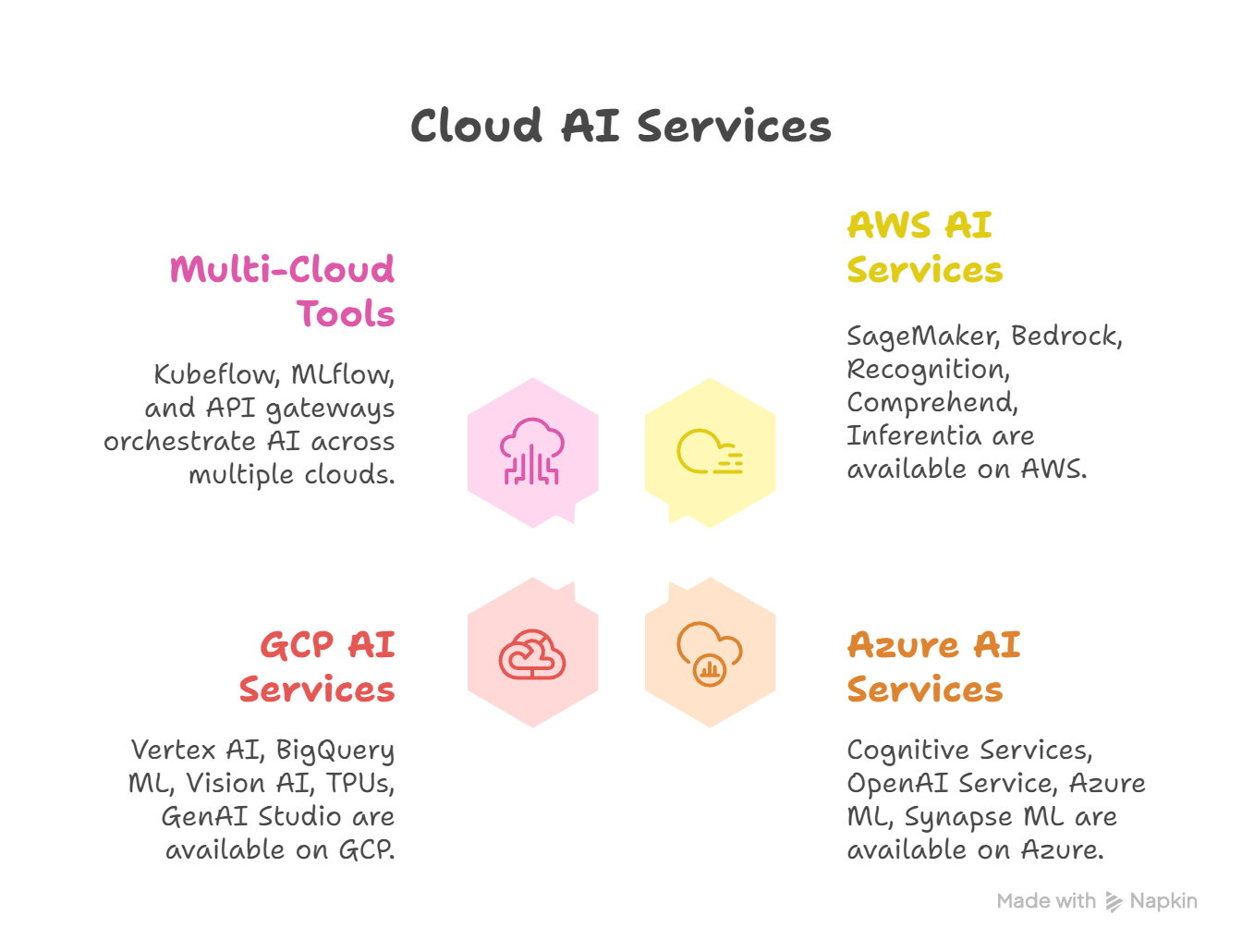

Section 2 – Infographic (AI Service Ecosystem Map)

Description of infographic (mind-map style):

- Center Node: Multi-Cloud AI Strategy

- AWS Branch: SageMaker, Bedrock, Rekognition, Comprehend, Inferentia

- Azure Branch: Cognitive Services, OpenAI Service, Azure ML, Synapse ML

- GCP Branch: Vertex AI, BigQuery ML, Vision AI, TPUs, GenAI Studio

- Connecting Nodes: Multi-cloud orchestration tools (Kubeflow, MLflow, API gateways)

This visualization shows that each provider has distinct strengths, and that much of the value comes from connecting them through provider-neutral orchestration frameworks rather than locking every workload into one cloud.
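The "connecting nodes" idea can be sketched in a few lines of Python. This is a minimal illustration, not a production API gateway: `CloudEndpoint` and `NeutralRouter` are hypothetical names, and each `predict_fn` is a stub standing in for a call to a SageMaker, Azure ML, or Vertex AI endpoint through that provider's SDK. The router tries providers in preference order and fails over when one is flagged unhealthy or raises an error.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CloudEndpoint:
    """Hypothetical wrapper around one provider's deployed model."""
    provider: str
    # Stub for the real SDK call (SageMaker, Azure ML, or Vertex AI endpoint).
    predict_fn: Callable[[List[float]], float]
    healthy: bool = True


class NeutralRouter:
    """Provider-neutral entry point: callers never import a cloud SDK directly."""

    def __init__(self, endpoints: List[CloudEndpoint]) -> None:
        self.endpoints = endpoints  # ordered by preference

    def predict(self, features: List[float]) -> Dict[str, object]:
        for ep in self.endpoints:
            if not ep.healthy:
                continue  # skip providers flagged as down
            try:
                return {"provider": ep.provider, "score": ep.predict_fn(features)}
            except Exception:
                continue  # treat a failed call like an outage and fail over
        raise RuntimeError("no healthy endpoint available")


router = NeutralRouter([
    CloudEndpoint("aws", lambda x: sum(x) / len(x)),
    CloudEndpoint("azure", lambda x: max(x)),
    CloudEndpoint("gcp", lambda x: min(x)),
])
print(router.predict([1.0, 3.0]))  # {'provider': 'aws', 'score': 2.0}
router.endpoints[0].healthy = False
print(router.predict([1.0, 3.0]))  # {'provider': 'azure', 'score': 3.0}
```

Swapping or reordering providers then means changing the endpoint list, not the calling code, which is the property that gateway- or Kubeflow-based setups aim for at larger scale.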

Section 3 – Enterprise Use Cases in Multi-Cloud AI

Finance Sector – Fraud Detection

- AWS SageMaker builds fraud models using transaction datasets.

- GCP BigQuery ML analyzes billions of rows of transactional logs.

- Azure Cognitive Services adds anomaly detection to credit scoring systems.

- Outcome: Near real-time fraud detection across geographies.
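One way to combine the three signals into a single decision is weighted score fusion. The sketch below assumes each service's output has already been normalised to a fraud score between 0 and 1; the signal names, the weights, and the 0.7 threshold are hypothetical placeholders that a real team would tune on labelled data.

```python
from typing import Dict

# Hypothetical signal names and weights; tune on labelled fraud data.
WEIGHTS: Dict[str, float] = {
    "sagemaker_model": 0.5,  # supervised fraud classifier
    "bigquery_ml": 0.3,      # pattern score over historical transaction logs
    "azure_anomaly": 0.2,    # anomaly score from the credit-scoring stream
}


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of the signals that are present. Weights are
    renormalised so a missing provider (e.g. one that timed out) does not
    pull the fused score toward zero."""
    present = {name: w for name, w in weights.items() if name in scores}
    if not present:
        raise ValueError("no known fraud signals supplied")
    total = sum(present.values())
    return sum(scores[name] * w for name, w in present.items()) / total


def is_fraud(scores: Dict[str, float], threshold: float = 0.7) -> bool:
    return fuse_scores(scores) >= threshold


print(is_fraud({"sagemaker_model": 0.9, "bigquery_ml": 0.8, "azure_anomaly": 0.1}))  # True
print(is_fraud({"sagemaker_model": 0.8}))  # True: the lone signal's weight is renormalised
```

Renormalising over the available signals is a design choice: it keeps the pipeline answering when one cloud is slow, at the cost of deciding on less evidence.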

Healthcare – Clinical Data Insights

- Azure OpenAI Service processes medical transcripts.

- AWS Comprehend Medical extracts drug names, dosages, conditions.

- GCP Vertex AI powers predictive models for patient readmission risk.

- Outcome: Combining these services can support better patient outcomes while keeping each workload within the compliance controls of the cloud that hosts it.
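The healthcare flow is a chain of stages, each hosted on a different cloud. A minimal sketch, assuming stub functions in place of the real Azure OpenAI, Comprehend Medical, and Vertex AI calls: each stage appends its provider to an audit list, which is one simple way to record where patient data travelled for compliance reviews. `Record`, `make_stage`, the drug vocabulary, and the risk formula are hypothetical toy placeholders, not clinical logic.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Record:
    text: str
    data: Dict[str, object] = field(default_factory=dict)
    audit: List[str] = field(default_factory=list)  # providers that processed this record


Stage = Callable[[Record], Record]


def make_stage(provider: str, fn: Callable[[Record], Dict[str, object]]) -> Stage:
    """Wrap a (stubbed) provider call so it merges its output and logs itself."""
    def stage(rec: Record) -> Record:
        rec.data.update(fn(rec))
        rec.audit.append(provider)
        return rec
    return stage


def run_pipeline(rec: Record, stages: List[Stage]) -> Record:
    for stage in stages:
        rec = stage(rec)
    return rec


# --- Hypothetical stubs standing in for real service calls ---
KNOWN_DRUGS = {"metformin", "insulin"}  # toy vocabulary


def summarise(rec: Record) -> Dict[str, object]:
    return {"summary": rec.text[:40]}  # stand-in for an Azure OpenAI summary


def extract_drugs(rec: Record) -> Dict[str, object]:
    words = (w.strip(".,;").lower() for w in rec.text.split())
    return {"drugs": [w for w in words if w in KNOWN_DRUGS]}  # stand-in for Comprehend Medical


def readmission_risk(rec: Record) -> Dict[str, object]:
    drugs = rec.data.get("drugs", [])
    return {"risk": min(1.0, 0.2 + 0.1 * len(drugs))}  # stand-in for a Vertex AI model


pipeline = [
    make_stage("azure-openai", summarise),
    make_stage("aws-comprehend-medical", extract_drugs),
    make_stage("gcp-vertex-ai", readmission_risk),
]
result = run_pipeline(Record("Patient on metformin and insulin, discharged."), pipeline)
print(result.audit)  # ['azure-openai', 'aws-comprehend-medical', 'gcp-vertex-ai']
```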

Retail – Personalized Shopping

- AWS Personalize drives recommendations.

- Azure AI Search + Azure OpenAI Service (GPT models) power intelligent product discovery.

- GCP Vision AI recognizes images from customer uploads.

- Outcome: Multi-cloud AI delivers seamless personalization at scale.

Section 4 – Abstraction: The AI Workflow Across Clouds

- Data Collection: A retailer streams customer logs into Amazon S3, Azure Blob Storage, and Google Cloud Storage.

- Data Processing: Data is cleaned with Databricks, which runs on all three clouds.

- Model Training:

  - SageMaker trains classification models.

  - Vertex AI trains recommendation models.

  - Azure ML fine-tunes LLMs.

- Deployment: Models are exposed via API gateways or Kubernetes clusters.

- Monitoring: Cloud-neutral observability with Prometheus, Grafana, and MLflow.

This abstraction shows that an AI workflow need not be tied to one cloud: collection, training, and serving can each run on the provider best suited to that step.
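The workflow is a dependency graph, so a scheduler can work out which steps may run in parallel across clouds. A minimal sketch using Python's standard-library `graphlib` (Python 3.9+); the stage names are illustrative labels for the steps listed, not service identifiers, and a real deployment would hand this job to an orchestrator such as Kubeflow Pipelines or Airflow.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Stage -> set of stages it depends on (illustrative labels only).
WORKFLOW: Dict[str, Set[str]] = {
    "collect:s3": set(),
    "collect:azure-blob": set(),
    "collect:gcs": set(),
    "process:databricks": {"collect:s3", "collect:azure-blob", "collect:gcs"},
    "train:sagemaker-classifier": {"process:databricks"},
    "train:vertex-recommender": {"process:databricks"},
    "train:azureml-llm-finetune": {"process:databricks"},
    "deploy:api-gateway-k8s": {
        "train:sagemaker-classifier",
        "train:vertex-recommender",
        "train:azureml-llm-finetune",
    },
    "monitor:prometheus-grafana-mlflow": {"deploy:api-gateway-k8s"},
}


def execution_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group stages into waves; stages in the same wave have no mutual
    dependencies and could run in parallel on their respective clouds."""
    ts = TopologicalSorter(graph)
    ts.prepare()
    waves: List[List[str]] = []
    while ts.is_active():
        ready = sorted(ts.get_ready())  # sorted only for stable output
        waves.append(ready)
        ts.done(*ready)
    return waves


for i, wave in enumerate(execution_waves(WORKFLOW), start=1):
    print(i, wave)
```

The three training jobs land in the same wave, which is what lets SageMaker, Vertex AI, and Azure ML train concurrently once the shared processing step finishes.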

Section 5 – Strategic Considerations

- Cost Optimization: Train heavy ML workloads on GCP TPUs → deploy light inference on AWS Inferentia.

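- Compliance: Deploy AI on Azure when the enterprise already relies on Microsoft's compliance tooling and certifications for obligations such as GDPR and HIPAA (AWS and GCP also support these regulations, so the deciding factor is usually existing investment).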

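- Performance: Select providers based on region latency, for example GCP for APAC, AWS for US, and Azure for EU, but confirm with latency measurements from your own users, since all three operate regions across every major geography.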

- Vendor Neutrality: Use frameworks like Kubeflow, MLflow, and Hugging Face to remain cross-cloud.
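The cost, compliance, and performance criteria can be combined into a simple placement rule: discard options that fail a hard requirement, then rank the rest. The sketch below is a hypothetical illustration; `Option`, `choose`, the tag names, and every number are assumptions, and real inputs should come from your own latency measurements, pricing, and compliance review.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Option:
    provider: str
    region: str
    p95_latency_ms: float  # measured from your own users
    cost_per_1k: float     # your inference cost per 1,000 requests
    tags: FrozenSet[str]   # compliance facts your team has verified, e.g. "baa-signed"


def choose(options: List[Option], required: FrozenSet[str],
           latency_budget_ms: float, latency_weight: float = 0.5) -> Option:
    """Filter by compliance tags and latency budget, then minimise a weighted
    sum of normalised latency and normalised cost."""
    eligible = [o for o in options
                if required <= o.tags and o.p95_latency_ms <= latency_budget_ms]
    if not eligible:
        raise ValueError("no option meets the compliance and latency constraints")
    max_lat = max(o.p95_latency_ms for o in eligible) or 1.0
    max_cost = max(o.cost_per_1k for o in eligible) or 1.0

    def score(o: Option) -> float:
        return (latency_weight * o.p95_latency_ms / max_lat
                + (1 - latency_weight) * o.cost_per_1k / max_cost)

    return min(eligible, key=score)


# Placeholder numbers for illustration only, not real benchmarks or prices.
options = [
    Option("aws", "us", 40.0, 1.0, frozenset({"baa-signed"})),
    Option("azure", "eu", 60.0, 0.8, frozenset({"baa-signed", "eu-residency"})),
    Option("gcp", "eu", 120.0, 0.5, frozenset({"eu-residency"})),
]
print(choose(options, frozenset({"eu-residency"}), 200.0).provider)  # azure
print(choose(options, frozenset({"eu-residency"}), 200.0, latency_weight=0.1).provider)  # gcp
```

Raising `latency_weight` favors the faster option and lowering it favors the cheaper one; compliance tags act as hard filters, never as a weighted trade-off.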

Section 6 – Lessons from CuriosityTech Labs

In our Nagpur workshops at CuriosityTech, engineers practice:

- Training models in SageMaker

- Deploying them in Azure ML

- Serving predictions in Vertex AI

This real-world practice mirrors enterprise multi-cloud workflows, building confidence and cross-cloud agility.

Section 7 – How to Become a Multi-Cloud AI Expert

- Master fundamental ML frameworks: TensorFlow, PyTorch, scikit-learn.

- Learn provider-specific AI services (SageMaker, Azure ML, Vertex AI).

- Practice orchestration tools (Kubeflow, MLflow, Airflow).

- Build end-to-end projects (e.g., AI chatbot using AWS NLP + Azure OpenAI + GCP Vision).

- Follow case studies from CuriosityTech training labs where cross-cloud AI projects are replicated.

Conclusion

- AI and ML are key differentiators for modern enterprises, but no single cloud provider dominates every use case.

- Enterprises that combine the strengths of AWS, Azure, and GCP can achieve resilience, compliance, and innovation beyond the scope of any single provider.

- At CuriosityTech.in, we champion this philosophy — training engineers to not just learn AI tools, but to architect multi-cloud AI ecosystems that are future-proof.