Introduction

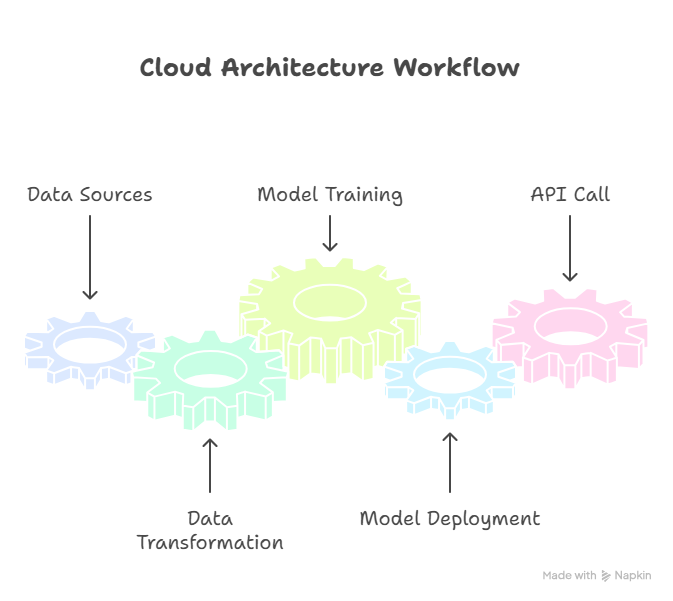

In 2025, cloud platforms have become indispensable for ML engineers. They provide scalable infrastructure, prebuilt ML services, automated workflows, and integration with data pipelines.

At curiositytech.in (Nagpur, Wardha Road, Gajanan Nagar), learners gain hands-on exposure to cloud ML platforms like AWS SageMaker, Azure ML, and Google Vertex AI, which enable end-to-end machine learning development—from model training to deployment and monitoring.

1. Why Use Cloud ML Platforms?

Benefits:

- Scalability: Handle large datasets and high computational workloads

- Managed Services: Prebuilt environments, model training, and deployment

- Automation: AutoML, hyperparameter tuning, CI/CD integration

- Monitoring & Logging: Built-in tools for production monitoring

- Collaboration: Multi-user access with role-based permissions
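The hyperparameter tuning that these platforms automate comes down to a search loop: propose a configuration, train, score it, and keep the best. Below is a minimal plain-Python sketch of the simplest strategy, random search. `random_search`, the toy objective and the search space are hypothetical stand-ins for a real training job. The managed services also offer smarter strategies such as Bayesian optimization.

```python
import random
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def random_search(
    objective: Callable[[Params], float],
    space: Dict[str, Tuple[float, float]],
    n_trials: int = 20,
    seed: int = 0,
) -> Tuple[Params, float, List[Tuple[Params, float]]]:
    """Random search: sample configurations uniformly from `space`, keep the best.

    `objective` trains/evaluates one configuration and returns a score to maximize
    (for example, validation accuracy).
    """
    if n_trials < 1:
        raise ValueError("n_trials must be at least 1")
    rng = random.Random(seed)  # seeded so runs are reproducible
    history: List[Tuple[Params, float]] = []
    best_params: Params = {}
    best_score = float("-inf")
    for _ in range(n_trials):
        # Draw each hyperparameter independently from its [low, high] range.
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        score = objective(params)
        history.append((params, score))
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, history


if __name__ == "__main__":
    # Hypothetical stand-in for "train a model, return validation accuracy":
    # the score peaks at learning_rate=0.1, dropout=0.3.
    def toy_objective(p: Params) -> float:
        return -((p["learning_rate"] - 0.1) ** 2) - (p["dropout"] - 0.3) ** 2

    search_space = {"learning_rate": (0.001, 0.5), "dropout": (0.0, 0.6)}
    best, best_score, _ = random_search(toy_objective, search_space, n_trials=50)
    print("best params:", best, "score:", round(best_score, 4))
```

On a cloud platform, each call to `objective` would be a separate training job. That is why managed tuning runs trials in parallel and caps the total budget.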

CuriosityTech Insight: Students at CuriosityTech learn that cloud ML platforms allow engineers to focus on model development rather than infrastructure management.

2. AWS SageMaker

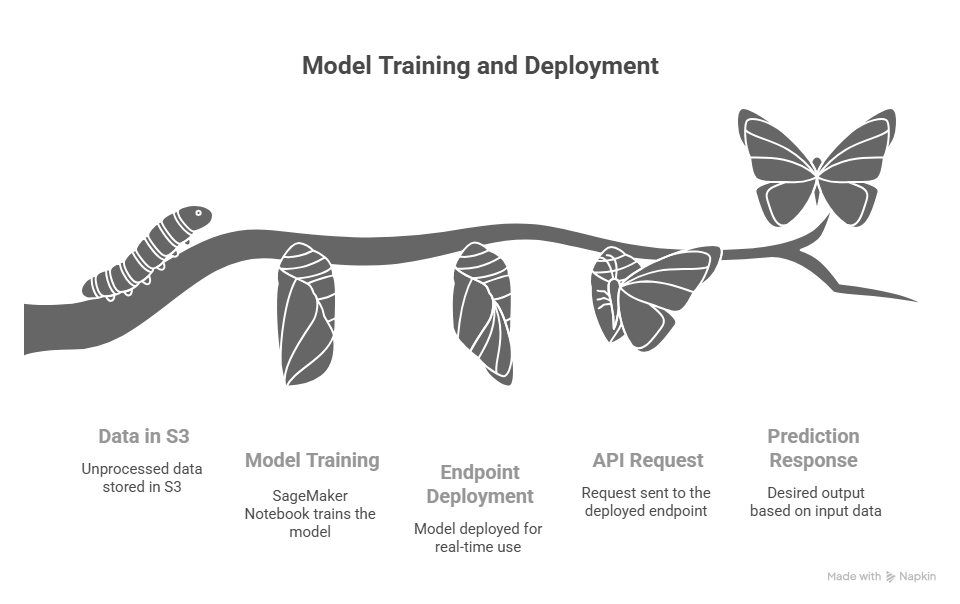

Overview: AWS SageMaker is a fully managed service for building, training, and deploying ML models at scale.

Key Features:

- Prebuilt Jupyter notebooks for experimentation

- Managed training clusters and distributed training

- Hyperparameter tuning jobs

- Endpoint deployment for real-time inference

- Monitoring with Amazon CloudWatch

Pros:

- Fully managed; reduces DevOps overhead

- Scales training across multiple GPU instances

- Built-in integration with AWS ecosystem

Cons:

- Cost can increase with large workloads

- Learning curve for AWS services

Scenario Storytelling: Riya at CuriosityTech.in stores her training data in Amazon S3, trains a text classification model on SageMaker, deploys it to a real-time endpoint, and integrates that endpoint with a web app to serve live predictions.
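Once a SageMaker endpoint is deployed, a web app's backend typically calls it through the boto3 `sagemaker-runtime` client's `invoke_endpoint` method. The sketch below makes two assumptions. First, the endpoint name is hypothetical. Second, the model container accepts `{"inputs": "<text>"}` as JSON and returns JSON. The real request and response format depends on the inference container you deploy. The client can be injected, so the function can be exercised without AWS credentials.

```python
import json
from typing import Any, Optional


def predict_text(text: str, endpoint_name: str, client: Optional[Any] = None) -> Any:
    """Send one text to a SageMaker real-time endpoint and return the parsed JSON reply.

    Assumption: the deployed model accepts {"inputs": "<text>"} and returns JSON;
    adjust the payload to match your own inference container.
    """
    if client is None:
        # Imported lazily so the function can be tested without boto3 installed.
        import boto3

        client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps({"inputs": text}),
    )
    # The response body is a streaming object; read it fully and decode the JSON.
    return json.loads(response["Body"].read())
```

For example, `predict_text("Loved the course!", "riya-text-classifier")` (hypothetical endpoint name) returns whatever JSON the model's inference code produces. Keeping this call on the server side means the browser never handles AWS credentials.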

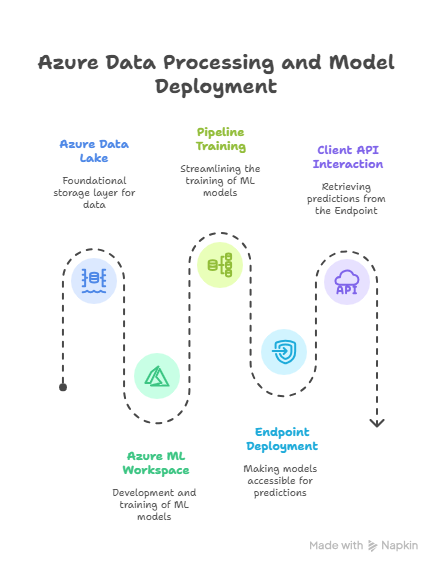

3. Azure Machine Learning

Overview: Azure ML is a cloud platform for building, training, deploying, and monitoring ML models.

Key Features:

- Designer for drag-and-drop ML pipelines

- Automated ML (AutoML) for rapid experimentation

- Managed endpoints for real-time and batch predictions

- Experiment tracking with Azure ML Studio

- Integration with Azure Data Lake and Databricks

Pros:

- User-friendly GUI for pipeline creation

- Strong integration with enterprise tools

- AutoML simplifies model selection and tuning

Cons:

- Limited flexibility for highly customized workflows

- Pricing model can be complex

Scenario Storytelling: Arjun creates a fraud detection ML pipeline on Azure ML, using AutoML to test multiple algorithms. The model is deployed as a REST API and monitored via Azure dashboards.
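An Azure ML managed online endpoint exposes a scoring URI that accepts HTTPS POST requests. With key-based authentication, the key is sent as a `Bearer` token. The standard-library sketch below builds and sends such a request. The `{"data": [...]}` payload shape and the transaction fields are assumptions: the actual input schema is defined by your deployment's scoring script or model format.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_scoring_request(
    scoring_uri: str, api_key: str, rows: List[Dict[str, Any]]
) -> urllib.request.Request:
    """Build (but do not send) a POST request to an Azure ML online endpoint.

    Assumption: the scoring script accepts {"data": [<row>, ...]}.
    """
    body = json.dumps({"data": rows}).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Key-based auth for managed online endpoints uses a Bearer header.
            "Authorization": f"Bearer {api_key}",
        },
    )


def score(
    scoring_uri: str, api_key: str, rows: List[Dict[str, Any]], timeout: float = 10.0
) -> Any:
    """Send the scoring request and decode the JSON response."""
    request = build_scoring_request(scoring_uri, api_key, rows)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())
```

Copy the real scoring URI and key from the endpoint's details in Azure ML studio. Fields such as `{"amount": 250.0, "merchant_category": "electronics"}` are only placeholders for whatever features the fraud model was trained on. Splitting request construction from sending keeps the request logic testable offline.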

4. Google Vertex AI

Overview: Vertex AI is Google Cloud’s ML platform for end-to-end ML workflows.

Key Features:

- Unified interface for datasets, models, and experiments

- AutoML for image, text, and tabular data

- Feature Store for managing features across projects

- Managed endpoints for batch and online prediction

- Monitoring and logging integrated with Google Cloud Monitoring

Pros:

- Strong integration with Google Cloud ecosystem

- Feature Store enables reuse of engineered features

- High-performance training with TPUs

Cons:

- Learning curve for Google Cloud tools

- Pricing may be high for large-scale training

Scenario Storytelling: Riya deploys an image recognition model on Vertex AI, utilizing the Feature Store to reuse features across multiple projects, saving time and reducing errors.
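The reuse Riya relies on rests on a simple contract. Features are computed once and written with timestamps, and every consumer reads them the same way. Reads for training are made "as of" a timestamp, so a training example never sees feature values from its own future. The toy in-memory class below illustrates that point-in-time lookup. `InMemoryFeatureStore`, its methods, and the feature names are hypothetical and are not the Vertex AI Feature Store API.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Optional


class InMemoryFeatureStore:
    """Hypothetical toy feature store (not the Vertex AI API).

    Stores timestamped feature values per entity and serves point-in-time reads.
    """

    def __init__(self) -> None:
        # entity_id -> ascending write timestamps, and the values written at each
        self._times: Dict[str, List[int]] = defaultdict(list)
        self._values: Dict[str, List[Dict[str, float]]] = defaultdict(list)

    def write(self, entity_id: str, timestamp: int, features: Dict[str, float]) -> None:
        """Record feature values for an entity as of `timestamp`."""
        times = self._times[entity_id]
        # Insert at the sorted position so out-of-order writes stay ordered.
        i = bisect.bisect_right(times, timestamp)
        times.insert(i, timestamp)
        self._values[entity_id].insert(i, dict(features))

    def read_as_of(self, entity_id: str, timestamp: int) -> Optional[Dict[str, float]]:
        """Return the latest values written at or before `timestamp`, or None."""
        times = self._times.get(entity_id, [])
        i = bisect.bisect_right(times, timestamp)
        return dict(self._values[entity_id][i - 1]) if i > 0 else None


if __name__ == "__main__":
    store = InMemoryFeatureStore()
    # Features are engineered once (hypothetical names and values)...
    store.write("customer_17", 100, {"avg_basket_value": 42.0})
    store.write("customer_17", 200, {"avg_basket_value": 55.5})
    # ...and any project reads them the same way. A training example labelled at
    # t=150 must only see values known at t=150, not the later update.
    print(store.read_as_of("customer_17", 150))  # {'avg_basket_value': 42.0}
    print(store.read_as_of("customer_17", 250))  # {'avg_basket_value': 55.5}
```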

5. Comparison Table

| Feature | AWS SageMaker | Azure ML | Google Vertex AI |
| --- | --- | --- | --- |
| Managed Notebooks | Yes | Yes | Yes |
| AutoML | Yes (Autopilot) | Yes | Yes |
| Real-time API | Yes | Yes | Yes |
| Batch Prediction | Yes | Yes | Yes |
| Hyperparameter Tuning | Yes | Yes | Yes |
| Feature Store | Yes | Limited | Yes |
| Integration | AWS ecosystem | Azure ecosystem | Google Cloud ecosystem |
| Cost | Moderate-High | Moderate | Moderate-High |

CuriosityTech Insight: Learners experiment with all three platforms, gaining the ability to choose the right cloud ML platform for different business scenarios.

6. Best Practices for Cloud ML Deployment

- Use Managed Services: Leverage cloud notebooks, pipelines, and endpoints

- Monitor Models: Use built-in tools to track drift, latency, and throughput

- Optimize Costs: Choose instance types and schedule training efficiently

- Version Control: Track datasets, models, and pipeline versions

- Security & Compliance: Ensure encrypted data storage and controlled access
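Tracking drift means comparing the distribution of a feature in production traffic against its training baseline. The platforms' managed monitoring features, such as SageMaker Model Monitor, do this for you. The plain-Python sketch below computes one widely used drift statistic, the Population Stability Index, to show the underlying idea: PSI = Σᵢ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the baseline and current fractions in bin i. The equal-width binning, the bin count, and the 0.1/0.25 thresholds are common rules of thumb, not values any of these platforms mandate.

```python
import bisect
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of `values` in each bin; out-of-range values go to the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        counts[min(max(idx, 0), n_bins - 1)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a training baseline and current production values of one feature.

    Bins are equal-width over the baseline's range; `eps` avoids log(0) for empty bins.
    """
    if not baseline or not current:
        raise ValueError("baseline and current must be non-empty")
    low, high = min(baseline), max(baseline)
    if high == low:
        raise ValueError("baseline has zero range; cannot build bins")
    width = (high - low) / n_bins
    edges = [low + i * width for i in range(n_bins + 1)]
    expected = _bin_fractions(baseline, edges)
    actual = _bin_fractions(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]          # baseline feature values
    production = [0.5 + i / 200 for i in range(100)]  # same feature, shifted upward
    psi = population_stability_index(training, production)
    # Rule of thumb: < 0.1 stable, 0.1-0.25 moderate shift, > 0.25 significant shift.
    print(f"PSI = {psi:.3f}", "-> investigate / retrain" if psi > 0.25 else "-> OK")
```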

Scenario: Arjun deploys a recommendation engine on AWS SageMaker, monitors latency using CloudWatch, and sets auto-scaling policies to handle peak traffic.
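On SageMaker, endpoint auto-scaling is configured through the Application Auto Scaling service in two steps. First, the endpoint variant is registered as a scalable target. Second, a target-tracking policy keeps a metric such as `SageMakerVariantInvocationsPerInstance` (average invocations per instance per minute) near a target value. Below is a boto3 sketch. The endpoint name, the `AllTraffic` variant name (the SageMaker Python SDK's default), the capacity limits, the target value and the cooldowns are all illustrative assumptions, to be tuned against real load tests.

```python
from typing import Any, Optional


def configure_endpoint_autoscaling(
    endpoint_name: str,
    variant_name: str = "AllTraffic",  # assumption: SDK default variant name
    min_instances: int = 1,
    max_instances: int = 4,
    target_invocations_per_instance: float = 100.0,  # illustrative target
    client: Optional[Any] = None,
) -> str:
    """Register an endpoint variant with Application Auto Scaling and attach a
    target-tracking policy on invocations per instance. Returns the policy name."""
    if not 1 <= min_instances <= max_instances:
        raise ValueError("require 1 <= min_instances <= max_instances")
    if client is None:
        # Imported lazily so the function can be tested without boto3 installed.
        import boto3

        client = boto3.client("application-autoscaling")
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_instances,
        MaxCapacity=max_instances,
    )
    policy_name = f"{endpoint_name}-invocations-target"
    client.put_scaling_policy(
        PolicyName=policy_name,
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": target_invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleOutCooldown": 60,   # seconds; scale out quickly at peaks
            "ScaleInCooldown": 300,   # seconds; scale in cautiously afterwards
        },
    )
    return policy_name
```

The asymmetric cooldowns are a deliberate choice: adding capacity late hurts latency at peak traffic, while removing it late only costs a few extra instance-minutes.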

7. Real-World Applications

| Industry / Domain | Cloud ML Platform Use Case |
| --- | --- |
| Healthcare | Image segmentation and diagnosis (Vertex AI) |
| Finance | Fraud detection (Azure ML with AutoML) |
| Retail | Product recommendation (AWS SageMaker endpoints) |
| NLP | Sentiment analysis on social media (AWS or Vertex AI) |
| IoT | Predictive maintenance (Azure ML pipelines) |

curiositytech.in emphasizes practical cloud ML training, where students deploy models end-to-end on cloud platforms, integrating data pipelines, training, deployment, and monitoring.

8. Key Takeaways

- Cloud ML platforms simplify scalable and production-ready ML deployment

- AWS SageMaker, Azure ML, and Vertex AI each have unique strengths and limitations

- Automation, monitoring, and cost optimization are essential considerations

- Hands-on cloud projects are critical for developing industry-ready ML engineers

Conclusion

Mastering cloud ML platforms in 2025 is essential for ML engineers. By leveraging AWS SageMaker, Azure ML, or Google Vertex AI, engineers can:

- Build end-to-end ML workflows

- Deploy and scale models efficiently

- Monitor model performance and maintain production systems

curiositytech.in provides hands-on training and real-world projects on all major cloud ML platforms. Contact +91-9860555369 or contact@curiositytech.in to start mastering cloud ML.