Introduction

In 2025, cloud platforms are essential for modern data science, providing scalable compute power, storage, and advanced AI/ML services. Instead of relying solely on local machines, data scientists leverage AWS, Azure, and GCP to process massive datasets, deploy models, and integrate AI pipelines seamlessly.

At CuriosityTech, Nagpur (1st Floor, Plot No 81, Wardha Rd, Gajanan Nagar), learners gain hands-on experience with all three major cloud platforms so they can build, deploy, and scale data science projects in real-world scenarios.

This blog explores features, comparisons, workflows, and practical tutorials for AWS, Azure, and GCP, giving learners a complete roadmap for cloud-based data science.

Section 1 – Why Cloud Platforms for Data Science?

Benefits:

- Scalability: Handle datasets of any size, dynamically increasing resources

- Cost Efficiency: Pay-as-you-go model reduces upfront hardware costs

- Collaboration: Teams can share projects and notebooks seamlessly

- Advanced AI/ML Services: Pre-built AI models, GPU/TPU acceleration, and managed ML pipelines

- Security & Compliance: Built-in encryption, authentication, and regulatory compliance

CuriosityTech Story: Learners processed 10 TB of e-commerce data stored in AWS S3 and trained ML models on GPU instances, cutting training time from 24 hours to 2 hours and demonstrating real-world cloud efficiency.

Section 2 – Overview of Cloud Platforms

| Feature | AWS | Azure | GCP |
| --- | --- | --- | --- |
| Compute Services | EC2, Lambda, SageMaker | Virtual Machines, ML Studio | Compute Engine, AI Platform |
| Storage | S3, EBS, Glacier | Blob Storage, Data Lake | Cloud Storage, BigQuery |
| ML Services | SageMaker, Comprehend, Rekognition | Azure ML, Cognitive Services | AI Platform, AutoML |
| Big Data | EMR, Redshift | HDInsight, Synapse Analytics | Dataproc, BigQuery |
| Cost Model | Pay-as-you-go, Reserved Instances | Pay-as-you-go, Reserved VMs | Pay-as-you-go, Sustained Use Discounts |
| Global Availability | 26+ regions | 60+ regions | 35+ regions |

CuriosityTech Insight: Learners compare pricing, GPU availability, and ML services to select the most suitable cloud platform for their project needs.

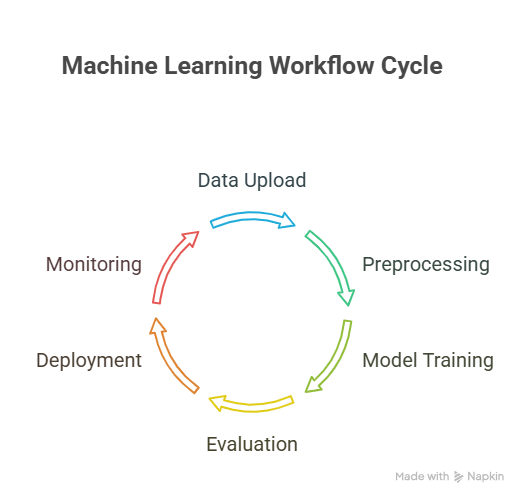

Section 3 – Practical Workflow on Cloud Platforms

1 – Data Storage

- AWS: Upload CSVs/JSONs to S3 bucket

- Azure: Use Blob Storage or Data Lake

- GCP: Use Cloud Storage or BigQuery for structured datasets
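Each platform addresses stored objects with its own URI scheme, and notebooks and training jobs later use these URIs to locate the data. The helpers below are a minimal sketch of the three formats. The bucket, account, and container names passed to them are hypothetical, and the function names are illustrative rather than part of any SDK.

```python
def s3_uri(bucket: str, key: str) -> str:
    """AWS S3 object URI (the s3:// scheme)."""
    return f"s3://{bucket}/{key.lstrip('/')}"


def gcs_uri(bucket: str, blob: str) -> str:
    """Google Cloud Storage object URI (the gs:// scheme)."""
    return f"gs://{bucket}/{blob.lstrip('/')}"


def azure_blob_url(account: str, container: str, blob: str) -> str:
    """HTTPS URL of a blob inside an Azure Storage account."""
    return f"https://{account}.blob.core.windows.net/{container}/{blob.lstrip('/')}"


# Hypothetical names, for illustration only.
print(s3_uri("curiositytech-churn-data", "customer_data.csv"))
print(gcs_uri("example-bucket", "raw/customer_data.csv"))
print(azure_blob_url("exampleaccount", "datasets", "customer_data.csv"))
```

Keeping URI construction in one place makes it easier to switch a workflow from one provider to another.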

2 – Data Preprocessing

- Launch Jupyter Notebook/VM on cloud

- Install Python libraries: pandas, numpy, scikit-learn, tensorflow

- Load data from cloud storage and clean/analyze it
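The load-and-clean step can be illustrated without any cloud dependency. The sketch below uses only the Python standard library and an inline CSV standing in for a file downloaded from cloud storage. The column names (`customer_id`, `monthly_charges`, `churned`) and the mean-imputation strategy are assumptions made for the example. In a cloud notebook the same steps would usually be written with pandas.

```python
import csv
import io
from statistics import mean

# Hypothetical sample standing in for a file downloaded from cloud storage.
RAW_CSV = """customer_id,monthly_charges,churned
1,29.5,0
2,,1
3,80.0,1
3,80.0,1
4,55.5,0
"""


def load_rows(text: str) -> list[dict]:
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows: list[dict]) -> list[dict]:
    """Drop duplicate customers, convert types, and fill missing charges with the mean."""
    seen, unique = set(), []
    for row in rows:
        if row["customer_id"] not in seen:
            seen.add(row["customer_id"])
            unique.append(row)

    # Mean of the values that are present; other strategies may suit real data better.
    known = [float(r["monthly_charges"]) for r in unique if r["monthly_charges"]]
    fill = mean(known)

    return [
        {
            "customer_id": int(r["customer_id"]),
            "monthly_charges": float(r["monthly_charges"]) if r["monthly_charges"] else fill,
            "churned": int(r["churned"]),
        }
        for r in unique
    ]


cleaned = clean(load_rows(RAW_CSV))
for row in cleaned:
    print(row)
```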

3 – Model Training

- AWS SageMaker: Managed training with GPU acceleration

- Azure ML Studio: Drag-and-drop or Python SDK

- GCP Vertex AI (the successor to AI Platform): Use TensorFlow, PyTorch, or AutoML

4 – Model Evaluation

- Evaluate metrics like accuracy, RMSE, F1 Score using cloud notebooks

- Use cloud logging services to track experiments and model versions
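To make the metrics named above concrete, here is a minimal pure-Python sketch of accuracy, F1 score, and RMSE. The labels and predictions are made-up values. In practice scikit-learn's `accuracy_score`, `f1_score`, and `mean_squared_error` would normally be used instead of hand-written functions.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def rmse(y_true, y_pred):
    """Root mean squared error for regression targets."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up churn labels (1 = churned) and predictions.
labels = [1, 0, 1, 1, 0, 1]
preds = [1, 0, 0, 1, 1, 1]
print(accuracy(labels, preds))  # 4 of 6 predictions are correct
print(f1_score(labels, preds))  # precision and recall are both 3/4
print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```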

5 – Deployment

- AWS: SageMaker Endpoint or Lambda function

- Azure: Deploy ML model as web service/API

- GCP: Vertex AI endpoints (the successor to AI Platform Prediction) or Cloud Functions
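As an illustration of the Lambda option, the sketch below shows the shape of a Python handler behind an HTTP endpoint. It assumes an API Gateway proxy-style event, where the request body arrives as a JSON string in `event["body"]`. The `predict_churn` rule and the feature names `monthly_charges` and `tenure_months` are hypothetical placeholders for a trained model, not part of the original tutorial.

```python
import json


def predict_churn(features: dict) -> float:
    """Placeholder scoring rule standing in for a trained model (hypothetical)."""
    score = 0.2
    if features.get("monthly_charges", 0) > 70:
        score += 0.4
    if features.get("tenure_months", 0) < 6:
        score += 0.3
    return round(score, 2)


def lambda_handler(event, context):
    """Parse the JSON request body and return a JSON prediction."""
    try:
        features = json.loads(event.get("body") or "{}")
        if not isinstance(features, dict):
            raise ValueError("expected a JSON object")
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON body"})}

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"churn_probability": predict_churn(features)}),
    }


# Local smoke test with a hand-built event; no AWS account is needed.
sample_event = {"body": json.dumps({"monthly_charges": 90, "tenure_months": 3})}
print(lambda_handler(sample_event, None))
```

A real deployment would load a serialized model once, outside the handler, so that it is reused across invocations.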

Section 4 – Hands-On Tutorial Example: Predicting Customer Churn on AWS

```python
import boto3
from sagemaker import Session
from sagemaker.sklearn.estimator import SKLearn

# Create an S3 client and upload the training data
s3 = boto3.client("s3")
bucket_name = "curiositytech-churn-data"
s3.upload_file("customer_data.csv", bucket_name, "customer_data.csv")

# SageMaker session used by the estimator
sess = Session()

# SKLearn estimator for churn prediction
sklearn_estimator = SKLearn(
    entry_point="train.py",        # training script that runs inside the container
    role="SageMakerRole",          # IAM role (name or ARN) with SageMaker permissions
    instance_count=1,
    instance_type="ml.m5.large",
    framework_version="0.23-1",
    sagemaker_session=sess,
)

# Start a managed training job; the "train" channel points at the uploaded CSV
sklearn_estimator.fit({"train": f"s3://{bucket_name}/customer_data.csv"})
```
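The estimator above points at a `train.py` entry point that the post does not show. Inside a SageMaker training container, each channel passed to `fit` is exposed through an environment variable named `SM_CHANNEL_<NAME>`, and the model output directory is exposed through `SM_MODEL_DIR`. The following is a minimal sketch of how such a script might resolve its paths. The output file name `model.joblib` is an assumption, and the model fitting itself is left as a comment because the original does not specify it.

```python
import argparse
import os


def parse_args(argv=None):
    """Read data and model locations, defaulting to SageMaker's environment variables."""
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--train",
        default=os.environ.get("SM_CHANNEL_TRAIN", "/opt/ml/input/data/train"),
    )
    parser.add_argument(
        "--model-dir",
        default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model"),
    )
    return parser.parse_args(argv)


def main(argv=None):
    """Resolve the input CSV and the output model path for a training run."""
    args = parse_args(argv)
    data_path = os.path.join(args.train, "customer_data.csv")
    model_path = os.path.join(args.model_dir, "model.joblib")  # assumed file name
    # A real script would now load data_path (for example with pandas),
    # fit a scikit-learn model, and save it to model_path with joblib.dump.
    return data_path, model_path


# Local dry run with explicit paths instead of the container's environment.
print(main(["--train", "data", "--model-dir", "model"]))
```

In an actual `train.py`, `main()` would be called under the usual `if __name__ == "__main__":` guard and without the local dry run.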

Outcome: Learners run the training workflow end to end on the cloud and can then deploy the trained model, using managed services for scalability, monitoring, and secure storage.

Section 5 – Tips for Cloud Data Science Mastery

- Start with small datasets and scale up gradually

- Explore GPU/TPU acceleration for deep learning

- Use cloud-native ML services like SageMaker, Azure ML, or AutoML

- Understand cost monitoring to avoid unnecessary bills

- Document cloud workflows for reproducibility and team collaboration
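The cost-monitoring tip comes down to simple arithmetic under a pay-as-you-go model: cost = hours × hourly rate × number of instances. The sketch below applies it to the 24-hour versus 2-hour training times mentioned in Section 1. The hourly rates are purely hypothetical and are not real provider prices.

```python
def training_cost(hours: float, hourly_rate: float, instances: int = 1) -> float:
    """Pay-as-you-go cost of a training run: hours x rate x instance count."""
    return round(hours * hourly_rate * instances, 2)


# Hypothetical hourly rates in an arbitrary currency, NOT real provider prices.
CPU_RATE = 0.10
GPU_RATE = 1.00

cpu_cost = training_cost(hours=24, hourly_rate=CPU_RATE)  # 2.4
gpu_cost = training_cost(hours=2, hourly_rate=GPU_RATE)   # 2.0

print(f"CPU run: {cpu_cost}, GPU run: {gpu_cost}")
```

With these assumed rates, the GPU run is cheaper overall even though its hourly rate is ten times higher, which is why both runtime and rate should be estimated before choosing an instance type.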

CuriosityTech Story: Learners processed IoT sensor data using GCP BigQuery and deployed predictive maintenance models using AI Platform, demonstrating real-time insights at scale.

Section 6 – Cloud Comparison for Beginners

| Aspect | AWS | Azure | GCP |
| --- | --- | --- | --- |
| Ease of Learning | Medium, extensive docs | Beginner-friendly ML Studio | Beginner-friendly AutoML |
| GPU/TPU Support | GPU available | GPU available | GPU & TPU available |
| Managed ML Services | SageMaker, Comprehend | Azure ML, Cognitive Services | AutoML, AI Platform |
| Integration | Works well with all enterprise apps | Strong MS ecosystem | Strong with Python, Big Data |

Insight: Beginners can start with GCP AutoML or Azure ML Studio, then scale to AWS SageMaker for production.

Conclusion

Cloud platforms empower data scientists to process massive datasets, train complex ML models, and deploy AI solutions at scale. Mastering AWS, Azure, and GCP gives a competitive advantage in 2025’s data-driven industry.

At CuriosityTech, Nagpur, learners receive hands-on cloud training, real-world project experience, and expert guidance that prepare them for high-demand data science roles. Contact +91-9860555369 or contact@curiositytech.in, and follow Instagram: CuriosityTechPark or LinkedIn: Curiosity Tech for more resources.