Introduction

Robotics and AI are converging to create intelligent automation systems capable of performing complex tasks with little or no human intervention. Deep learning underpins much of modern robotics, enabling perception, decision-making, and adaptive control.

At CuriosityTech, learners in Nagpur gain hands-on exposure to robotics simulations, sensor integration, and deep learning models, preparing them for careers in autonomous robotics, industrial automation, and AI-driven robotics solutions.

1. Role of Deep Learning in Robotics

Deep learning enables robots to:

- Perceive the environment via cameras, LIDAR, or ultrasonic sensors

- Interpret complex sensor data using CNNs, RNNs, and transformers

- Plan and execute tasks using reinforcement learning and control algorithms

CuriosityTech Insight: Students learn that robotics is not just about hardware; software intelligence through deep learning is equally critical.

2. Core Applications of Deep Learning in Robotics

a) Computer Vision for Robots

- Enables object detection, recognition, and localization

- Techniques: CNNs for image recognition, YOLO for real-time object detection, Faster R-CNN where accuracy matters more than speed

- Applications: Industrial pick-and-place robots, warehouse automation, autonomous navigation
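Detectors such as YOLO and Faster R-CNN usually emit several overlapping boxes for the same object, so a pick-and-place pipeline has to reduce them to one box per object before planning a grasp. The sketch below illustrates the common post-processing step, intersection-over-union (IoU) followed by non-maximum suppression, in plain Python. The box coordinates, scores, and the 0.5 threshold are illustrative assumptions, not values from any particular model.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Keep the highest-scoring box in each cluster of overlapping boxes.

    detections: list of (box, score) pairs.
    """
    remaining = sorted(detections, key=lambda d: d[1], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        # Drop every remaining box that overlaps the chosen one too much.
        remaining = [d for d in remaining if iou(best[0], d[0]) < iou_threshold]
    return kept


# Hypothetical detector output: two near-duplicate boxes and one separate object.
detections = [
    ((10, 10, 50, 50), 0.90),
    ((12, 12, 52, 52), 0.75),
    ((100, 100, 140, 140), 0.80),
]
kept = non_max_suppression(detections)
```

In a real pipeline the detection framework normally performs this step itself; writing it out by hand is mainly useful for understanding why the threshold affects how closely packed objects are separated.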

b) Motion Planning & Control

- Reinforcement learning helps robots learn optimal movement policies

- Example: Robotic arm learning to grasp objects via trial-and-error in simulation

- Techniques: Deep Q-Learning, Policy Gradients, Proximal Policy Optimization (PPO)
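To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, the simpler relative of Deep Q-Learning in which a lookup table stands in for the neural network. The environment is a made-up one-dimensional track where a gripper starts at position 0 and must reach position 4; the rewards, learning rate, and episode count are assumptions chosen only to keep the example small.

```python
import random

N_STATES = 5          # positions 0..4 on a 1-D track; 4 is the grasp position
ACTIONS = (-1, +1)    # move one step left or right
GOAL = N_STATES - 1


def step(state, action):
    """Toy environment: small penalty per move, reward 1.0 on reaching the goal."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else -0.01
    return next_state, reward, next_state == GOAL


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(50):                      # cap on episode length
            # Epsilon-greedy: explore occasionally, otherwise act greedily.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update toward the bootstrapped target.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q_table = train()
greedy_policy = [
    ACTIONS[max(range(2), key=lambda i: q_table[s][i])] for s in range(GOAL)
]
```

After training, `greedy_policy` lists the preferred move in each non-goal position. Deep Q-Learning follows the same update rule but replaces `q` with a network, which is what makes image or joint-angle inputs tractable.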

c) Natural Language Understanding

- Robots can interpret human commands using NLP models

- Conversational interfaces: GPT-based systems or transformer-based command parsing

- Applications: Service robots, customer assistance, home automation

d) Sensor Fusion

- Combine visual, auditory, and tactile sensor data

- Enables robots to operate in dynamic and uncertain environments

- Example: Autonomous drones integrating camera + LIDAR for obstacle avoidance
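One simple way to combine two range readings, for instance a camera-based depth estimate and a LIDAR return, is inverse-variance weighting, where the more precise sensor gets the larger weight. The sketch below assumes each sensor reports a distance together with a noise variance; the numbers are invented for illustration, and production systems typically use a Kalman filter or a learned fusion network instead.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion.

    estimates: list of (value, variance) pairs from independent sensors.
    Returns the fused (value, variance).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings of the distance to an obstacle, in metres.
camera = (5.4, 0.25)   # noisier depth estimate
lidar = (5.0, 0.01)    # more precise range measurement
fused_distance, fused_variance = fuse([camera, lidar])
```

The fused value lands close to the LIDAR reading because its variance is much smaller, and the fused variance is lower than either input, which is the practical argument for fusing sensors rather than picking one.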

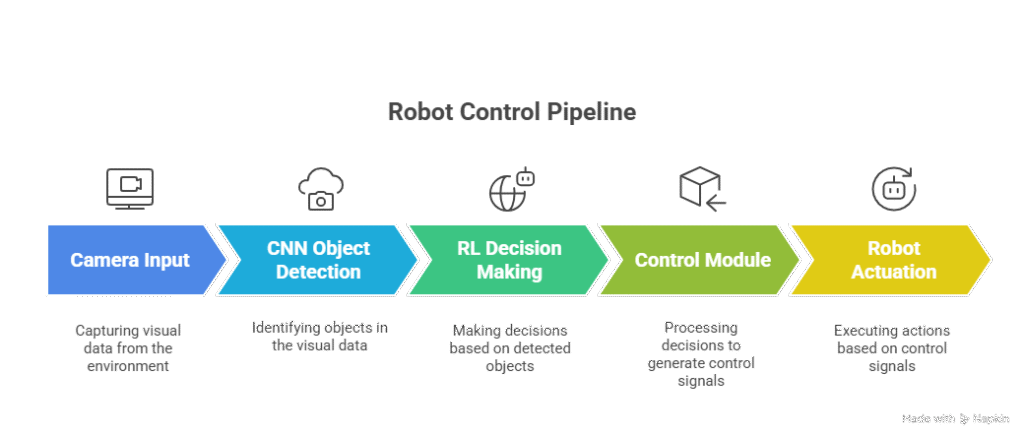

3. Hands-On Example: Robotic Arm with Deep Learning

- Simulation Platform: Use PyBullet, or ROS (Robot Operating System) paired with a simulator such as Gazebo

- Perception Module: CNN identifies objects on a table

- Decision Module: Reinforcement learning determines optimal grasp strategy

- Control Module: PID or RL policy drives arm actuators to pick and place objects
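For the control module, a PID loop is the classical baseline against which an RL policy can be compared. The following sketch drives a single simulated joint toward a target angle. The joint model (the controller output is treated directly as a velocity), the gains, and the time step are simplifying assumptions rather than values for any real arm.

```python
class PID:
    """Textbook PID controller operating on an error signal."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target=1.0, steps=2000, dt=0.01):
    """Toy joint: the command is integrated as a velocity (assumed model)."""
    pid = PID(kp=2.0, ki=0.5, kd=0.05)   # illustrative gains
    angle = 0.0
    for _ in range(steps):
        command = pid.update(target - angle, dt)
        angle += command * dt
    return angle


final_angle = simulate()
```

With these gains the simulated joint settles near the 1.0 rad target within the 20 simulated seconds. On real hardware the gains would need tuning against the actuator dynamics, and output limits would be added to protect the motor.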

Observation: CuriosityTech students observe real-time learning in simulation and understand the importance of iterative refinement and reward shaping in reinforcement learning.
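Reward shaping can be illustrated with potential-based shaping, a standard form in which the agent receives a bonus for moving to a state with higher "potential". In this sketch the potential is the negative distance between gripper and object, so moves toward the object are rewarded before any grasp succeeds. The function names, coordinates, and discount factor are hypothetical and chosen only for the example.

```python
import math


def potential(gripper_xy, object_xy):
    """Higher (less negative) when the gripper is closer to the object."""
    return -math.dist(gripper_xy, object_xy)


def shaped_reward(base_reward, prev_xy, next_xy, object_xy, gamma=0.99):
    """Base reward plus the potential-based shaping term gamma*phi(s') - phi(s)."""
    return (
        base_reward
        + gamma * potential(next_xy, object_xy)
        - potential(prev_xy, object_xy)
    )


obj = (0.5, 0.2)   # hypothetical object position on the table, in metres
toward = shaped_reward(0.0, (0.0, 0.0), (0.1, 0.04), obj)    # step toward the object
away = shaped_reward(0.0, (0.0, 0.0), (-0.1, -0.04), obj)    # step away from it
```

Here `toward` comes out positive and `away` negative, giving the agent a learning signal on every step instead of only at a successful grasp.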

4. Industrial & Real-World Applications

| Application | Description | Deep Learning Component |
| --- | --- | --- |
| Autonomous Drones | Navigation in complex terrain | CNN + RL + Sensor Fusion |
| Industrial Automation | Quality inspection and assembly | CNN for defect detection |
| Healthcare Robotics | Surgery assistance, rehabilitation | CNN + LSTM for movement prediction |
| Service Robots | Customer assistance, hospitality | NLP + Vision + Motion Planning |

CuriosityTech Example: Learners build pick-and-place robotic arms integrated with vision-based object detection pipelines, preparing them for industrial robotics roles.

5. Challenges in Robotics & AI

- Data Efficiency: Training RL models requires extensive simulation or real-world data

- Real-Time Constraints: Robots need low-latency perception and control

- Safety & Reliability: Critical for human-robot interaction

- Sim-to-Real Transfer: Policies trained in simulation often degrade on physical hardware, so adaptation strategies are needed

CuriosityTech Tip: Students use domain randomization and simulation-based training to reduce sim-to-real gaps and improve real-world performance.
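Domain randomization amounts to resampling the simulator's physical and visual parameters at the start of every training episode, so the policy cannot overfit to one exact setup. A minimal sketch follows. The parameter names and ranges are hypothetical, and applying them to a simulator (for example, setting friction or lighting in PyBullet) is left out.

```python
import random

# Hypothetical parameter ranges; real ranges depend on the robot and the scene.
PARAM_RANGES = {
    "light_intensity": (0.3, 1.5),
    "friction": (0.4, 1.2),
    "object_mass_kg": (0.05, 0.5),
    "camera_noise_std": (0.0, 0.05),
}


def sample_sim_params(rng):
    """Draw one random simulator configuration, uniform within each range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}


rng = random.Random(42)   # seeded so that runs are reproducible
episode_params = [sample_sim_params(rng) for _ in range(3)]
```

Each dictionary in `episode_params` would configure one training episode. Widening the ranges generally makes the policy more robust but slower to train, so they are usually tuned alongside the policy.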

6. Human Story

A CuriosityTech learner developed a warehouse automation robot:

- Initially struggled with object misidentification under variable lighting

- Implemented data augmentation and CNN fine-tuning, which improved detection accuracy

- Deployed reinforcement learning policies in simulation before transferring to a physical robotic arm

This experience emphasized the integration of perception, decision-making, and control in robotics—core skills for AI-driven automation careers.

7. Career Pathways in Robotics & AI

- Key Skills:

  - Deep learning for perception (CNNs, transformers)

  - Reinforcement learning for decision-making

  - ROS, simulation platforms, and sensor integration

  - Edge deployment and real-time control systems

- Portfolio Projects:

  - Pick-and-place robotic arm with vision-based guidance

  - Autonomous drone navigation with sensor fusion

  - Conversational service robot with NLP and vision integration

CuriosityTech Insight: Students who showcase full-stack robotics projects gain an edge in roles such as Robotics Engineer, AI Engineer, Autonomous Systems Developer, and Industrial Automation Specialist.

Conclusion

Deep learning is revolutionizing robotics, enabling autonomous, intelligent, and adaptive systems. At CuriosityTech, learners gain hands-on experience in perception, planning, control, and deployment, preparing them for high-demand careers in AI-powered robotics, industrial automation, and next-generation autonomous systems.