Introduction

In 2025, transfer learning is a cornerstone technique in machine learning: engineers reuse existing pretrained models for new tasks, which significantly reduces training time and computational cost.

At curiositytech.in (Nagpur, Wardha Road, Gajanan Nagar), learners explore transfer learning in computer vision, NLP, and other domains, understanding both the theoretical foundations and practical hands-on workflows for production-ready ML systems.

1. What is Transfer Learning?

Definition: Transfer learning is a technique where a model trained on one task is adapted to a different but related task. Instead of training a model from scratch, engineers can reuse learned features, improving efficiency and performance.

Key Benefits:

- Reduces training time

- Requires fewer labeled samples

- Leverages knowledge from large-scale datasets

- Enables rapid prototyping for new tasks

Scenario Storytelling: Riya at curiositytech.in fine-tunes a pretrained ResNet50 model for a custom medical imaging dataset, achieving high accuracy without training from scratch.

2. When to Use Transfer Learning

- Limited labeled data: Small datasets are rarely enough to train a deep model from scratch

- High computational cost: Pretrained models reduce GPU hours

- Similar domain: Target task is related to source task

- Rapid prototyping: Quick deployment of models for testing

3. Types of Transfer Learning

| Type | Description | Example |
| --- | --- | --- |
| Feature Extraction | Use pretrained model layers as fixed feature extractors | Image embeddings from ResNet for classification |
| Fine-Tuning | Update some or all layers of the pretrained model on the new dataset | Retraining last few layers of BERT for sentiment analysis |
| Domain Adaptation | Adapt model to a new but related domain | Adapting a general speech recognition model to medical domain audio |
| Multi-Task Learning | Train a model to perform multiple related tasks simultaneously | Simultaneous object detection and segmentation |

CuriosityTech Insight: Beginners often start with feature extraction and gradually move to fine-tuning for better performance and domain adaptation.
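
In code, the difference between feature extraction and fine-tuning comes down to which parameters receive gradients. The sketch below uses a small stand-in network rather than a real pretrained model; the `make_model`, `set_feature_extraction`, `set_fine_tuning`, and `count_trainable` helpers are hypothetical names chosen only for illustration.

```python
import torch.nn as nn


def make_model(num_classes: int = 3) -> nn.Sequential:
    # Stand-in for a pretrained network: a "backbone" followed by a task head.
    backbone = nn.Sequential(nn.Linear(16, 8), nn.ReLU())
    head = nn.Linear(8, num_classes)
    return nn.Sequential(backbone, head)


def set_feature_extraction(model: nn.Sequential) -> None:
    # Freeze the backbone; only the head stays trainable.
    for param in model[0].parameters():
        param.requires_grad = False
    for param in model[1].parameters():
        param.requires_grad = True


def set_fine_tuning(model: nn.Sequential) -> None:
    # Unfreeze everything so all layers are updated on the new dataset.
    for param in model.parameters():
        param.requires_grad = True


def count_trainable(model: nn.Module) -> int:
    # Number of scalar parameters that will receive gradient updates.
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


model = make_model()

set_feature_extraction(model)
head_only = count_trainable(model)   # head: 8*3 weights + 3 biases = 27

set_fine_tuning(model)
all_params = count_trainable(model)  # backbone 16*8 + 8 = 136, plus head 27 = 163

print(head_only, all_params)
```

With a real pretrained network the same pattern applies, only the frozen part is far larger, which is why feature extraction is cheaper and less prone to overfitting on small datasets.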

4. Popular Pretrained Models

Computer Vision:

- ResNet, VGG, Inception, EfficientNet

- Applications: Image classification, object detection, segmentation

Natural Language Processing:

- BERT, GPT, RoBERTa, DistilBERT

- Applications: Sentiment analysis, text classification, question-answering

Audio / Speech:

- Wav2Vec, DeepSpeech

- Applications: Speech recognition, audio classification

Scenario Storytelling: Arjun at CuriosityTech Park uses BERT for sentiment analysis on customer reviews, fine-tuning it on a smaller, domain-specific dataset, achieving production-grade performance in days instead of weeks.

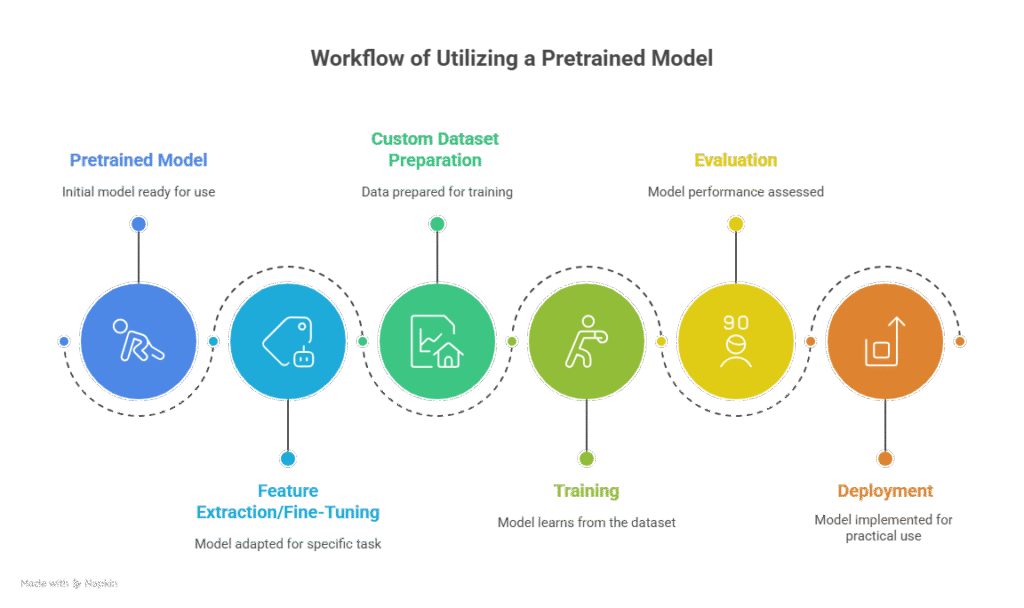

5. Transfer Learning Workflow

Stepwise Workflow:

- Select Pretrained Model: Choose architecture suitable for task

- Load Pretrained Weights: Leverage models from PyTorch, TensorFlow, Hugging Face

- Freeze Layers (Optional): Prevent early layers from updating to retain generic features

- Add Task-Specific Layers: Add classification or regression heads

- Train on New Dataset: Use small learning rates for fine-tuning

- Evaluate Model: Metrics like accuracy, F1-score, ROC-AUC depending on task

- Deploy: Serve via Flask, FastAPI, or cloud ML platforms

6. Hands-On Example: Image Classification with ResNet50

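```python
import torch
import torch.nn as nn
from torchvision import models, transforms, datasets

# Load ResNet50 with ImageNet-pretrained weights
# (torchvision >= 0.13; older releases used pretrained=True)
model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

# Freeze all layers so the backbone acts as a fixed feature extractor
for param in model.parameters():
    param.requires_grad = False

# Replace final layer for new classification task (e.g., 3 classes)
num_ftrs = model.fc.in_features
model.fc = nn.Linear(num_ftrs, 3)

# Define loss and optimizer (only the new head is optimized)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.fc.parameters(), lr=1e-4)

# Dataset & DataLoader setup: resize to a fixed size so images can be batched,
# and normalize with the ImageNet statistics the pretrained weights expect
preprocess = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
train_dataset = datasets.ImageFolder('data/train', transform=preprocess)
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=32, shuffle=True)

# Training loop (simplified)
model.train()
for epoch in range(5):
    for inputs, labels in train_loader:
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

print("Model fine-tuned successfully!")
```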

CuriosityTech Insight: Students fine-tune pretrained models in less than a day, enabling rapid deployment and experimentation.
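
Once training finishes, the model is usually scored on held-out data before anyone trusts it. A minimal sketch of such an evaluation pass follows; the `evaluate` helper, the toy linear model, and the synthetic tensors are assumptions for illustration and are not part of the example above.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model: nn.Module, loader: DataLoader) -> float:
    """Return top-1 accuracy of `model` over all batches in `loader`."""
    model.eval()  # disable dropout, use BatchNorm running statistics
    correct, total = 0, 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for inputs, labels in loader:
            preds = model(inputs).argmax(dim=1)
            correct += (preds == labels).sum().item()
            total += labels.size(0)
    return correct / total


# Synthetic stand-in data: 10 samples, 4 features, 3 classes.
torch.manual_seed(0)
features = torch.randn(10, 4)
labels = torch.randint(0, 3, (10,))
val_loader = DataLoader(TensorDataset(features, labels), batch_size=4)

toy_model = nn.Linear(4, 3)  # placeholder for the trained network
accuracy = evaluate(toy_model, val_loader)
print(f"validation accuracy: {accuracy:.2f}")
```

For the ResNet50 example, the same function could be called with the trained model and a `DataLoader` built from a validation folder that uses the same preprocessing as training.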

7. Evaluation Metrics

| Task | Metric | Description |
| --- | --- | --- |
| Classification | Accuracy, F1-score | Measure prediction correctness |
| Object Detection | mAP (mean Average Precision) | Measures detection performance |
| NLP | BLEU, ROUGE | Evaluates language generation or summarization |
| Regression | RMSE, MAE | Error in predicted numerical values |

Scenario: Riya evaluates the fine-tuned ResNet50 on a holdout test set, achieving 95% accuracy on a small medical image classification dataset.
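
For reference, the classification and regression metrics in the table can be computed directly from predictions. The following is a minimal pure-Python sketch on made-up labels; the function names are hypothetical, and in practice a library such as scikit-learn would typically be used instead.

```python
import math


def accuracy(y_true, y_pred):
    # Fraction of predictions that match the true label.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_binary(y_true, y_pred, positive=1):
    # Harmonic mean of precision and recall for the positive class.
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def mae(y_true, y_pred):
    # Mean absolute error.
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    # Root mean squared error.
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Made-up binary labels and predictions.
labels = [1, 0, 1, 1, 0, 1]
preds = [1, 0, 0, 1, 1, 1]
acc = accuracy(labels, preds)
f1 = f1_binary(labels, preds)
print(round(acc, 3), round(f1, 3))  # 0.667 0.75

# Made-up regression targets and predictions.
targets = [2.0, 4.0, 6.0]
estimates = [3.0, 4.0, 4.0]
err_mae = mae(targets, estimates)
err_rmse = rmse(targets, estimates)
print(round(err_mae, 3), round(err_rmse, 3))  # 1.0 1.291
```

On imbalanced datasets, which are common in medical imaging, accuracy alone can look high while the minority class is mostly missed, so F1 is worth reporting alongside it.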

8. Real-World Applications

| Domain | Application | Pretrained Model Example |
| --- | --- | --- |
| Computer Vision | Medical image classification | ResNet50, EfficientNet |
| NLP | Sentiment analysis | BERT, RoBERTa |
| Speech | Voice command recognition | Wav2Vec |
| Robotics | Perception for navigation | YOLO, EfficientDet |
| E-commerce | Image-based product recommendation | VGG, Inception |

At CuriosityTech.in, learners build real-world solutions using pretrained models, preparing them to integrate transfer learning into production ML pipelines.

9. Best Practices

- Start with Feature Extraction: Avoid overfitting on small datasets

- Use Small Learning Rates: Fine-tuning requires careful adjustment

- Regularization: Use dropout, data augmentation to prevent overfitting

- Monitor Training: Track validation metrics closely

- Leverage Cloud GPUs: For faster fine-tuning and experimentation

10. Key Takeaways

- Transfer learning reduces training time and improves performance for small or domain-specific datasets

- Feature extraction and fine-tuning are core skills for ML engineers in 2025

- Pretrained models span vision, NLP, and speech, enabling versatile applications

- Hands-on experience is essential for deploying real-world ML systems efficiently

Conclusion

Transfer learning empowers ML engineers to adapt pretrained models for new tasks, saving time, cost, and effort while achieving high performance. Mastery involves understanding:

- Theory of transfer learning

- Selection and adaptation of pretrained models

- Fine-tuning workflows and evaluation

- Deployment strategies for production-ready ML

curiositytech.in provides practical workshops and projects on transfer learning, helping learners build end-to-end ML solutions efficiently.

Contact +91-9860555369 or contact@curiositytech.in to get hands-on experience with pretrained models and transfer learning workflows.