Introduction

Every skyscraper rests on a solid foundation, and in the same way, deep learning rests on neural networks. If you understand neural networks deeply, every other concept—CNNs, RNNs, Transformers—becomes far easier.

At CuriosityTech.in (Nagpur), where learners build their first AI projects before scaling to industry-level applications, the journey always begins with Neural Networks 101. In fact, in our labs at Wardha Rd, Gajanan Nagar, beginners start by building a simple handwritten digit classifier (MNIST dataset) — their first glimpse of how machines “think.”

1. What is a Neural Network?

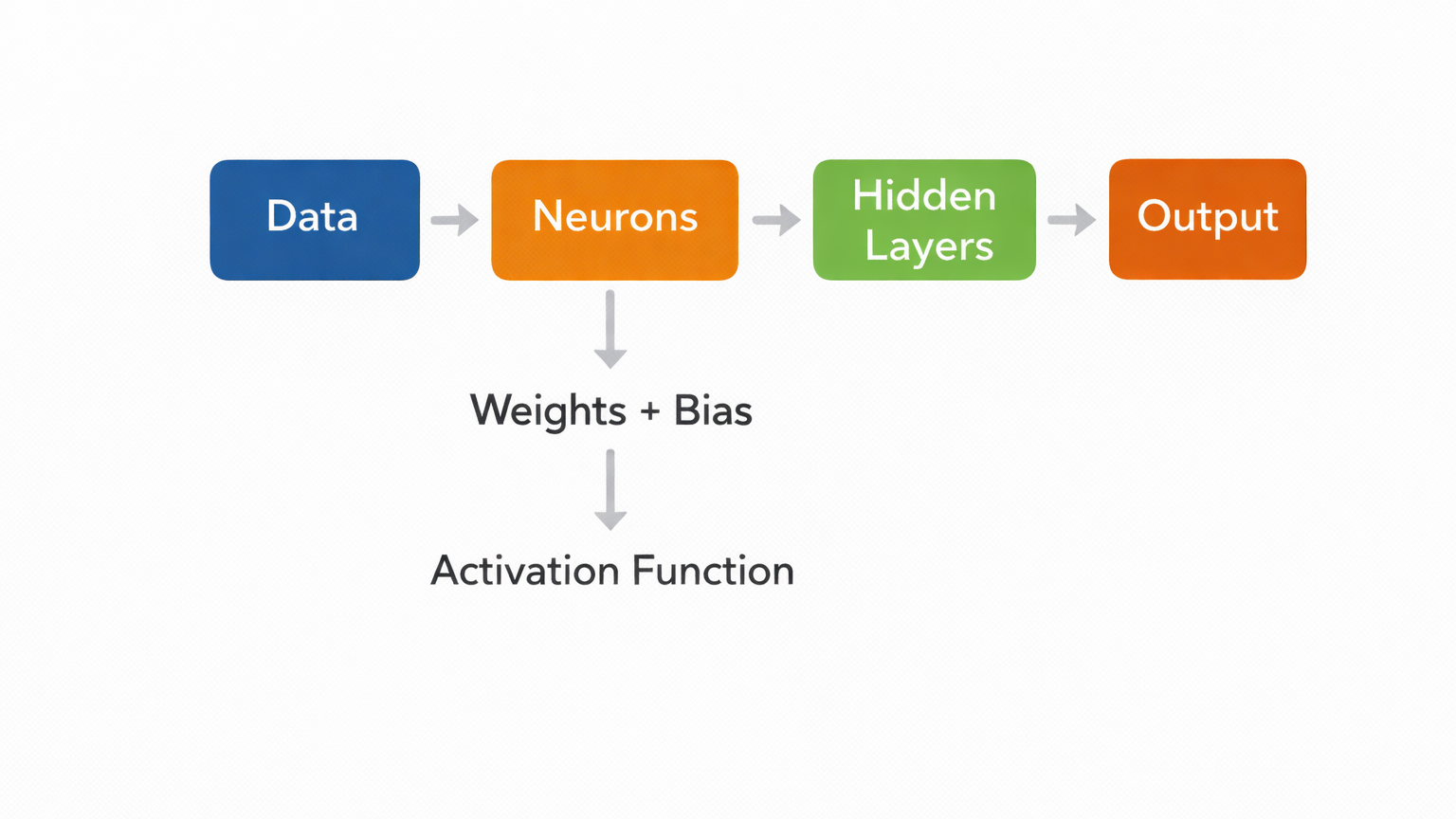

At its core, a neural network is a mathematical function inspired by the human brain. It takes inputs, processes them through hidden layers, and produces an output.

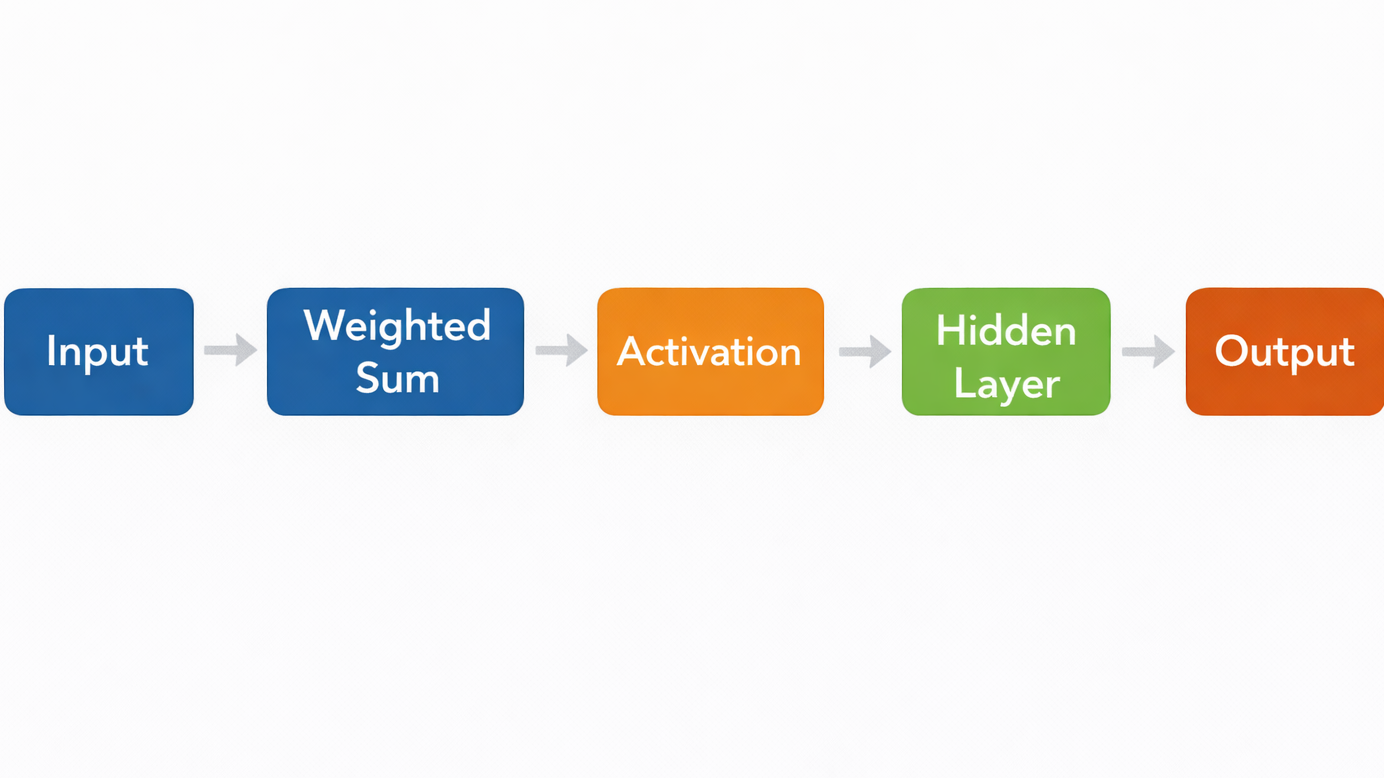

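Think of it like a factory assembly line: raw materials (inputs) enter, each station (layer) transforms them a little further, and a finished product (output) leaves at the end.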

2. The Anatomy of a Neural Network

Let’s break it into components:

| Component | Description | Analogy |
| --- | --- | --- |
| Neuron | The basic unit that processes data. | Worker in a factory. |
| Input Layer | First layer, where raw data enters. | Truck unloading raw materials. |
| Hidden Layers | Intermediate layers that transform data. | Assembly line stations. |
| Weights | Parameters that decide the importance of each input. | Worker’s attention on specific parts. |
| Bias | Shifts the output to fit better. | Extra resource to fine-tune production. |
| Activation Function | Non-linear function that decides how strongly the neuron “fires.” | Quality check gate at each station. |
| Output Layer | Final result of the network. | Finished product leaving the factory. |
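To make the table concrete, here is a minimal sketch of a single neuron in plain Python. The function names (`relu`, `sigmoid`, `neuron`) and the example numbers are assumptions chosen for illustration; they do not come from any particular library.

```python
import math

def relu(z):
    """ReLU activation: pass positive values through, clamp negatives to 0."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid activation: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs, plus the bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical example values: three inputs, one weight per input.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.5

out_relu = neuron(inputs, weights, bias, relu)
out_sigmoid = neuron(inputs, weights, bias, sigmoid)
print(out_relu, out_sigmoid)
```

With these values the weighted sum is -0.25, so ReLU outputs 0 (the neuron stays silent) while sigmoid outputs a value slightly below 0.5. The same inputs and weights can give quite different outputs depending on the activation function.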

3. How Information Flows (Forward Propagation)

- Input data is multiplied by weights.

- A bias is added.

- The sum passes through an activation function (ReLU, Sigmoid, etc.).

- The output flows to the next layer.

- The process repeats until the output layer predicts a result.
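The steps above can be sketched as a small forward pass in plain Python. This is an illustrative sketch, not a production implementation: the helper names (`dense_forward`, `forward`) and the hand-picked weights are assumptions, and real frameworks do the same arithmetic with optimized matrix operations.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_forward(inputs, weights, biases, activation):
    """Forward pass through one fully connected layer.

    weights[j] holds the incoming weights of neuron j; biases[j] is its bias.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(activation(z))
    return outputs

def forward(inputs, layers):
    """Feed the inputs through every layer in order and return the final output."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_forward(activations, weights, biases, activation)
    return activations

# Hypothetical parameters: 2 inputs -> 2 hidden neurons (ReLU) -> 1 output (sigmoid).
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[1.0, -2.0]], [0.0], sigmoid),
]

prediction = forward([2.0, 1.0], layers)
print(prediction)  # [0.5]
```

Here the hidden layer produces `[1.0, 0.5]`, the output neuron's weighted sum is exactly 0, and the sigmoid of 0 is 0.5.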

Visual Flow (Text Diagram):

Input → (inputs × weights + bias) → Activation function → Next layer → ... → Output

4. Learning in Neural Networks (Backpropagation)

Neural networks learn by trial and error:

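- They make a prediction.

- They compare it with the correct answer using a loss function.

- They use backpropagation to work out how much each weight contributed to the error.

- They adjust the weights in the direction that reduces the loss, using gradient descent.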

This cycle repeats thousands (or millions) of times until accuracy improves.
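As a rough illustration of this loop, the sketch below trains a single sigmoid neuron with gradient descent in plain Python. The toy dataset (logical OR), the learning rate, and the epoch count are assumptions picked for the example. With only one neuron, the gradient can be written directly; backpropagation generalizes the same idea to many layers through the chain rule.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)

def mean_loss(data, weights, bias):
    """Binary cross-entropy averaged over the dataset."""
    total = 0.0
    for x, y in data:
        p = predict(x, weights, bias)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)

def train(data, learning_rate=0.5, epochs=2000):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            # Gradient of the loss w.r.t. the weighted sum (sigmoid + cross-entropy).
            error = predict(x, weights, bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        # Gradient descent step: move each parameter against its average gradient.
        weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(data)
    return weights, bias

# Toy dataset (an assumption for illustration): the logical OR of two inputs.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

loss_before = mean_loss(data, [0.0, 0.0], 0.0)
weights, bias = train(data)
loss_after = mean_loss(data, weights, bias)
print(loss_before, loss_after)
```

Before training every prediction is 0.5, so the loss starts at about 0.693 (ln 2). After training it should be far lower, and the neuron should predict below 0.5 only for the input `[0, 0]`.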

5. Historical Evolution of Neural Networks

- 1943: McCulloch & Pitts introduce the first artificial neuron.

- 1958: Frank Rosenblatt develops the Perceptron.

- 1986: Rumelhart, Hinton, and Williams popularize the backpropagation algorithm, making multi-layer training practical.

- 2012: AlexNet, a deep CNN, wins the ImageNet competition by a wide margin and kicks off the modern deep learning boom.

- 2020–2025: Transformers & LLMs (like ChatGPT) dominate NLP and multimodal AI.

6. Simple Example (Code Snippet)

To make this real, here’s a beginner-friendly TensorFlow snippet (often the very first project for learners at CuriosityTech labs):

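```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Input

# Neural network for binary classification on 4 input features
model = Sequential([
    Input(shape=(4,)),               # 4 input features per example
    Dense(8, activation='relu'),     # first hidden layer
    Dense(4, activation='relu'),     # second hidden layer
    Dense(1, activation='sigmoid'),  # output: probability of the positive class
])

# Adam optimizer with binary cross-entropy loss; track accuracy during training
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```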

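This simple three-layer network (two hidden layers plus an output layer) is compiled but not yet trained. It starts learning patterns only once you call model.fit on labeled examples.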
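It can help to count how many weights and biases the snippet above actually has to learn. The sketch below does that arithmetic in plain Python; the helper name `dense_param_count` is an assumption made for this example, and the total should match what Keras reports for this model in `model.summary()`.

```python
def dense_param_count(n_inputs, n_units):
    """Parameters in a fully connected layer: one weight per input per unit, plus one bias per unit."""
    return n_inputs * n_units + n_units

# Layer sizes from the snippet above: 4 input features -> 8 -> 4 -> 1.
layer_sizes = [4, 8, 4, 1]

per_layer = [
    dense_param_count(n_in, n_out)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]
total_params = sum(per_layer)
print(per_layer, total_params)  # [40, 36, 5] 81
```

Even this tiny network has 81 trainable parameters, and each one is adjusted a little on every training step.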

7. Why Neural Networks Matter in Careers

For AI Engineers and Deep Learning Specialists, neural networks are:

- The entry point to advanced architectures.

- One of the most discussed topics in interviews.

- The foundation of research papers and projects.

At CuriosityTech.in, students practice from small-scale networks like MNIST digit recognition to large-scale projects like image classifiers, speech recognition, and text summarizers.

8. Neural Networks vs Human Brain

| Aspect | Human Brain | Artificial Neural Network |
| --- | --- | --- |
| Neuron Count | ~86 billion neurons | Thousands to billions (depending on architecture) |
| Processing | Parallel, energy-efficient | Parallel, but hardware-intensive (GPUs/TPUs) |
| Learning | Through experience and association | Through data and optimization algorithms |
| Adaptability | Extremely high | Limited to trained tasks |

9. Infographic (Textual Representation)

10. Career Path (Neural Networks to Expertise)

Step 1 – Learn Neural Network Basics

- Study perceptrons, forward/backward propagation.

Step 2 – Practice with Datasets

- MNIST, CIFAR-10, IMDB sentiment analysis.

Step 3 – Master Frameworks

- TensorFlow, PyTorch (hands-on at CuriosityTech Nagpur).

Step 4 – Dive into Architectures

- CNNs (images), RNNs (sequences), Transformers (text).

Step 5 – Contribute to Real Projects

- Healthcare imaging, finance fraud detection, chatbot systems.

11. Human Touch

A student once asked during a CuriosityTech session on Instagram: “How do I know I’m really learning and not just copying tutorials?”

The answer is simple: when you can explain a neural network to a 10-year-old without code, you’ve mastered it.

Conclusion

Neural networks are the foundation of deep learning careers. Without mastering them, it’s impossible to scale to CNNs, NLP models, or LLMs. For anyone serious about AI in 2025, understanding neural networks isn’t optional — it’s mandatory.

With structured learning, guided mentorship, and practical projects — the kind provided at CuriosityTech.in (contact@curiositytech.in, +91-9860555369) — you can transform from a curious beginner into a confident AI Engineer.