Introduction

While CNNs excel at understanding images, Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs) are designed for sequential data. This includes text, speech, and time series such as stock prices.

At CuriosityTech.in, learners explore RNNs and LSTMs through projects like sentiment analysis, stock prediction, and chatbot creation. By handling sequential patterns, students gain insight into how AI models “remember” past information to make better predictions.

1. What is an RNN?

A Recurrent Neural Network is a neural network with loops: the hidden state computed at one time step is fed back into the network at the next step, which lets it maintain state information. Unlike traditional feedforward networks, RNNs can remember previous inputs, making them suitable for sequences.

Analogy: Think of reading a book: understanding the current sentence depends on remembering previous sentences.

Structure Diagram (Text Representation):

x1 → [RNN Cell] → h1 → y1

x2 → [RNN Cell] → h2 → y2

x3 → [RNN Cell] → h3 → y3

…

- x = input at each time step

- h = hidden state (memory), carried forward from each time step into the next cell

- y = output

2. Challenges of Standard RNNs

- Vanishing Gradient: Gradients shrink during backpropagation, making it hard to learn long-term dependencies.

- Exploding Gradient: Gradients grow excessively, causing instability.

- Limited ability to capture long-term dependencies in sequences.

These limitations motivated the development of LSTMs.
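To make the vanishing and exploding gradient problems concrete, here is a minimal sketch in Python. It assumes a simplified scalar picture in which the gradient is multiplied by the same factor at every time step during backpropagation; in a real RNN that factor depends on the weights and activation derivatives. The function name `gradient_scale` is illustrative only.

```python
def gradient_scale(per_step_factor: float, num_steps: int) -> float:
    """Scale applied to a gradient after flowing back through num_steps steps.

    Assumes the same multiplicative factor at every step (a simplification).
    """
    scale = 1.0
    for _ in range(num_steps):
        scale *= per_step_factor
    return scale


for factor in (0.5, 1.0, 1.5):
    scale = gradient_scale(factor, 50)
    print(f"factor={factor}: after 50 steps the gradient is scaled by {scale:.3e}")
```

A factor below 1 shrinks the gradient toward zero (vanishing), while a factor above 1 blows it up (exploding). Only a factor close to 1 keeps the signal usable over long sequences.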

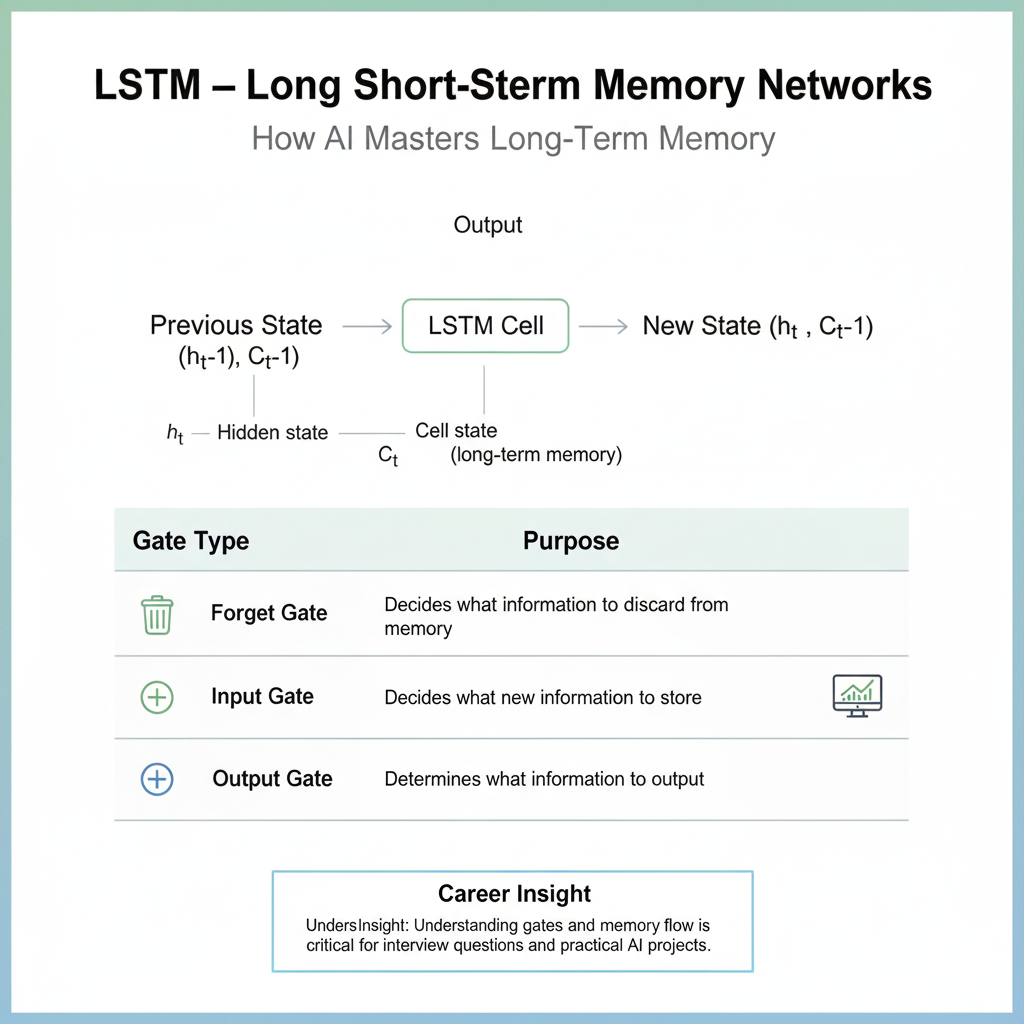

3. LSTM – Long Short-Term Memory Networks

LSTMs are a type of RNN designed to retain long-term memory using gates:

| Gate Type | Purpose |
| --- | --- |
| Forget Gate | Decides what information to discard from memory |
| Input Gate | Decides what new information to store |
| Output Gate | Determines what information to output |

Textual Diagram:

Current Input (x_t) + Previous State (h_t-1, C_t-1) → LSTM Cell → New State (h_t, C_t) → Output

- h_t: Hidden state

- C_t: Cell state (long-term memory)
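The gates and the two states can be sketched as a single LSTM time step in NumPy. This is a minimal illustration of the standard LSTM equations, not the code of any particular library. The function names, the stacked weight layout (forget, input, candidate, output), and the random initial values are assumptions made for this sketch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, hidden + input_dim); its rows are stacked as
    [forget, input, candidate, output]. This layout is an assumption of the sketch.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])   # forget gate: what to discard from C
    i = sigmoid(z[1 * hidden:2 * hidden])   # input gate: what new info to store
    g = np.tanh(z[2 * hidden:3 * hidden])   # candidate values to write
    o = sigmoid(z[3 * hidden:4 * hidden])   # output gate: what to expose
    c_t = f * c_prev + i * g                # updated cell state (long-term memory)
    h_t = o * np.tanh(c_t)                  # updated hidden state
    return h_t, c_t


rng = np.random.default_rng(0)
input_dim, hidden = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden, hidden + input_dim))
b = np.zeros(4 * hidden)
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in rng.normal(size=(5, input_dim)):  # a sequence of 5 time steps
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)
```

Note that the cell state is updated by element-wise scaling and addition rather than by a full matrix multiplication, which is what helps information (and gradients) survive across many time steps.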

Career Insight: Understanding gates and memory flow is critical for interview questions and practical AI projects.

4. Applications of RNNs & LSTMs

- Natural Language Processing:

  - Sentiment analysis

  - Language translation

  - Chatbots

- Time Series Prediction:

  - Stock price forecasting

  - Weather prediction

- Speech Recognition:

  - Voice assistants like Alexa or Google Assistant

Example at CuriosityTech: Students built an RNN-based sentiment analysis model using Twitter data. Using LSTM layers allowed the model to understand context over longer sentences, improving accuracy from 70% to 88%.

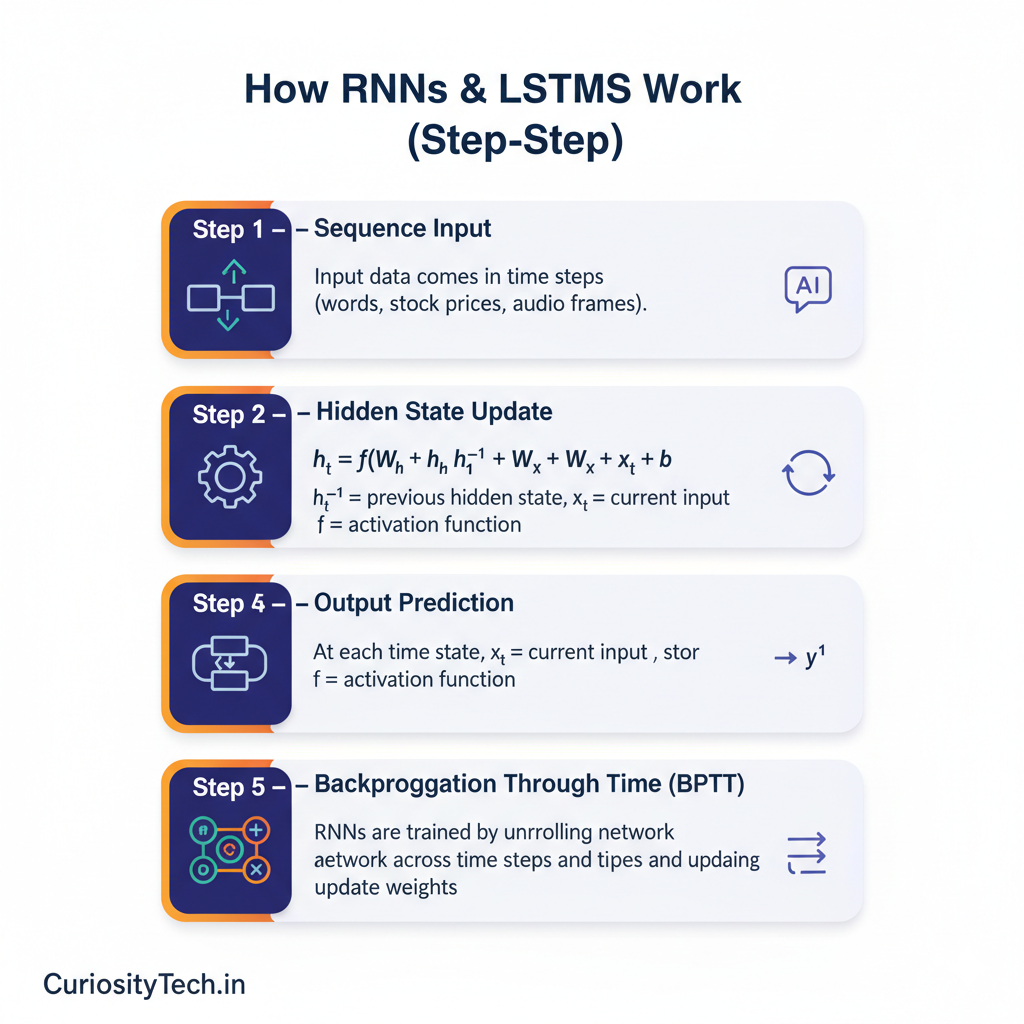

5. How RNNs & LSTMs Work (Step-by-Step)

Step 1 – Sequence Input: Input data comes in time steps (words, stock prices, audio frames).

Step 2 – Hidden State Update:

h_t = f(W_h * h_t-1 + W_x * x_t + b)

Where:

- h_t-1 = previous hidden state

- x_t = current input

- f = activation function (commonly tanh)

- W_h, W_x = weight matrices, shared across all time steps

- b = bias vector
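The hidden state update above can be sketched in a few lines of NumPy. This is a minimal illustration that assumes tanh as the activation f, a zero initial hidden state, and random weights; the function name `rnn_forward` and the chosen sizes are hypothetical.

```python
import numpy as np


def rnn_forward(xs, W_h, W_x, b):
    """Apply h_t = tanh(W_h @ h_{t-1} + W_x @ x_t + b) over a sequence.

    xs has shape (time_steps, input_dim); returns all hidden states.
    """
    h = np.zeros(W_h.shape[0])          # h_0: initial hidden state (assumed zero)
    states = []
    for x_t in xs:
        h = np.tanh(W_h @ h + W_x @ x_t + b)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(42)
time_steps, input_dim, hidden = 6, 2, 3
xs = rng.normal(size=(time_steps, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden, hidden))
W_x = rng.normal(scale=0.5, size=(hidden, input_dim))
b = np.zeros(hidden)

hidden_states = rnn_forward(xs, W_h, W_x, b)
print(hidden_states.shape)
```

The same W_h, W_x, and b are reused at every step; only the hidden state h changes as the sequence is read.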

Step 3 – Output Prediction: At each time step, the network predicts y_t.

Step 4 – Backpropagation Through Time (BPTT): RNNs are trained by unrolling the network across time steps, propagating the loss gradient backward through every step, and updating the weights, which are shared across all steps.
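As a rough illustration of BPTT, the sketch below trains a scalar RNN for one step in pure Python. It assumes a tanh activation, a single loss on the final hidden state, and plain gradient descent; the names `forward`, `loss`, and `bptt`, along with the example inputs and learning rate, are made up for this example.

```python
import math


def forward(w, u, xs):
    """Run a scalar RNN h_t = tanh(w * h_{t-1} + u * x_t); return [h_0, ..., h_T]."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def loss(w, u, xs, target):
    """Squared error between the final hidden state and the target."""
    return 0.5 * (forward(w, u, xs)[-1] - target) ** 2


def bptt(w, u, xs, target):
    """Gradients of the loss with respect to the shared weights w and u."""
    hs = forward(w, u, xs)
    dw = du = 0.0
    dh = hs[-1] - target                      # dLoss/dh_T
    for t in range(len(xs), 0, -1):           # walk backwards through time
        da = dh * (1.0 - hs[t] ** 2)          # through tanh at step t
        dw += da * hs[t - 1]                  # the same w is reused at every step
        du += da * xs[t - 1]
        dh = da * w                           # pass the gradient on to h_{t-1}
    return dw, du


xs, target = [0.5, -0.1, 0.3, 0.8], 0.2
w, u, lr = 0.7, 0.4, 0.1
dw, du = bptt(w, u, xs, target)
w_new, u_new = w - lr * dw, u - lr * du       # one gradient-descent update
print(loss(w, u, xs, target), "->", loss(w_new, u_new, xs, target))
```

Because `dh` is multiplied by `w` and a tanh derivative at every step of the backward loop, this is also where vanishing and exploding gradients come from on long sequences.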

Step 5 – LSTM Gates: Forget, input, and output gates regulate memory flow, allowing long-term dependency learning.

6. Practical Example

Python + Keras (CuriosityTech Labs Example):

    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import LSTM, Dense, Embedding

    model = Sequential([
        Embedding(input_dim=5000, output_dim=64),  # 5,000-word vocabulary, 64-dim vectors
        LSTM(128, return_sequences=False),         # return only the final hidden state
        Dense(1, activation='sigmoid')             # probability of the positive class
    ])

    model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

Explanation:

- Embedding: Converts words into vectors

- LSTM layer: Learns sequential patterns

- Dense output: Sigmoid for binary sentiment classification
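The Embedding layer above expects each sentence as a sequence of integer word ids below 5000, so raw text has to be encoded first. The pure-Python sketch below shows one way this could be done. The helper names `build_vocab` and `encode`, the reserved ids for padding and unknown words, and the maximum length of 10 are all assumptions for illustration; in practice a ready-made tool such as the Keras `TextVectorization` layer would typically handle this step.

```python
from collections import Counter

PAD, UNK = 0, 1  # reserved ids for padding and unknown words (an assumption)


def build_vocab(texts, max_size=5000):
    """Map the most frequent words to integer ids below max_size."""
    counts = Counter(word for text in texts for word in text.lower().split())
    most_common = counts.most_common(max_size - 2)  # leave room for PAD and UNK
    return {word: idx + 2 for idx, (word, _) in enumerate(most_common)}


def encode(text, vocab, max_len=10):
    """Convert text to a fixed-length list of word ids, padding at the end."""
    ids = [vocab.get(word, UNK) for word in text.lower().split()][:max_len]
    return ids + [PAD] * (max_len - len(ids))


texts = ["great movie loved it", "terrible movie hated it"]
vocab = build_vocab(texts)
print(encode("loved it", vocab))
print(encode("boring movie", vocab))
```

Every encoded sentence has the same length, so a batch of them can be stacked into a single array and passed to the model.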

Career Tip: Hands-on projects like this demonstrate both sequence modeling and practical deep learning skills to employers.

7. Comparison Table: RNN vs LSTM

| Feature | RNN | LSTM |
| --- | --- | --- |
| Memory | Short-term | Long-term |
| Gates | None | Forget, Input, Output |
| Vanishing Gradient | Likely | Mitigated |
| Use Cases | Short sequences | Long sequences, NLP, time series |
| Complexity | Low | Higher |
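The "Complexity" row can be made concrete by counting trainable parameters. The sketch below assumes a standard LSTM without peephole connections and one bias vector per gate; the function names are illustrative, and the sizes (64-dimensional input, 128 hidden units) are borrowed from the Keras example in Section 6.

```python
def simple_rnn_params(input_dim: int, hidden: int) -> int:
    """Weights for W_x (hidden x input_dim), W_h (hidden x hidden), and bias b."""
    return hidden * input_dim + hidden * hidden + hidden


def lstm_params(input_dim: int, hidden: int) -> int:
    """An LSTM has four such weight sets: three gates plus the candidate update."""
    return 4 * simple_rnn_params(input_dim, hidden)


print(simple_rnn_params(64, 128))  # 24704
print(lstm_params(64, 128))        # 98816
```

Under these assumptions an LSTM layer has four times as many parameters as a simple RNN layer of the same size, which is the cost of the added gates.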

8. Human Story

During a CuriosityTech workshop, a student working on stock price prediction struggled with standard RNNs because the network forgot trends from earlier days. After switching to LSTMs, the model could capture weekly and monthly patterns, boosting accuracy dramatically.

This story highlights why understanding sequential modeling deeply is essential for career-ready AI engineers.

Conclusion

RNNs and LSTMs are essential tools for sequential data processing. Mastering these models allows beginners to tackle NLP, time series, and speech recognition tasks. At CuriosityTech.in, learners gain hands-on experience, ensuring they not only understand the theory but can build deployable AI projects that impress recruiters and clients alike.