Introduction

Machine vision and image processing are at the heart of intelligent robotics. They allow robots to perceive, interpret, and interact with their environment visually. From autonomous vehicles detecting pedestrians to industrial robots inspecting products, machine vision provides the “eyes” for modern robots.

At curiositytech.in, learners can access detailed tutorials, hands-on projects, and visual examples to bridge the gap between theory and real-world robotic vision applications.

1. What is Machine Vision?

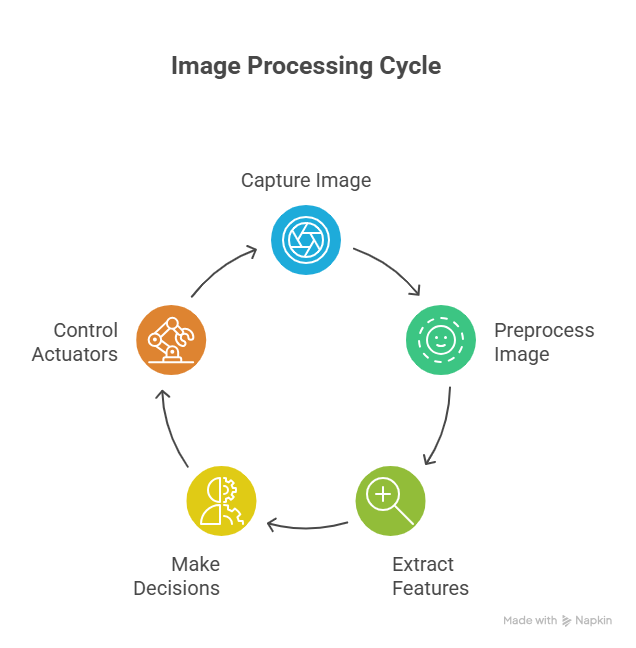

Machine vision refers to the ability of a robot to capture and process images to make decisions. It involves:

- Image Acquisition: Capturing visual data using cameras or sensors.

- Image Processing: Analyzing images to extract meaningful information.

- Decision Making: Using processed information to control actuators or trigger actions.

Example: A warehouse robot detects packages using a camera and sorts them based on size and color.
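A minimal sketch of the decision-making step in that warehouse example, assuming an earlier processing stage has already measured each package's bounding box in pixels and its mean RGB color. The function name `sort_package`, the 40,000-pixel size cut-off, and the hue bands are hypothetical values chosen for illustration; a real system would calibrate them for its own camera and lighting. Python's standard `colorsys` module converts RGB to hue, which separates "which color" from "how bright".

```python
import colorsys


def sort_package(width_px: int, height_px: int, rgb: tuple[int, int, int]) -> str:
    """Map a package's measured pixel size and mean RGB color to a bin label.

    All thresholds are hypothetical and would need calibration on a real robot.
    """
    # Size class from bounding-box area in pixels (hypothetical cut-off).
    size = "large" if width_px * height_px >= 40_000 else "small"

    # colorsys works on floats in [0, 1] and returns hue as a fraction of a full turn.
    r, g, b = (c / 255.0 for c in rgb)
    hue, sat, _val = colorsys.rgb_to_hsv(r, g, b)
    hue_deg = hue * 360.0

    # Hypothetical hue bands; low saturation means grey/white, where hue is meaningless.
    if sat < 0.25:
        color = "neutral"
    elif hue_deg < 30 or hue_deg >= 330:
        color = "red"
    elif hue_deg < 90:
        color = "yellow"
    elif hue_deg < 180:
        color = "green"
    elif hue_deg < 270:
        color = "blue"
    else:
        color = "other"
    return f"{size}-{color}"
```

For example, `sort_package(300, 200, (200, 30, 30))` returns `"large-red"`; the robot would then route that label to a physical bin.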

2. Key Components of Machine Vision in Robotics

| Component | Function | Examples |
| --- | --- | --- |
| Camera Sensor | Captures images or video | RGB cameras, depth cameras, stereo cameras |
| Image Processing Unit | Processes raw image data | Raspberry Pi, NVIDIA Jetson |
| Algorithms | Detect features, objects, and patterns | OpenCV, TensorFlow, YOLO |
| Actuation System | Executes decisions based on vision | Robot arm picking objects, mobile robot navigating |

3. Image Processing Techniques

- Preprocessing:
  - Noise Reduction: Gaussian blur, median filters.
  - Image Enhancement: Contrast and brightness adjustment, histogram equalization.
  - Resizing & Cropping: Prepare images for analysis.

- Segmentation:
  - Separate foreground objects from the background.
  - Techniques: Thresholding, edge detection, contour finding.

- Feature Extraction:
  - Detect edges, corners, textures, or patterns.
  - Examples: Canny edge detection; SIFT/SURF for feature matching.

- Object Detection & Recognition:
  - Locate and identify objects in an image.
  - Tools: OpenCV, TensorFlow, YOLO.
  - Use Case: Detecting obstacles for autonomous navigation.

- Tracking & Motion Analysis:
  - Track moving objects across frames.
  - Algorithms: Kalman filter, optical flow.
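The preprocessing and segmentation steps listed above can be sketched in plain Python on a grayscale image stored as rows of 0 to 255 intensities. This version assumes a 3x3 median filter (which removes isolated "salt" pixels better than a Gaussian blur) followed by a single global threshold. OpenCV provides these operations as `cv2.medianBlur` and `cv2.threshold`; the hand-written loops here only show what such calls compute, and the function names are illustrative. Border pixels are left unfiltered to keep the sketch short.

```python
from statistics import median

Image = list[list[int]]  # grayscale image: rows of 0-255 intensities


def median_filter_3x3(img: Image) -> Image:
    """Replace each interior pixel by the median of its 3x3 neighborhood.

    Border pixels are copied unchanged to keep the sketch short.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # copy so the input image is not modified
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = int(median(window))  # 9 values, so the median is one of them
    return out


def threshold(img: Image, t: int) -> Image:
    """Global thresholding: 1 (foreground) where intensity > t, else 0 (background)."""
    return [[1 if p > t else 0 for p in row] for row in img]
```

A single bright noise pixel in an otherwise dark image is removed by the median filter, so thresholding at 128 afterwards yields an empty mask instead of a false detection, while a genuinely bright region several pixels wide survives unchanged.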
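For tracking, a Kalman filter alternates a predict step (where the object should be now) with an update step (how much to trust the new measurement). The sketch below is a deliberately simplified scalar version that smooths one noisy coordinate, such as an object's x-position from frame to frame, under an assumed random-walk motion model; practical trackers usually keep a multi-dimensional state that includes velocity. The class name `ScalarKalman` and the default variances `q` and `r` are hypothetical tuning values.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter with a random-walk model (position assumed roughly constant).

    Default variances are hypothetical tuning values, in pixels squared.
    """
    x: float          # current estimate, e.g. object x-position in pixels
    p: float = 100.0  # variance of the estimate (large = unsure initial guess)
    q: float = 1.0    # process noise: how far the object may drift per frame
    r: float = 25.0   # measurement noise of the detector

    def step(self, z: float) -> float:
        """Fuse one new measurement z and return the updated estimate."""
        # Predict: the position is carried over, but uncertainty grows by q.
        self.p += self.q
        # Update: the Kalman gain k weighs the measurement against the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x
```

Raising `r` relative to `q` makes the estimate smoother but slower to follow real motion; lowering it makes the filter react faster but pass through more detector noise.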

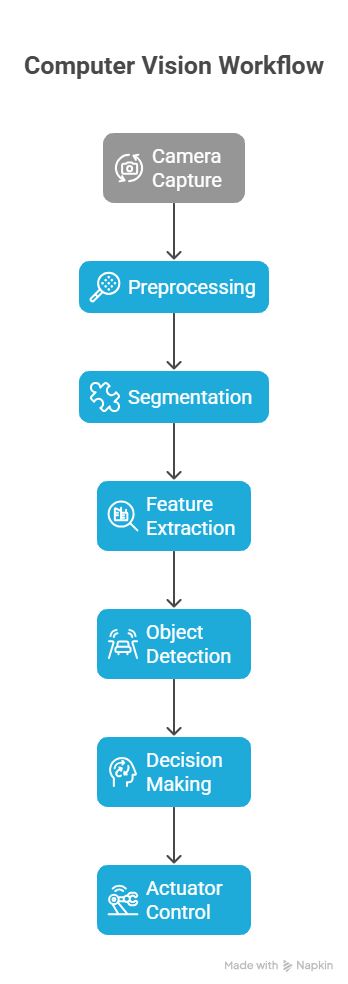

4. Machine Vision Pipeline for Robotics

Robots run this pipeline continuously: they acquire images, process them, identify features or objects, and make decisions in real time for navigation or manipulation.
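The pipeline described above can be sketched as a loop that runs the same stages on every frame. The stages are passed in as functions, so the same loop works whether frames come from a live camera or a recording. `run_pipeline` and the toy detector `brightest_pixel` are hypothetical names for illustration, not part of any library.

```python
from typing import Callable, Iterable, Optional

Frame = list[list[int]]          # grayscale frame: rows of 0-255 intensities
Target = Optional[tuple[int, int]]  # (x, y) of a detected object, or None


def brightest_pixel(frame: Frame) -> Target:
    """Toy detector: (x, y) of the brightest pixel, or None if nothing exceeds 128."""
    value, x, y = max((v, x, y) for y, row in enumerate(frame) for x, v in enumerate(row))
    return (x, y) if value > 128 else None


def run_pipeline(
    frames: Iterable[Frame],
    process: Callable[[Frame], Frame],
    detect: Callable[[Frame], Target],
    decide: Callable[[Target], str],
) -> list[str]:
    """Acquire -> process -> detect -> decide, once per frame; returns the commands."""
    commands = []
    for frame in frames:                 # acquisition: camera stream or recorded frames
        processed = process(frame)       # preprocessing / segmentation
        target = detect(processed)       # feature or object detection
        commands.append(decide(target))  # decision; a robot would forward this to actuators
    return commands
```

For example, with `process=lambda f: f` and `decide=lambda t: "approach" if t else "search"`, a frame containing one bright pixel yields `"approach"` and an all-dark frame yields `"search"`.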

5. Applications of Machine Vision in Robotics

| Application | Description | Real-World Example |
| --- | --- | --- |
| Autonomous Navigation | Detect obstacles, lanes, and landmarks | Self-driving cars |
| Industrial Inspection | Identify defects on assembly lines | Quality control in factories |
| Object Sorting & Manipulation | Detect and pick objects based on size, color, or shape | Warehouse robots |
| Surveillance & Security | Monitor environment and detect intrusions | Security robots |
| Gesture & Human Interaction | Recognize human gestures or postures | Service robots or assistive devices |

6. Beginner Project Example: Object Detection Robot

Objective: A robot detects and follows a colored object.

Components:

- Camera: USB RGB camera or Raspberry Pi Camera Module.

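- Controller: Raspberry Pi to run the image processing; an Arduino can be added as a motor controller, since most Arduino boards lack the resources to process camera images themselves.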

- Actuators: Servo motors to control robot motion.

Step-by-Step Implementation:

- Capture Image: Read frames from the camera in real time.

- Preprocessing: Convert to HSV color space, apply Gaussian blur.

- Segmentation: Threshold the image to isolate the object color.

- Feature Extraction: Find the object's contour and compute its centroid.

- Decision Making: Compute the direction to move the robot from the centroid's horizontal position in the frame.

- Actuation: Send motor commands to follow the object.
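A plain-Python sketch of the segmentation, feature-extraction, and decision steps for this color-following robot, assuming each frame is available as rows of (R, G, B) tuples. It uses the standard `colorsys` module rather than OpenCV so it runs anywhere; with OpenCV the corresponding calls would be `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`, `cv2.inRange`, and `cv2.moments`, bearing in mind that OpenCV's 8-bit HSV stores hue as 0 to 179 rather than 0 to 360 degrees. The function names, the example green band of 90 to 150 degrees, and the 10% deadband are hypothetical choices; a red object would need two hue bands because red wraps around 0 degrees.

```python
import colorsys
from typing import Optional

RGB = tuple[int, int, int]


def color_mask(image: list[list[RGB]], hue_lo: float, hue_hi: float,
               min_sat: float = 0.4, min_val: float = 0.2) -> list[list[int]]:
    """Segmentation: 1 where hue (degrees) is in [hue_lo, hue_hi] and the pixel is
    saturated and bright enough for its hue to be reliable, else 0."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            keep = hue_lo <= h * 360 <= hue_hi and s >= min_sat and v >= min_val
            mask_row.append(1 if keep else 0)
        mask.append(mask_row)
    return mask


def centroid(mask: list[list[int]]) -> Optional[tuple[float, float]]:
    """Feature extraction: mean (x, y) of foreground pixels (m10/m00, m01/m00)."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, m in enumerate(row):
            if m:
                sum_x += x
                sum_y += y
                count += 1
    return (sum_x / count, sum_y / count) if count else None


def steering_command(c: Optional[tuple[float, float]], width: int,
                     deadband: float = 0.1) -> str:
    """Decision: turn toward the object, drive forward when it is roughly centered."""
    if c is None:
        return "search"  # object not visible, e.g. rotate in place until it reappears
    # Horizontal offset of the centroid from the image center, as a fraction of width.
    offset = (c[0] - (width - 1) / 2) / width
    if offset < -deadband:
        return "left"
    if offset > deadband:
        return "right"
    return "forward"
```

The deadband stops the robot from oscillating left and right when the object is almost centered. Mapping the returned strings to motor outputs depends on the drive hardware and is not shown.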

curiositytech.in provides full Python scripts with OpenCV and detailed wiring diagrams for this project.

7. Tools & Libraries for Robotics Image Processing

| Tool / Library / Platform | Function |
| --- | --- |
| OpenCV | Image acquisition, processing, and computer vision tasks |
| TensorFlow / PyTorch | Deep learning-based object detection & recognition |
| ROS + cv_bridge | Convert between ROS image messages and OpenCV images so camera input can be processed inside ROS nodes |
| MATLAB | Image analysis, simulations, and prototyping |
| NVIDIA Jetson | Embedded hardware with GPU acceleration for AI and image processing |

Practical Tip: Start with OpenCV for basic image processing, then gradually integrate deep learning models for advanced recognition tasks.

8. Tips for Mastering Machine Vision in Robotics

- Understand image representation: pixels, color spaces, and transformations.

- Start with simple object detection using OpenCV.

- Learn filtering, segmentation, and edge detection techniques.

- Gradually implement deep learning for object recognition.

- Test algorithms in simulation (e.g., Gazebo or CoppeliaSim, formerly V-REP) before real-world deployment.
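As a small example of image representation, the sketch below treats an RGB image as rows (height) of (R, G, B) pixel tuples (width) with 8-bit channels and applies two basic transformations: a color-space conversion to grayscale using the ITU-R BT.601 luma weights, which are the weights OpenCV documents for its RGB-to-gray conversion, and a geometric horizontal flip. The function names are illustrative.

```python
Pixel = tuple[int, int, int]   # (R, G, B), each 0-255
RGBImage = list[list[Pixel]]   # rows (height) of pixels (width)


def to_grayscale(image: RGBImage) -> list[list[int]]:
    """Color-space conversion: BT.601 luma Y = 0.299 R + 0.587 G + 0.114 B."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in image]


def flip_horizontal(image: list[list]) -> list[list]:
    """Geometric transformation: mirror each row left to right."""
    return [row[::-1] for row in image]
```

Green carries the largest weight because human vision is most sensitive to it, so pure green maps to a much brighter gray than pure blue.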

Conclusion

Machine vision and image processing empower robots to perceive and interact intelligently with their surroundings. By mastering image acquisition, preprocessing, feature extraction, and object recognition, robotics engineers can create autonomous, intelligent systems. curiositytech.in provides tutorials, project guides, and simulations that make these skills practical and accessible, so learners can build real-world robotics applications with confidence.