Introduction

Building a machine learning model in 2025 is just the start. Much of the difference between a good model and an excellent one comes from hyperparameter tuning.

At Curiosity Tech (Nagpur, Wardha Road, Gajanan Nagar), we teach learners that hyperparameters control model behavior, and that tuning them properly is essential for high-performance ML models.

Think of hyperparameters as the dials on a machine. A small adjustment can drastically improve accuracy, reduce overfitting, and speed up convergence.

1. What Are Hyperparameters?

Definition: Hyperparameters are settings that are not learned from the data; they are chosen before training the model.

Examples:

- Number of trees in a Random Forest

- Learning rate in gradient boosting or neural networks

- Kernel type in SVM

- Number of clusters in K-Means

Difference from Model Parameters:

- Model parameters → Learned during training (e.g., the weights in Linear Regression)

- Hyperparameters → Set before training (e.g., the learning rate)
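A minimal sketch of the difference, using scikit-learn's SGDRegressor as a stand-in for linear regression trained by gradient descent (the toy data and its "true" weights are made up for illustration): the learning rate eta0 is a hyperparameter we pass in, while coef_ and intercept_ are model parameters that exist only after fit().

```python
import numpy as np
from sklearn.linear_model import SGDRegressor

# Toy data generated from known weights [2.0, -1.0, 0.5] plus a little noise
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
true_weights = np.array([2.0, -1.0, 0.5])
y = X @ true_weights + rng.normal(scale=0.1, size=200)

# Hyperparameters: chosen by us and passed to the constructor before training
model = SGDRegressor(learning_rate="constant", eta0=0.01, max_iter=1000, random_state=0)
print("Learning rate (hyperparameter):", model.get_params()["eta0"])

# Model parameters: learned from the data when fit() runs
model.fit(X, y)
print("Learned weights (parameters):", model.coef_)
print("Learned intercept (parameter):", model.intercept_)
```

The learned weights should land close to the true ones; changing eta0 changes how training proceeds, but eta0 itself is never updated by fit().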

2. Why Hyperparameter Tuning Is Important

- Improve model accuracy

- Reduce overfitting or underfitting

- Optimize training time

- Achieve production-ready performance

Scenario Storytelling: Arjun, a student at CuriosityTech Park, trained a Random Forest on customer churn data. The default hyperparameters gave 82% accuracy, but after he tuned the number of trees, max depth, and min samples split, accuracy rose to 91%, demonstrating the power of systematic tuning.
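To see how a single hyperparameter moves a model between underfitting and overfitting, scikit-learn's validation_curve trains the model at several values and reports training and validation scores. A minimal sketch on synthetic data (make_classification stands in for churn data here; the depth values are illustrative):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import validation_curve

# Synthetic stand-in for a churn-style dataset (not the data from the story above)
X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=42)

depths = [1, 2, 4, 8, 16]
train_scores, val_scores = validation_curve(
    RandomForestClassifier(n_estimators=50, random_state=42),
    X,
    y,
    param_name="max_depth",
    param_range=depths,
    cv=5,
    scoring="accuracy",
)

# Each row corresponds to one max_depth value, each column to one CV fold
for depth, tr, va in zip(depths, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    print(f"max_depth={depth:>2}: train={tr:.3f}  validation={va:.3f}")
```

Typically, very shallow trees score low on both training and validation data (underfitting), while deeper trees push training accuracy toward 1.0 without a matching gain in validation accuracy (overfitting). The exact numbers depend on the data.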

3. Common Hyperparameter Tuning Techniques

| Technique | Description | Pros | Cons |
|---|---|---|---|
| Grid Search | Exhaustively tests every combination in the grid | Guaranteed to find the best combination within the grid | Computationally expensive |
| Random Search | Randomly samples a fixed number of combinations | Faster for large search spaces | May miss the best combination |
| Bayesian Optimization | Uses past results to choose the next hyperparameter set to try | Efficient, smarter search | More complex setup |
| Manual Tuning | Adjusts parameters based on intuition | Useful for small datasets | Time-consuming, less systematic |

4. Stepwise Hyperparameter Tuning Workshop

Step 1: Choose the Model

Select a suitable algorithm for your task. Example: Random Forest for classification.

Step 2: Identify Hyperparameters

| Model | Important Hyperparameters |
|---|---|
| Random Forest | n_estimators, max_depth, min_samples_split, max_features |
| SVM | C, kernel, gamma |
| Gradient Boosting | learning_rate, n_estimators, max_depth |

At Curiosity Tech, students learn which hyperparameters most affect performance and why.
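Before tuning, it helps to know each model's starting point. A minimal sketch, using scikit-learn's standard get_params() method, that prints the current default values of the hyperparameters in the table above (defaults can change between scikit-learn versions, so trust what your installed version reports):

```python
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.svm import SVC

# Key hyperparameters per model, taken from the table above
key_hyperparameters = {
    RandomForestClassifier: ["n_estimators", "max_depth", "min_samples_split", "max_features"],
    SVC: ["C", "kernel", "gamma"],
    GradientBoostingClassifier: ["learning_rate", "n_estimators", "max_depth"],
}

defaults_by_model = {}
for model_cls, names in key_hyperparameters.items():
    all_params = model_cls().get_params()  # every constructor argument with its default value
    defaults_by_model[model_cls.__name__] = {name: all_params[name] for name in names}
    print(model_cls.__name__, defaults_by_model[model_cls.__name__])
```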

Step 3: Grid Search Example

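```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data; replace with your own X_train / y_train
X, y = make_classification(n_samples=500, n_features=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 3 x 3 x 3 = 27 combinations, each evaluated with 5-fold CV (135 fits)
param_grid = {
    "n_estimators": [50, 100, 200],
    "max_depth": [5, 10, 20],
    "min_samples_split": [2, 5, 10],
}

grid_search = GridSearchCV(RandomForestClassifier(random_state=42), param_grid, cv=5, scoring="accuracy")
grid_search.fit(X_train, y_train)

print("Best Hyperparameters:", grid_search.best_params_)
print("Best CV Accuracy:", grid_search.best_score_)  # mean accuracy across the 5 validation folds
```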

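Observation: Grid Search evaluates every combination in the grid (3 × 3 × 3 = 27 here, each with 5-fold cross-validation) and keeps the one with the highest mean validation score. Note that best_score_ is that mean cross-validated accuracy, not accuracy on a held-out test set.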
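Why Grid Search gets expensive is easy to check with scikit-learn's ParameterGrid, which expands a grid into its individual combinations in the same way GridSearchCV does internally. A small sketch using the grid from Step 3; the extra max_features values in bigger_grid are illustrative:

```python
from sklearn.model_selection import ParameterGrid

param_grid = {
    "n_estimators": [50, 100, 200],
    "max_depth": [5, 10, 20],
    "min_samples_split": [2, 5, 10],
}
combinations = list(ParameterGrid(param_grid))
n_folds = 5

print("Combinations:", len(combinations))                    # 27
print("Model fits with 5-fold CV:", len(combinations) * n_folds)  # 135

# Adding one more hyperparameter with 3 values multiplies the work by 3
bigger_grid = {**param_grid, "max_features": ["sqrt", "log2", None]}
print("Combinations with max_features:", len(ParameterGrid(bigger_grid)))  # 81
```

With the default refit=True, GridSearchCV also performs one final fit of the best combination on the full training set.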

Step 4: Random Search Example

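```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV, train_test_split

# Synthetic stand-in data; replace with your own X_train / y_train
X, y = make_classification(n_samples=500, n_features=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 4 x 4 x 3 = 48 possible combinations; only n_iter of them are evaluated
param_dist = {
    "n_estimators": [50, 100, 200, 300],
    "max_depth": [5, 10, 20, None],  # None: expand nodes until leaves are pure or below min_samples_split
    "min_samples_split": [2, 5, 10],
}

random_search = RandomizedSearchCV(
    RandomForestClassifier(random_state=42),
    param_distributions=param_dist,
    n_iter=10,        # sample 10 combinations instead of all 48
    cv=5,
    scoring="accuracy",
    random_state=42,  # makes the sampled combinations reproducible
)
random_search.fit(X_train, y_train)

print("Best Hyperparameters:", random_search.best_params_)
print("Best CV Accuracy:", random_search.best_score_)
```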

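Insight: Random Search tries a fixed number of combinations (n_iter=10 of the 48 possible here), so its cost stays under control as the parameter space grows, and it often still finds near-optimal settings.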
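Random Search is not limited to lists: param_distributions also accepts distribution objects from scipy.stats, so each trial can draw any integer in a range instead of one of a few preset values. A minimal sketch on synthetic data; the ranges are illustrative assumptions, not recommended settings:

```python
from scipy.stats import randint
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

# Synthetic stand-in data (an assumption; substitute your own training split)
X_train, y_train = make_classification(n_samples=400, n_features=20, random_state=0)

# randint(low, high) draws integers from low up to high - 1
param_dist = {
    "n_estimators": randint(50, 301),
    "max_depth": randint(3, 21),
    "min_samples_split": randint(2, 11),
}

random_search = RandomizedSearchCV(
    RandomForestClassifier(random_state=0),
    param_distributions=param_dist,
    n_iter=10,  # fixed budget: 10 sampled combinations, however large the space is
    cv=3,
    scoring="accuracy",
    random_state=0,
)
random_search.fit(X_train, y_train)

print("Best Hyperparameters:", random_search.best_params_)
print("Best CV Accuracy:", round(random_search.best_score_, 3))
```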

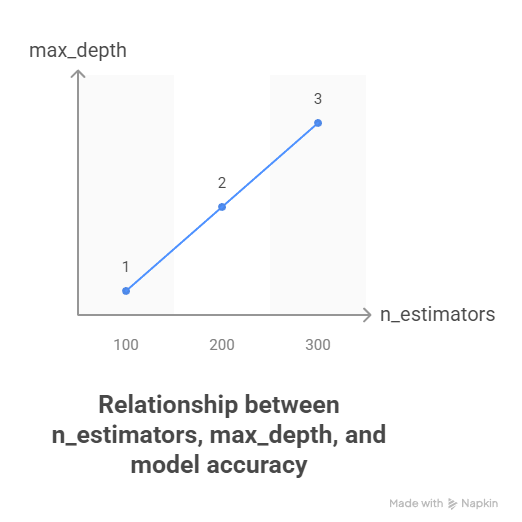

5. Visualizing Hyperparameter Impact

A heatmap of Grid Search results shows how two hyperparameters interact:

- X-axis → n_estimators

- Y-axis → max_depth

- Cell color → mean cross-validated accuracy

CuriosityTech students often visualize hyperparameter grids to understand which parameters have the greatest impact.
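One way to build such a heatmap, assuming pandas and matplotlib are installed: run a two-parameter Grid Search, pivot cv_results_ into a max_depth × n_estimators table of mean cross-validated accuracy, and plot it. The synthetic data, the small grid, and the output filename rf_heatmap.png are assumptions chosen to keep the example quick:

```python
import matplotlib

matplotlib.use("Agg")  # render to a file; drop this line in a notebook
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X_train, y_train = make_classification(n_samples=400, n_features=20, random_state=0)

grid_search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    {"n_estimators": [50, 100, 200], "max_depth": [5, 10, 20]},
    cv=3,
    scoring="accuracy",
)
grid_search.fit(X_train, y_train)

# cv_results_ has one row per combination; pivot it into rows=max_depth, columns=n_estimators
results = pd.DataFrame(grid_search.cv_results_)
heatmap = results.pivot_table(
    index="param_max_depth", columns="param_n_estimators", values="mean_test_score"
)

fig, ax = plt.subplots()
im = ax.imshow(heatmap.values, cmap="viridis", origin="lower")
ax.set_xticks(range(len(heatmap.columns)))
ax.set_xticklabels(heatmap.columns)
ax.set_yticks(range(len(heatmap.index)))
ax.set_yticklabels(heatmap.index)
ax.set_xlabel("n_estimators")
ax.set_ylabel("max_depth")
fig.colorbar(im, ax=ax, label="Mean CV accuracy")
fig.savefig("rf_heatmap.png")
```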

6. Advanced Tips for Hyperparameter Tuning

- Nested Cross-Validation: Tune hyperparameters in an inner loop and evaluate in an outer loop, so the reported score is not inflated by the tuning itself

- Early Stopping: Stop training when the validation error stops improving (for Gradient Boosting/Neural Networks)

- Learning Rate Scheduling: Adjust the learning rate dynamically during training

- Regularization Parameters: Tune regularization strength, such as L1/L2 penalties in linear models or dropout in neural networks
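Nested cross-validation can be sketched in scikit-learn by passing a GridSearchCV object to cross_val_score: the inner GridSearchCV tunes on each outer training split, and the outer loop scores the tuned model on data it never saw during tuning. A minimal sketch on synthetic data; the grid and fold counts are illustrative:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score

X, y = make_classification(n_samples=300, n_features=20, random_state=0)

# Inner loop: picks hyperparameters using only the training part of each outer fold
inner_cv = KFold(n_splits=3, shuffle=True, random_state=0)
tuned_model = GridSearchCV(
    RandomForestClassifier(n_estimators=50, random_state=0),
    {"max_depth": [3, 5, None], "min_samples_split": [2, 10]},
    cv=inner_cv,
    scoring="accuracy",
)

# Outer loop: scores each tuned model on a fold that played no part in tuning
outer_cv = KFold(n_splits=5, shuffle=True, random_state=1)
nested_scores = cross_val_score(tuned_model, X, y, cv=outer_cv, scoring="accuracy")
print(f"Nested CV accuracy: {nested_scores.mean():.3f} +/- {nested_scores.std():.3f}")
```

The nested score is usually a more honest estimate than grid_search.best_score_, which is computed on the same folds used to choose the hyperparameters.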

Curiosity Tech Insight: “In real-world projects, hyperparameter tuning is an iterative workshop rather than a one-time process.”
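For the early-stopping tip, scikit-learn's GradientBoostingClassifier has built-in support: with n_iter_no_change set, it holds out validation_fraction of the training data and stops adding trees once the validation score fails to improve by at least tol for that many rounds. A minimal sketch on synthetic data with illustrative settings:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

model = GradientBoostingClassifier(
    n_estimators=1000,        # upper bound on boosting rounds
    learning_rate=0.1,
    validation_fraction=0.2,  # hold out 20% of the training data to monitor progress
    n_iter_no_change=10,      # stop after 10 rounds without sufficient improvement
    tol=1e-4,                 # minimum improvement that counts
    random_state=0,
)
model.fit(X, y)

# n_estimators_ is the number of rounds actually kept after early stopping
print("Boosting rounds used:", model.n_estimators_, "of", model.n_estimators)
```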

7. Real-World Applications

| Algorithm | Hyperparameters | Business Impact |
|---|---|---|
| Random Forest | n_estimators, max_depth | Improves churn prediction |
| XGBoost | learning_rate, max_depth | Optimizes sales forecasting |
| SVM | C, gamma | Enhances fraud detection accuracy |
| Neural Networks | batch_size, epochs, learning_rate | Improves image recognition models |

Students at Curiosity Tech often apply these techniques to datasets from finance, e-commerce, and healthcare, ensuring practical mastery.

8. Common Mistakes in Hyperparameter Tuning

| Mistake | Consequence | Correct Approach |
|---|---|---|
| Using default hyperparameters | Suboptimal performance | Systematic tuning via Grid or Random Search |
| Overfitting to the validation set | Poor generalization | Nested cross-validation |
| Ignoring training time | Long computation times | Reduce the parameter search space |
| Not scaling features | Misleading results for scale-sensitive models such as SVM | Scale features inside a pipeline so preprocessing is part of tuning |
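The scaling mistake can be avoided by putting the scaler and the model in one scikit-learn Pipeline and tuning the pipeline as a whole: within every cross-validation split the scaler is fit only on that split's training folds, so no information leaks from the validation fold. A minimal sketch tuning the SVM's C and gamma; the synthetic data and the artificially rescaled first feature are assumptions for illustration:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X_train, y_train = make_classification(n_samples=400, n_features=20, random_state=0)
X_train[:, 0] *= 1000  # exaggerate one feature's scale to mimic unscaled real-world data

# The scaler is a pipeline step, so it is refit on the training folds of every CV split
pipe = Pipeline([("scaler", StandardScaler()), ("svc", SVC(kernel="rbf"))])

# Hyperparameters of a pipeline step are addressed as <step name>__<parameter>
param_grid = {"svc__C": [0.1, 1, 10], "svc__gamma": ["scale", 0.01, 0.1]}

grid_search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
grid_search.fit(X_train, y_train)

print("Best Hyperparameters:", grid_search.best_params_)
print("Best CV Accuracy:", round(grid_search.best_score_, 3))
```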

9. Key Takeaways

- Hyperparameters control model learning behavior and performance.

- Systematic tuning improves accuracy, reduces overfitting, and ensures production-ready models.

- Grid Search, Random Search, and Bayesian Optimization are industry-standard techniques.

- Visualizing hyperparameter impact aids understanding.

At Curiosity Tech Nagpur, our learners conduct hands-on workshops, adjusting hyperparameters on real datasets to observe real-time effects.

Conclusion

Hyperparameter tuning is the final lever to extract maximum performance from machine learning models. Mastery allows ML engineers to:

- Optimize models for production

- Reduce errors and improve stability

- Handle large-scale datasets efficiently

Curiosity Tech provides guided workshops, real-world datasets, and expert mentorship, preparing learners for 2025 ML challenges. Contact +91-9860555369 or contact@curiositytech.in to join a hyperparameter tuning workshop today.