Introduction

Modern cloud applications often require scalable, containerized deployments without managing the underlying servers. Google Cloud Run is a fully managed, serverless platform for running containers in GCP. It abstracts infrastructure management, auto-scales based on demand, and integrates seamlessly with other GCP services.

At Curiosity Tech, engineers are trained to leverage Cloud Run to deploy web applications, APIs, and microservices efficiently, while focusing on security, scalability, and cost optimization.

What is Google Cloud Run?

Cloud Run enables developers to deploy containerized applications that can serve HTTP requests or events. It supports any language or library that can run in a container, making it highly flexible.

Key Features:

- Fully managed: No server provisioning required

- Event-driven: Supports HTTP requests, Pub/Sub triggers, and Cloud Storage events

- Auto-scaling: Scales with incoming traffic, down to zero when idle

- Secure: Integrated IAM, HTTPS endpoints, and VPC connectivity

- Portable: Packages applications as standard container images that run on any container-compatible platform

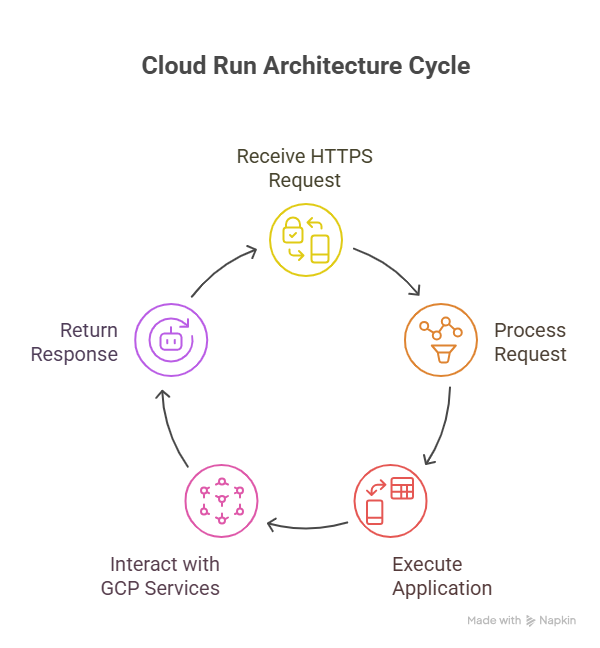

Diagram Concept: Cloud Run Architecture

Why Use Cloud Run?

| Benefit | Explanation |
| --- | --- |
| Serverless Container Deployment | Focus on application code while GCP manages the infrastructure. |
| Scalable on Demand | Automatic scaling from zero to thousands of instances. |
| Cost-Efficient | Pay only for the compute resources used during execution. |
| Portability | The same container image can run locally, on GKE, or on other cloud providers. |
| Secure by Default | HTTPS endpoints, IAM integration, and optional VPC connectivity. |

Core Concepts in Cloud Run

- Service: Logical deployment unit that represents your containerized application.

- Revision: Immutable snapshot of a service version.

- Traffic Splitting: Route a percentage of requests to specific revisions (useful for canary deployments).

- Concurrency: Number of requests a single container instance can handle simultaneously.

- Scaling: Automatically scales from 0 to N instances based on incoming requests.
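To make traffic splitting more concrete, here is a minimal conceptual sketch of percentage-based routing between two revisions. It is not how Cloud Run implements routing internally; the revision names, the 90/10 split, and the `pickRevision` and `simulate` helpers are all hypothetical and exist only for illustration.

```javascript
// Conceptual sketch only: Cloud Run performs traffic splitting for you.
// Revision names and percentages below are hypothetical.
const trafficSplit = [
  { revision: "my-app-00001", percent: 90 }, // stable revision
  { revision: "my-app-00002", percent: 10 }, // canary revision
];

// Pick a revision for one request. `roll` is a number in [0, 1),
// passed in explicitly so the function is deterministic and testable.
function pickRevision(split, roll) {
  const total = split.reduce((sum, entry) => sum + entry.percent, 0);
  if (total !== 100) {
    throw new Error(`Traffic percentages must sum to 100, got ${total}`);
  }
  let threshold = 0;
  for (const entry of split) {
    threshold += entry.percent;
    if (roll * 100 < threshold) {
      return entry.revision;
    }
  }
  // Fallback for rolls extremely close to 1.
  return split[split.length - 1].revision;
}

// Route `requests` simulated requests and count how many reach each revision.
function simulate(split, requests) {
  const counts = {};
  for (let i = 0; i < requests; i++) {
    const revision = pickRevision(split, Math.random());
    counts[revision] = (counts[revision] || 0) + 1;
  }
  return counts;
}

console.log(simulate(trafficSplit, 10000));
```

With a 90/10 split, roughly one request in ten should reach the canary revision, which is what makes this pattern useful for testing a new revision on a small share of real traffic.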

Step-by-Step Deployment on Cloud Run

Step 1: Containerize Your Application

- Write your application in any language (Node.js, Python, Go, etc.).

- Create a Dockerfile to package the app.

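```dockerfile
# Example Node.js Dockerfile
FROM node:18

# Work inside /app in the image
WORKDIR /app

# Copy the dependency manifests first so the install layer is cached between builds
COPY package*.json ./
RUN npm install

# Copy the application source
COPY . .

# Start the application
CMD ["node", "index.js"]
```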
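The Dockerfile starts `index.js`, which is not shown in this guide. As a minimal sketch, assuming a dependency-free HTTP server is enough for a first deployment, the file could look like the following. Cloud Run tells the container which port to listen on through the `PORT` environment variable; the `handleRequest` name and the JSON response shape are assumptions made for this example.

```javascript
// index.js: minimal HTTP server sketch for Cloud Run (illustrative, not production-ready).
const http = require("node:http");

// Request handler kept separate so it can be exercised without opening a socket.
function handleRequest(req, res) {
  res.writeHead(200, { "Content-Type": "application/json" });
  res.end(JSON.stringify({ status: "ok", path: req.url }));
}

// Cloud Run passes the port to listen on through the PORT environment variable.
// In this sketch the server starts only when PORT is set.
// To run it locally: PORT=8080 node index.js
if (process.env.PORT) {
  const port = Number(process.env.PORT);
  // Listen on all interfaces so the platform can reach the container.
  http.createServer(handleRequest).listen(port, "0.0.0.0", () => {
    console.log(`Listening on port ${port}`);
  });
}
```

Keeping the handler as a plain function makes it easy to unit-test before building the image.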

Step 2: Push Container to Artifact Registry

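```shell
# One-time setup: let Docker authenticate to Artifact Registry in this region
gcloud auth configure-docker us-central1-docker.pkg.dev
docker build -t us-central1-docker.pkg.dev/my-project/my-repo/my-app:latest .
docker push us-central1-docker.pkg.dev/my-project/my-repo/my-app:latest
```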

Step 3: Deploy to Cloud Run

```shell
gcloud run deploy my-app \
  --image us-central1-docker.pkg.dev/my-project/my-repo/my-app:latest \
  --platform managed \
  --region us-central1 \
  --allow-unauthenticated
```

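- `--platform managed`: Deploys to the fully managed (serverless) Cloud Run platform.

- `--allow-unauthenticated`: Makes the service publicly reachable. To restrict access with IAM instead, deploy with `--no-allow-unauthenticated`.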

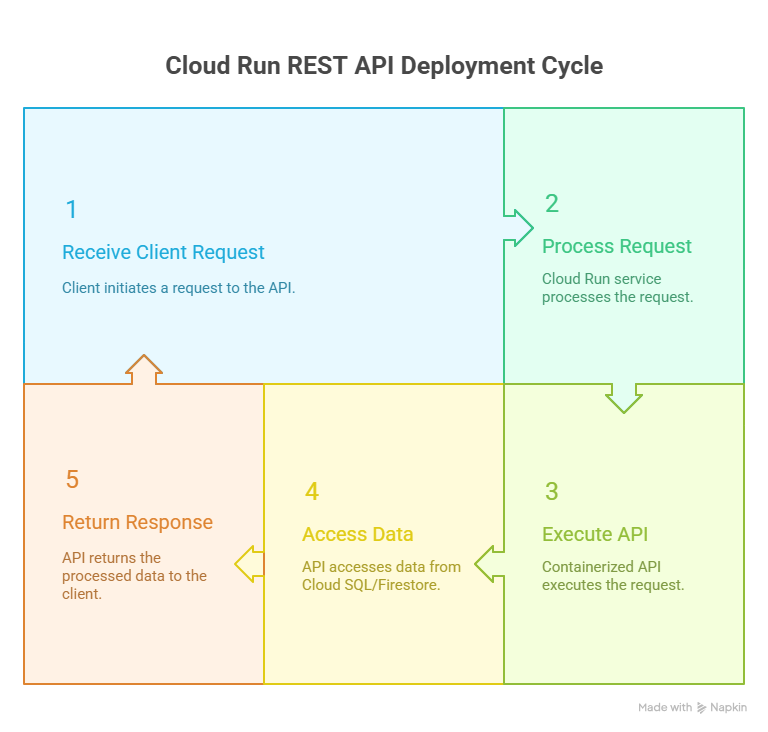

Practical Example: Deploying a REST API

Scenario: Deploy a simple API that manages a TODO list.

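- Containerize a Node.js API that exposes two endpoints: GET /todos and POST /todos.

- Push the container image to Artifact Registry.

- Deploy to Cloud Run, which auto-scales the service by default.

- Secure the API with IAM so that only authorized callers can invoke it, for example by granting them the Cloud Run Invoker role (roles/run.invoker).

- Integrate with Cloud SQL for persistent storage.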
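The routing logic for the two endpoints can be sketched as a plain function, independent of any web framework. This is only an illustration under stated assumptions: the in-memory store stands in for Cloud SQL, and `createTodoStore`, `handleTodoRequest`, and the `title` and `done` fields are hypothetical names not taken from the scenario.

```javascript
// In-memory store for illustration only; the scenario uses Cloud SQL for persistence.
function createTodoStore() {
  const todos = [];
  let nextId = 1;
  return {
    list: () => todos.slice(),
    add: (title) => {
      const todo = { id: nextId++, title, done: false };
      todos.push(todo);
      return todo;
    },
  };
}

// Map an HTTP method, path, and raw JSON body to a status code and response body.
function handleTodoRequest(store, method, path, rawBody) {
  if (path !== "/todos") {
    return { status: 404, body: { error: "Not found" } };
  }
  if (method === "GET") {
    return { status: 200, body: store.list() };
  }
  if (method === "POST") {
    let payload;
    try {
      payload = JSON.parse(rawBody || "");
    } catch (err) {
      return { status: 400, body: { error: "Body must be valid JSON" } };
    }
    if (!payload || typeof payload.title !== "string" || payload.title.trim() === "") {
      return { status: 400, body: { error: "Field 'title' is required" } };
    }
    return { status: 201, body: store.add(payload.title.trim()) };
  }
  return { status: 405, body: { error: "Method not allowed" } };
}

const store = createTodoStore();
console.log(handleTodoRequest(store, "POST", "/todos", '{"title":"Write Dockerfile"}'));
console.log(handleTodoRequest(store, "GET", "/todos"));
```

Because an in-memory list disappears whenever an instance is stopped or replaced, and is not shared between instances, a real deployment needs the external database the scenario calls for.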

Diagram Concept: Cloud Run REST API Architecture

Advanced Features & Best Practices

- Traffic Splitting & Canary Deployments: Deploy multiple revisions and split traffic gradually to test new features.

- Environment Variables & Secrets: Use Cloud Secret Manager to store sensitive information like API keys.

- Concurrency Optimization: Adjust the `--concurrency` setting to optimize cost vs. performance.

- Monitoring & Logging: Integrate with Cloud Monitoring and Cloud Logging to track API performance and errors.

- CI/CD Integration: Automate deployments using Cloud Build to push container images and deploy to Cloud Run.
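On the application side, two of these practices can be sketched in a few lines. The example below assumes a secret has been exposed to the container as an environment variable and that logs are written to stdout as JSON so Cloud Logging can parse them. The variable names `API_KEY` and `LOG_LEVEL` and the helper names `loadConfig` and `formatLog` are hypothetical.

```javascript
// Read required settings from environment variables and fail fast if one is missing.
// API_KEY and LOG_LEVEL are hypothetical names chosen for this sketch.
function loadConfig(env) {
  const apiKey = env.API_KEY;
  if (!apiKey) {
    throw new Error("Missing required environment variable: API_KEY");
  }
  return {
    apiKey,
    logLevel: env.LOG_LEVEL || "info",
    // Cloud Run sets PORT; 8080 is used here as a local fallback.
    port: Number(env.PORT || 8080),
  };
}

// Build one structured log line. Cloud Logging reads JSON written to stdout
// and treats the "severity" and "message" fields specially.
function formatLog(severity, message, fields = {}) {
  return JSON.stringify({ severity, message, ...fields });
}

const config = loadConfig({ API_KEY: "example-value", LOG_LEVEL: "debug" });
// Log only whether the secret is present, never its value.
console.log(formatLog("INFO", "Configuration loaded", {
  apiKeyPresent: Boolean(config.apiKey),
  logLevel: config.logLevel,
  port: config.port,
}));
```

Failing at startup when a secret is missing surfaces a misconfigured revision immediately, rather than on the first request that needs the value.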

Performance & Cost Optimization

| Aspect | Recommendation |
| --- | --- |
| Scaling | Use auto-scaling; scale to zero during low traffic to save cost. |
| Concurrency | Set concurrency >1 to maximize resource efficiency. |
| Container Size | Keep images lightweight to reduce cold start latency. |
| Regional Deployment | Deploy near your users to minimize latency. |
| Resource Limits | Set memory and CPU limits to prevent over-provisioning. |
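To see why the concurrency setting matters for cost, a rough back-of-the-envelope estimate helps. The sketch below approximates the number of requests in flight as request rate times average latency, then divides by the per-instance concurrency. It is a simplification for intuition only, not Cloud Run's actual autoscaling algorithm, and the traffic figures are made up.

```javascript
// Rough estimate: instances ≈ ceil(requests in flight / concurrency),
// where requests in flight ≈ requests per second × average latency in seconds.
// This is an approximation for intuition, not the platform's autoscaler.
function estimateInstances(requestsPerSecond, avgLatencySeconds, concurrency) {
  if (concurrency < 1) {
    throw new RangeError("concurrency must be at least 1");
  }
  const inFlight = requestsPerSecond * avgLatencySeconds;
  return Math.ceil(inFlight / concurrency);
}

// Hypothetical workload: 200 requests per second, 250 ms average latency.
console.log(estimateInstances(200, 0.25, 1));  // 50
console.log(estimateInstances(200, 0.25, 10)); // 5
console.log(estimateInstances(200, 0.25, 80)); // 1
console.log(estimateInstances(0, 0.25, 80));   // 0 (no traffic, scaled to zero)
```

The same workload needs far fewer instances as concurrency rises, provided each instance has enough CPU and memory to serve that many requests at once.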

Practical Scenario: Event-Driven Microservice

Scenario: Process uploaded images via Cloud Storage triggers.

- Trigger: Image uploaded to Cloud Storage.

- Cloud Run Service: Receives the event and processes the image (for example, resizing or watermarking).

- Output: Stores processed image in another bucket.

- Notification: Publish completion status via Pub/Sub or email.

This demonstrates an event-driven, serverless architecture built on Cloud Run, with no servers to manage.
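As a rough sketch of the Cloud Run service in this scenario, the function below decides how to handle one incoming event. It assumes the trigger is delivered through Eventarc as a CloudEvent over HTTP, with the event type in the `ce-type` header and the object's `bucket` and `name` in the JSON body. The output bucket name, the `processed/` prefix, and the `planImageJob` helper are hypothetical, and the actual image processing and upload are left out.

```javascript
// Event type assumed for "object finalized" (upload completed) events.
const FINALIZED = "google.cloud.storage.object.v1.finalized";

// Decide what to do with one incoming event.
// `headers` are lower-cased HTTP headers; `body` is the parsed JSON payload.
function planImageJob(headers, body, outputBucket) {
  if (headers["ce-type"] !== FINALIZED) {
    return { action: "ignore", reason: "unsupported event type" };
  }
  if (!body || !body.bucket || !body.name) {
    return { action: "ignore", reason: "missing bucket or object name" };
  }
  if (!/\.(png|jpe?g|webp)$/i.test(body.name)) {
    return { action: "ignore", reason: "not an image" };
  }
  // Writing results back to the source bucket would trigger the service again.
  if (body.bucket === outputBucket) {
    return { action: "ignore", reason: "event came from the output bucket" };
  }
  return {
    action: "process",
    source: `gs://${body.bucket}/${body.name}`,
    destination: `gs://${outputBucket}/processed/${body.name}`,
  };
}

// Hypothetical event for an uploaded photo.
console.log(planImageJob(
  { "ce-type": FINALIZED },
  { bucket: "uploads-bucket", name: "photos/cat.jpg" },
  "processed-bucket"
));
```

Using a separate output bucket, as the scenario describes, avoids a loop in which each processed image triggers another processing run.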

Conclusion

Mastering Google Cloud Run allows cloud engineers to deploy scalable, secure, and containerized applications with minimal infrastructure management. By understanding containerization, deployment strategies, traffic management, and event-driven integrations, engineers can build robust serverless applications ready for enterprise workloads.

At Curiosity Tech, hands-on projects like Cloud Run deployments help engineers gain real-world experience, prepare for certifications, and develop production-ready cloud applications.