Introduction

In the fast-evolving world of deep learning, training models from scratch can be time-consuming and resource-intensive. Transfer learning addresses this challenge by allowing AI engineers to leverage pretrained models and adapt them to new tasks efficiently.

At CuriosityTech.in, learners in Nagpur use transfer learning to build image classifiers, NLP models, and multimodal AI applications, gaining both theoretical understanding and hands-on project experience that prepares them for AI careers.

1. What is Transfer Learning?

Transfer learning is the process of taking a model trained on one task and adapting it to a different but related task.

Key Advantages:

- Reduces training time

- Requires less data

- Often improves model performance, especially when the target dataset is small

Analogy: Learning French becomes easier if you already know Spanish—the base knowledge accelerates new learning.

Core Concept:

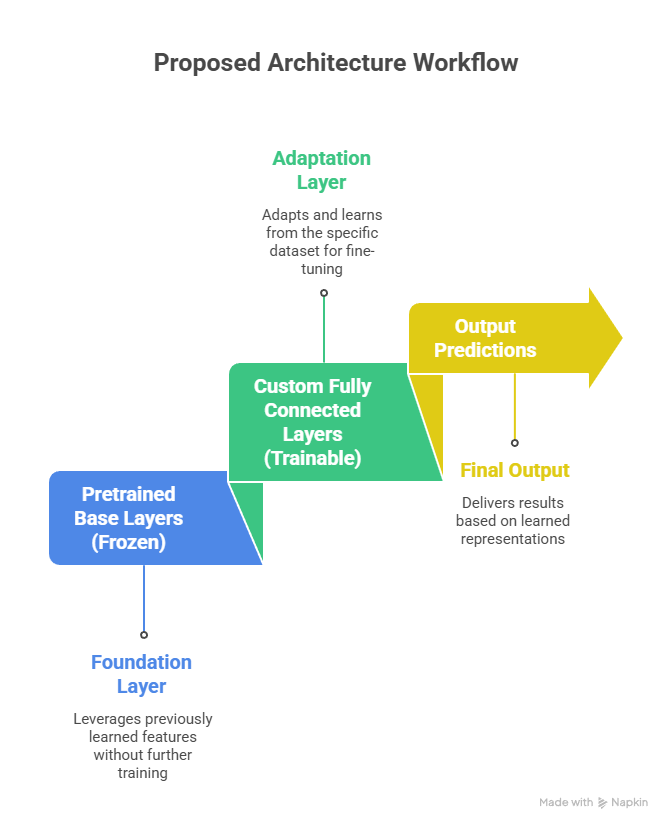

Pretrained Model (source task) → Fine-Tuning → Target Task Model

2. How Transfer Learning Works

- Select Pretrained Model: Models trained on large datasets like ImageNet, COCO, or Wikipedia

  - Examples: VGG16, ResNet50, BERT, GPT, EfficientNet

- Freeze Layers: Keep initial layers frozen to retain learned features

- Replace Output Layer: Adapt the final layers for the new target task

- Fine-Tune: Train selective layers on the new dataset to optimize performance
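
The freeze and replace steps above can be sanity-checked on a toy network before downloading a large pretrained model. The following is a minimal sketch, not a real transfer learning setup: `toy_base` is a hypothetical, untrained stand-in for a pretrained feature extractor, and the function names are illustrative. It freezes the base, attaches a new two-class head, and counts how many parameters remain trainable.

```python
from tensorflow.keras import layers, models


def build_toy_transfer_model(num_classes=2):
    # Stand-in for a pretrained feature extractor (assumption: in practice this
    # would be ResNet50, VGG16, etc. loaded with pretrained weights).
    base = models.Sequential(
        [
            layers.Input(shape=(32, 32, 3)),
            layers.Conv2D(8, 3, activation="relu"),
            layers.Conv2D(16, 3, activation="relu"),
            layers.GlobalAveragePooling2D(),
        ],
        name="toy_base",
    )

    # Freeze layers: the base's weights are excluded from gradient updates.
    base.trainable = False

    # Replace output layer: a new head sized for the target task.
    head = layers.Dense(num_classes, activation="softmax", name="new_head")

    inputs = layers.Input(shape=(32, 32, 3))
    features = base(inputs, training=False)  # run the frozen base in inference mode
    outputs = head(features)
    return models.Model(inputs, outputs, name="toy_transfer")


def count_params(weights):
    # Total number of scalar values across a list of weight variables.
    return sum(w.numpy().size for w in weights)


model = build_toy_transfer_model()
print("trainable:", count_params(model.trainable_weights))
print("frozen:", count_params(model.non_trainable_weights))
```

With the base frozen, only the parameters of the new head are updated during training; everything inside `toy_base` stays fixed.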

3. Practical Example: Image Classification

Objective: Use a pretrained ResNet50 model to classify cats and dogs.

from tensorflow.keras.applications import ResNet50
from tensorflow.keras.applications.resnet50 import preprocess_input
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Load ResNet50 with ImageNet weights, without its original 1000-class output layer
base_model = ResNet50(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze base layers so only the new head is trained
for layer in base_model.layers:
    layer.trainable = False

# Add custom layers for the two-class (cat vs. dog) task
x = base_model.output
x = GlobalAveragePooling2D()(x)
x = Dense(1024, activation='relu')(x)
predictions = Dense(2, activation='softmax')(x)
model = Model(inputs=base_model.input, outputs=predictions)

# Compile
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Data preprocessing: use ResNet50's own preprocess_input rather than a plain 1/255
# rescale, so inputs match what the pretrained weights expect.
# flow_from_directory expects one subfolder per class inside train_data/.
train_datagen = ImageDataGenerator(preprocessing_function=preprocess_input)
train_generator = train_datagen.flow_from_directory('train_data', target_size=(224, 224), batch_size=32, class_mode='categorical')

# Train the new head
model.fit(train_generator, epochs=10)

Observation: Students at CuriosityTech see that accuracy improves quickly compared to training from scratch, demonstrating the power of transfer learning.

4. Applications of Transfer Learning

| Domain | Examples |
| --- | --- |
| Computer Vision | Image classification, object detection, facial recognition |
| NLP | Sentiment analysis, text classification, chatbots |
| Healthcare | Disease detection from X-rays, MRI scans |
| Audio Processing | Speech recognition, emotion detection |
| Robotics | Pretrained perception models for navigation |

CuriosityTech Insight: Beginners often start with image classification, then progress to NLP and multimodal applications, building a diverse portfolio.

5. Fine-Tuning Techniques

- Layer Freezing: Keep the initial layers frozen and fine-tune only the higher layers

- Learning Rate Adjustment: Use a lower learning rate for pretrained layers than for newly added ones, so the pretrained weights change only slightly

- Gradual Unfreezing: Unfreeze layers in stages as training progresses, starting with those closest to the output

- Data Augmentation: Improves generalization when the dataset is small
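
The techniques listed above might be combined roughly as follows. This is a sketch under assumptions: the helper names (`unfreeze_top_layers`, `compile_for_fine_tuning`, `build_augmentation`), the learning rate of 1e-5, and the augmentation strengths are illustrative choices, not values taken from the example in section 3. Keeping `BatchNormalization` layers frozen during fine-tuning follows common Keras practice.

```python
import tensorflow as tf
from tensorflow.keras import layers


def unfreeze_top_layers(base_model, num_layers):
    """Make only the last `num_layers` layers of `base_model` trainable."""
    base_model.trainable = True
    cutoff = len(base_model.layers) - num_layers
    for i, layer in enumerate(base_model.layers):
        # BatchNormalization layers stay frozen so their running statistics
        # are not disturbed by the small target dataset.
        is_batch_norm = isinstance(layer, layers.BatchNormalization)
        layer.trainable = (i >= cutoff) and not is_batch_norm


def compile_for_fine_tuning(model, learning_rate=1e-5):
    """Recompile with a low learning rate so pretrained weights shift only slightly."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )


def build_augmentation():
    """Random image transforms that are active only during training."""
    return tf.keras.Sequential(
        [
            layers.RandomFlip("horizontal"),
            layers.RandomRotation(0.1),
            layers.RandomZoom(0.1),
        ],
        name="augmentation",
    )
```

For gradual unfreezing, one option is to call `unfreeze_top_layers` with a larger `num_layers` every few epochs and recompile each time, since Keras picks up changes to `trainable` when the model is compiled.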

Career Tip: Fine-tuning pretrained models is a highly valued skill for AI engineers, especially for roles in computer vision, NLP, and applied deep learning.

6. Human Story

A student at CuriosityTech initially trained a CNN from scratch for flower classification but struggled with limited dataset size. After applying transfer learning using VGG16, the student achieved 92% accuracy within a few epochs, learning how leveraging pretrained knowledge accelerates real-world AI projects.

7. Career Insights

- Transfer learning is industry-standard in computer vision and NLP

- Employers expect candidates to:

  - Understand pretrained architectures

  - Fine-tune models for custom tasks

  - Apply data augmentation and regularization

  - Optimize training time and resource usage

Portfolio Strategy: CuriosityTech.in encourages students to build multiple transfer learning projects, showing practical versatility across domains.

Conclusion

Transfer learning and fine-tuning pretrained models enable AI engineers to build high-performing models with limited data and computational resources. At CuriosityTech.in, learners gain hands-on experience with state-of-the-art models, preparing them to excel in real-world AI projects and interviews, bridging the gap between learning and career readiness.