Introduction

Navigation is a core capability of autonomous robots, enabling them to move efficiently through their surroundings, including environments they have never mapped. SLAM (Simultaneous Localization and Mapping) is a cornerstone technology in robotics that allows a robot to build a map of its environment while estimating its own position within that map in real time. Understanding navigation and SLAM is essential for engineers working on autonomous vehicles, drones, and mobile robots.

At CuriosityTech.in, learners gain access to hands-on tutorials, simulation exercises, and project-based learning to master autonomous navigation and SLAM algorithms.

1. What is Autonomous Robot Navigation?

Autonomous navigation is the ability of a robot to move from a starting point to a destination without human intervention, avoiding obstacles, planning optimal paths, and adapting to environmental changes.

Core Requirements:

- Environmental perception (sensors: LiDAR, ultrasonic, cameras).

- Localization (knowing the robot’s position in the environment).

- Path planning (finding the optimal route).

- Motion control (driving motors accurately to follow the path).
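To make the motion-control requirement concrete, here is a minimal sketch of a proportional go-to-goal controller for a differential-drive robot. The robot is modeled as a unicycle that accepts a forward speed v and a turn rate w. The sketch assumes the pose is already known; in practice it comes from localization. The function names, gains, and speed limit are illustrative assumptions.

```python
import math


def go_to_goal(pose, goal, k_lin=0.5, k_ang=2.0, max_v=0.3):
    """Return (v, w) commands steering a unicycle-model robot toward goal.

    pose is (x, y, theta) in metres and radians; goal is (x, y).
    The gains and speed limit are illustrative assumptions, not tuned values.
    """
    x, y, theta = pose
    dx, dy = goal[0] - x, goal[1] - y
    distance = math.hypot(dx, dy)
    # Heading error wrapped to [-pi, pi] so the robot turns the short way round.
    heading_error = math.atan2(dy, dx) - theta
    heading_error = math.atan2(math.sin(heading_error), math.cos(heading_error))
    v = min(k_lin * distance, max_v)  # slow down as the goal gets closer
    w = k_ang * heading_error         # turn toward the goal
    return v, w


def simulate(pose, goal, dt=0.1, steps=300, tol=0.05):
    """Integrate unicycle kinematics until the robot is within tol of goal."""
    x, y, theta = pose
    for _ in range(steps):
        if math.hypot(goal[0] - x, goal[1] - y) < tol:
            break
        v, w = go_to_goal((x, y, theta), goal)
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return x, y, theta


print(simulate((0.0, 0.0, 0.0), (1.0, 1.0)))  # ends within 5 cm of (1, 1)
```

Commanding a speed proportional to the remaining distance makes the robot slow down smoothly near the goal instead of overshooting it.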

2. Understanding SLAM (Simultaneous Localization and Mapping)

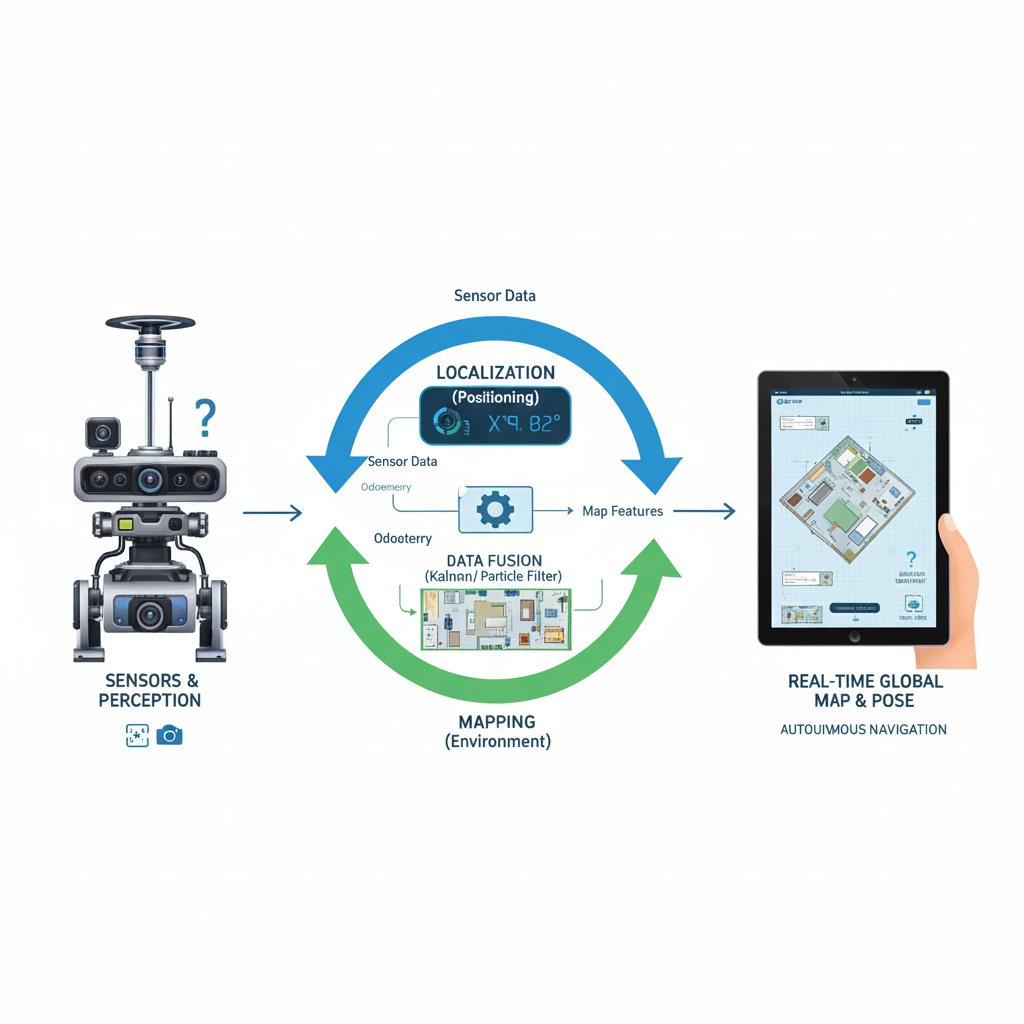

SLAM is a process where a robot constructs a map of an unknown environment while simultaneously keeping track of its location within that map.

Key Components:

- Mapping: Creating a representation of the environment using sensors.

- Localization: Estimating the robot’s position within the map.

- Sensor Fusion: Combining data from LiDAR, IMU, cameras, and wheel encoders.

- State Estimation: Using algorithms like Kalman Filter or Particle Filter to reduce uncertainty.
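The state-estimation idea is easiest to see in one dimension. The sketch below is a scalar Kalman filter for a robot moving along a line:

- The predict step moves the estimate by an odometry reading and inflates its variance.
- The update step blends in a direct position measurement.

The function names and noise values are hypothetical. EKF-SLAM applies the same two steps to a vector state, using Jacobians of nonlinear motion and measurement models.

```python
def kalman_predict(x, P, u, Q):
    """Predict: shift the estimate by control input u (e.g. odometry); variance grows by Q."""
    return x + u, P + Q


def kalman_update(x, P, z, R):
    """Update: blend in a position measurement z whose variance is R."""
    K = P / (P + R)          # Kalman gain: how much to trust the measurement
    x_new = x + K * (z - x)  # correct the estimate by a fraction of the innovation
    P_new = (1 - K) * P      # uncertainty shrinks after the measurement
    return x_new, P_new


x, P = kalman_predict(0.0, 1.0, u=1.0, Q=0.1)
x, P = kalman_update(x, P, z=1.2, R=0.5)
print(x, P)
```

Starting from x = 0 with variance 1, predicting with u = 1.0 (Q = 0.1) gives a prior variance of 1.1. Updating with z = 1.2 (R = 0.5) then gives a gain of 0.6875, an estimate of 1.1375, and a variance of 0.34375. That variance is lower than either the prediction or the measurement alone.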

Diagram Idea: Robot with sensors creating a map, updating its position in real-time using SLAM feedback loops.

3. Common Sensors Used in SLAM

4. SLAM Algorithms

1. EKF-SLAM (Extended Kalman Filter)

- Models the robot’s pose and all landmark positions as a single joint Gaussian distribution (a mean vector and a covariance matrix).

- Best suited to small environments with few landmarks, because the covariance matrix grows quadratically with the number of landmarks.
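EKF-SLAM's scaling limit follows directly from the size of the filter's state. This hypothetical helper assumes a 2D robot pose (x, y, θ) and point landmarks stored as (x, y). It computes the state length and the number of covariance entries the EKF must keep and update.

```python
def ekf_slam_sizes(num_landmarks, pose_dim=3, landmark_dim=2):
    """State length and dense-covariance entry count for a 2D EKF-SLAM filter.

    The state stacks the robot pose with one (x, y) pair per landmark, and the
    filter keeps a full covariance matrix over that entire state.
    """
    n = pose_dim + landmark_dim * num_landmarks
    return n, n * n


for landmarks in (10, 100, 1000):
    n, entries = ekf_slam_sizes(landmarks)
    print(f"{landmarks} landmarks -> state length {n}, covariance entries {entries:,}")
```

For 10, 100, and 1000 landmarks this gives states of 23, 203, and 2003 elements. The corresponding covariance matrices have 529, 41,209, and 4,012,009 entries. Each EKF update touches the full covariance, so per-update cost grows quadratically as well.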

2. Particle Filter / FastSLAM

- Represents uncertainty using a set of particles.

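- Scales better than EKF-SLAM to larger environments; in FastSLAM each particle carries its own map, with a small independent filter for each landmark.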
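The core particle-filter loop is predict, weight, resample. The sketch below runs that loop for a robot localizing itself along a corridor from range readings to a single landmark at a known position. This is plain Monte Carlo localization, not full FastSLAM, which additionally gives each particle its own map with a small EKF per landmark. The noise levels and function name are assumptions for illustration.

```python
import math
import random


def particle_filter_step(particles, u, z, landmark, motion_std=0.1, meas_std=0.2):
    """One predict-weight-resample cycle for a robot moving along a line.

    particles: candidate positions; u: odometry displacement since the last step;
    z: measured distance to a landmark at the known position `landmark`.
    """
    # Predict: shift every particle by the odometry reading plus motion noise.
    moved = [p + u + random.gauss(0.0, motion_std) for p in particles]
    # Weight: particles whose expected range matches z score higher (Gaussian likelihood).
    weights = [math.exp(-0.5 * ((z - abs(landmark - p)) / meas_std) ** 2) for p in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # every particle implausible: fall back to uniform
    # Resample: draw a new particle set in proportion to the weights.
    return random.choices(moved, weights=weights, k=len(moved))


random.seed(0)
true_x, landmark = 2.0, 10.0
particles = [random.uniform(0.0, 10.0) for _ in range(500)]
for _ in range(5):
    true_x += 1.0
    particles = particle_filter_step(particles, 1.0, landmark - true_x, landmark)
print(sum(particles) / len(particles))  # close to the true position, 7.0
```

The particles start spread uniformly over the whole corridor. After a few steps they collapse around the true position. This shows how the particle set represents uncertainty without assuming it is Gaussian.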

3. Graph-Based SLAM

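- Represents the problem as a pose graph: nodes are robot poses (and optionally landmarks), and edges are spatial constraints from odometry, scan matching, or loop closures.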

- Solved with nonlinear least-squares optimization, which finds the node positions that best satisfy all constraints at once.
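A one-dimensional pose graph shows the least-squares idea without the nonlinear machinery. In the sketch below, four poses lie on a line:

- Three odometry edges each report a 1.0 m step.
- A loop-closure edge reports that pose 3 is only 2.7 m from pose 0.

Each edge adds its weighted residual to the normal equations H x = b. A weak prior pins pose 0 at the origin so that H is solvable. Real graph-based SLAM uses 2D or 3D poses and repeats this linearize-and-solve step, for example with Gauss-Newton. The helper names and edge values here are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_graph(num_poses, edges):
    """edges: (i, j, measured x_j - x_i, weight). Returns least-squares poses."""
    H = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, w in edges:
        # Residual x_j - x_i - z contributes w * (residual)^2 to the cost.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    H[0][0] += 1.0  # prior anchoring x0 at 0; without it H is singular
    return solve(H, b)


edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0), (0, 3, 2.7, 1.0)]
poses = optimize_pose_graph(4, edges)
print(poses)  # approximately [0.0, 0.925, 1.85, 2.775]
```

With equal weights, every edge absorbs part of the 0.3 m disagreement. The last odometry step does not carry all of it, which is what loop closure buys in a real pose graph.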

4. Visual SLAM (vSLAM)

- Uses camera images to extract features and track robot motion.

- Suitable for GPS-denied environments.
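Feature tracking ultimately reduces to estimating motion from matched points. The sketch below solves a simplified planar version. It assumes features have already been detected, matched between two frames, and expressed as 2D positions. A monocular vSLAM system instead works with pixel coordinates and estimates 3D motion. Given the matched points, the sketch finds the least-squares rotation and translation between the two sets in closed form. If the features are static, the camera's own motion is the inverse of this transform. The function name is hypothetical.

```python
import math


def estimate_rigid_motion_2d(prev_pts, curr_pts):
    """Least-squares (theta, tx, ty) mapping matched prev_pts onto curr_pts.

    Assumes a one-to-one match between the lists and purely planar motion.
    """
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        # Work with centroid-relative coordinates so translation drops out.
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy
        s_dot += px * qx + py * qy
        s_cross += px * qy - py * qx
    theta = math.atan2(s_cross, s_dot)  # rotation that best aligns the two sets
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated previous centroid onto the current centroid.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty


theta_true, t_true = 0.3, (0.5, -0.2)
prev = [(1.0, 0.0), (0.0, 1.0), (2.0, 2.0), (-1.0, 0.5)]
c, s = math.cos(theta_true), math.sin(theta_true)
curr = [(c * x - s * y + t_true[0], s * x + c * y + t_true[1]) for x, y in prev]
print(estimate_rigid_motion_2d(prev, curr))  # recovers about (0.3, 0.5, -0.2)
```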

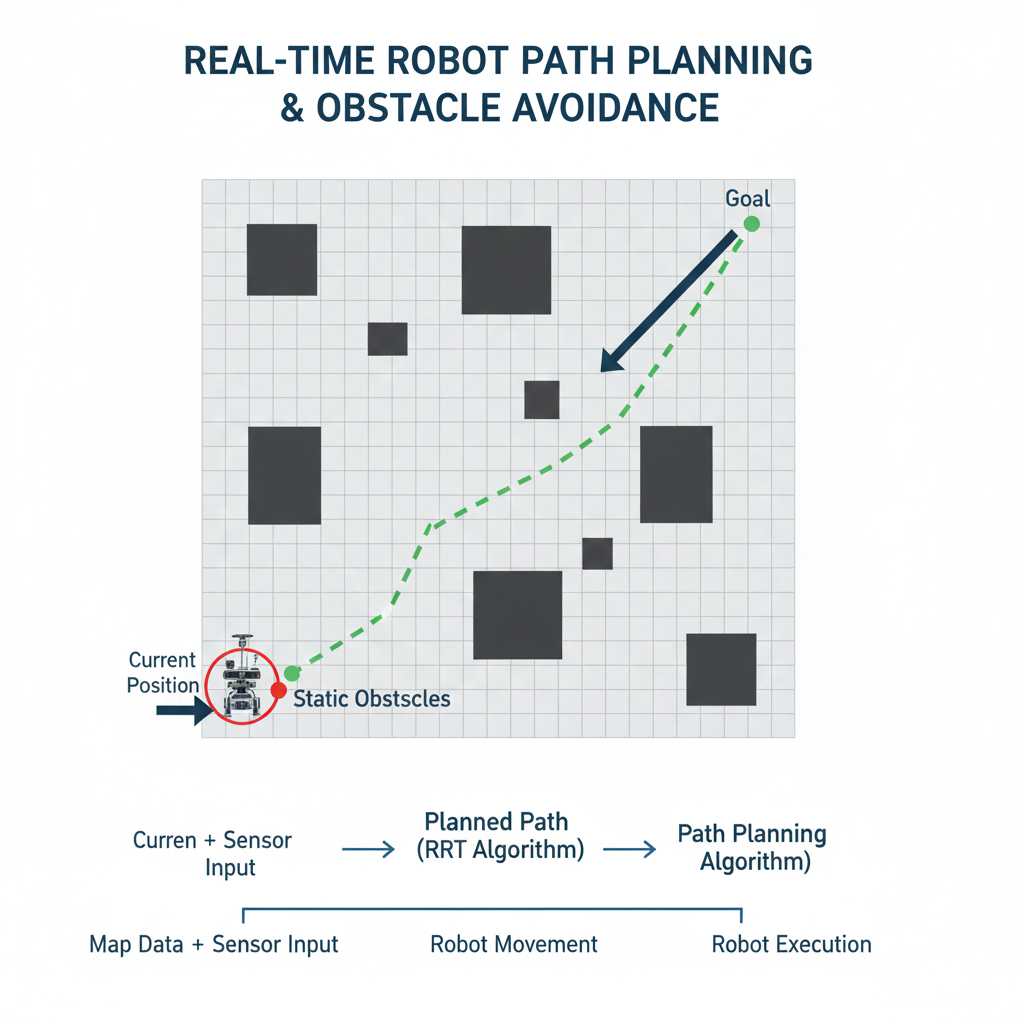

5. Path Planning Algorithms

| Algorithm | Description | Use Case |
|---|---|---|
| Dijkstra | Finds the shortest path in a weighted graph | Static obstacle maps |
| A* (A-Star) | Shortest-path search guided by a heuristic; optimal when the heuristic never overestimates the remaining cost | Efficient path planning on known maps |
| RRT (Rapidly-Exploring Random Tree) | Sampling-based planner that grows a random tree through the configuration space | High-dimensional, dynamic environments |
| DWA (Dynamic Window Approach) | Local motion planning with velocity constraints | Mobile robot obstacle avoidance |
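A* is compact enough to sketch in full. The version below searches a 4-connected occupancy grid (0 = free, 1 = obstacle) with unit step costs. It uses a Manhattan-distance heuristic, which never overestimates on such a grid, so the returned path is a shortest one. Replacing the heuristic with zero turns the same code into Dijkstra's algorithm. The grid encoding and function name are assumptions for illustration.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected grid (0 = free, 1 = obstacle) with unit step costs.

    Returns the path as a list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route to this cell was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

The only gap in the middle wall is at column 2, so the shortest path detours through it.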

Diagram Idea: Map showing robot, obstacles, planned path (A* or RRT), and current robot position.

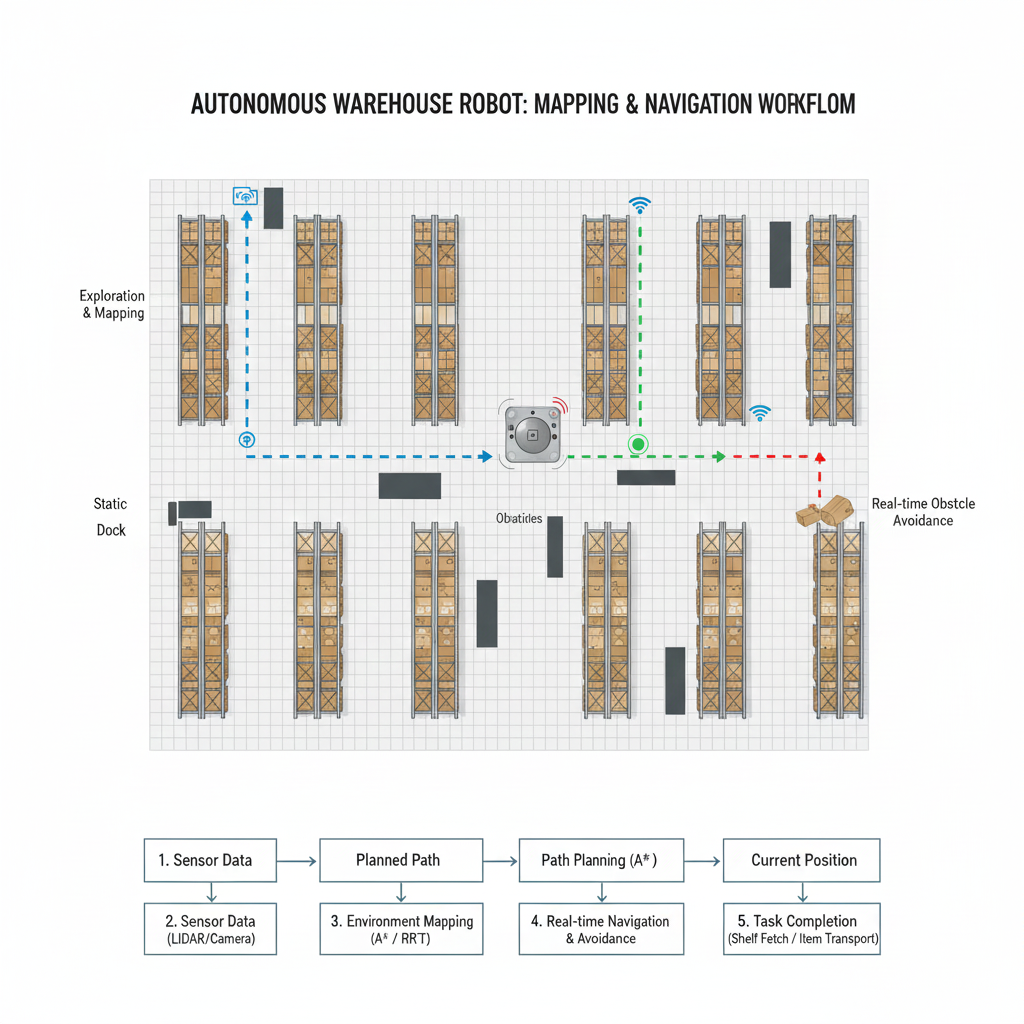

6. Practical Project Example: Autonomous Indoor Robot

Objective: Build a mobile robot that navigates a room autonomously using SLAM.

Components:

- Sensors: LiDAR for mapping, IMU for orientation, wheel encoders for odometry.

- Controller: Raspberry Pi for SLAM and path planning, Arduino for motor control.

- Actuators: DC motors with motor drivers.
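The wheel encoders provide odometry by dead reckoning. The sketch below assumes a differential-drive base and signed tick counts accumulated since the last update. It converts ticks to wheel travel, then advances the pose along the average heading over the interval. The wheel radius, wheel base, and tick resolution used below are hypothetical values. Errors in these parameters are what the Sensor Calibration step targets, and any residual error accumulates as drift.

```python
import math


def update_odometry(pose, left_ticks, right_ticks, ticks_per_rev, wheel_radius, wheel_base):
    """Dead-reckon a differential-drive pose from encoder tick increments.

    pose is (x, y, theta); wheel_radius and wheel_base are in metres.
    """
    x, y, theta = pose
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_center = (d_left + d_right) / 2.0        # distance travelled by the axle midpoint
    d_theta = (d_right - d_left) / wheel_base  # change in heading
    # Midpoint integration: move along the average heading over the interval.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta


# Hypothetical robot: 1000 ticks/rev, 5 cm wheel radius, 30 cm wheel base.
print(update_odometry((0.0, 0.0, 0.0), 1000, 1000, 1000, 0.05, 0.3))  # straight ahead
print(update_odometry((0.0, 0.0, 0.0), -750, 750, 1000, 0.05, 0.3))   # turn in place
```

With these values, 1000 ticks on both wheels moves the robot about 0.314 m straight ahead. A count of -750 on the left wheel and +750 on the right turns it in place by 90°.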

Implementation Steps:

- Sensor Calibration: Calibrate LiDAR, IMU, and wheel encoders.

- SLAM Setup: Configure a ROS-based SLAM package such as GMapping or Cartographer.

- Mapping: Drive the robot around the environment to generate a map.

- Path Planning: Use a global planner such as A* to plan a path to the target, and a local planner such as DWA to follow it around nearby obstacles.

- Autonomous Navigation: The robot follows the path while avoiding obstacles in real time.

- Feedback Loop: Continuously update the map and the robot’s position estimate using SLAM.
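The map produced in the Mapping step is commonly an occupancy grid; ROS, for example, represents maps with the nav_msgs/OccupancyGrid message. The sketch below shows one standard way to build such a grid: a log-odds update for a single LiDAR beam. Cells the beam passed through become more likely free, and the cell where it ended becomes more likely occupied. This is not the internal algorithm of GMapping or Cartographer. The following are all assumptions for illustration:

- the 0.7/0.3 sensor-model probabilities
- the dictionary-based grid
- the function names
- sampling the ray at half-cell steps instead of tracing it exactly with Bresenham's line algorithm

```python
import math

# Illustrative inverse sensor model: a hit means 70 % occupied, a pass-through 30 %.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.3 / 0.7)


def to_cell(x, y, resolution):
    """Map a world coordinate in metres to an integer grid index."""
    return (math.floor(x / resolution), math.floor(y / resolution))


def integrate_beam(log_odds, pose, beam_angle, beam_range, resolution=0.1):
    """Update a dict-based log-odds grid with one LiDAR range reading.

    pose is the sensor pose (x, y, theta); beam_angle is relative to theta.
    """
    x0, y0, theta = pose
    a = theta + beam_angle
    end = to_cell(x0 + beam_range * math.cos(a), y0 + beam_range * math.sin(a), resolution)
    # Sample the ray at half-cell steps (a simplification of exact ray tracing).
    step = resolution / 2.0
    free = set()
    for k in range(int(beam_range / step)):
        d = k * step
        cell = to_cell(x0 + d * math.cos(a), y0 + d * math.sin(a), resolution)
        if cell != end:
            free.add(cell)
    for cell in free:
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    log_odds[end] = log_odds.get(end, 0.0) + L_OCC


def probability(log_odds_value):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds_value))


grid = {}
integrate_beam(grid, (0.05, 0.05, 0.0), 0.0, 0.5)
print(round(probability(grid[(5, 0)]), 3), round(probability(grid[(2, 0)]), 3))  # 0.7 0.3
```

Because log-odds updates simply add, repeated scans of the same wall push its cells steadily toward "occupied". A single spurious reading is outvoted over time.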

CuriosityTech.in provides ROS simulation tutorials, code examples, and SLAM visualization guides for learners.

7. Challenges in Autonomous Navigation

- Sensor noise and drift (especially in IMU and odometry).

- Dynamic obstacles (moving humans or objects).

- Computational complexity for large maps.

- Real-time decision-making constraints.

Tips to Overcome Challenges:

- Fuse multiple sensors for robust perception.

- Use planners that can replan quickly, such as a local planner like DWA, so the robot reacts to moving obstacles in time.

- Simulate robot behavior before hardware deployment.

- Regularly calibrate sensors and motors.
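Sensor fusion does not always need a full Kalman filter. A complementary filter, sketched below, fuses two heading sources:

- a gyro's turn rate, which is smooth but drifts, as noted above for IMUs
- an absolute heading reference that is noisy but does not drift, such as a magnetometer or a SLAM pose estimate

The blend factor alpha = 0.98 and the function name are illustrative assumptions.

```python
import math


def complementary_heading(prev_heading, gyro_rate, reference_heading, dt, alpha=0.98):
    """Fuse a gyro turn rate with an absolute heading reference.

    Integrating the gyro is smooth but drifts with any bias; the reference
    does not drift but is noisy. alpha sets how much the gyro is trusted.
    """
    predicted = prev_heading + gyro_rate * dt
    # Wrapped difference so headings near +/-pi blend correctly.
    error = math.atan2(math.sin(reference_heading - predicted),
                       math.cos(reference_heading - predicted))
    fused = predicted + (1.0 - alpha) * error
    return math.atan2(math.sin(fused), math.cos(fused))


# Stationary robot whose gyro has a 0.05 rad/s bias, sampled at 100 Hz for 100 s.
heading = 0.0
for _ in range(10000):
    heading = complementary_heading(heading, 0.05, 0.0, 0.01)
print(heading)  # settles near 0.0245 rad; pure integration would reach 5 rad
```

The fused error stays bounded because each step pulls 2 % of the way back toward the reference. That correction balances the bias exactly when the error reaches about 0.0245 rad.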

8. Real-World Applications

- Autonomous Warehouse Robots: Navigate warehouse aisles for inventory management.

- Self-Driving Cars: Use LiDAR, cameras, and radar for city navigation.

- Service Robots: Deliver items in hospitals or hotels.

- Exploration Robots: Map unknown terrain (Mars rovers, underwater robots).

Diagram Idea: Example showing autonomous warehouse robot mapping the environment and navigating shelves.

9. Learning Tips for SLAM and Navigation

- Start with simulation platforms like ROS + Gazebo.

- Implement basic odometry and sensor fusion before full SLAM.

- Use small-scale indoor maps for testing.

- Gradually integrate path planning algorithms.

- Study real-world autonomous systems for practical insights.

Conclusion

Autonomous navigation and SLAM are critical technologies in robotics, enabling robots to map environments, plan optimal paths, and operate independently. Mastery of SLAM algorithms, sensor fusion, and path planning empowers robotics engineers to build truly autonomous mobile systems. Platforms like CuriosityTech.in provide hands-on projects, simulation tools, and tutorials, allowing learners to bridge the gap between theoretical knowledge and practical autonomous robotics applications.